As developers move beyond text chatbots to build truly conversational AI experiences, managing real-time WebRTC complexity becomes the primary challenge. Two frameworks have emerged as leaders in this space: Pipecat and LiveKit. Both aim to simplify the creation of low-latency voice agents, but they take fundamentally different approaches to achieve this goal.

As of December 2025, Pipecat (version 0.0.97) and LiveKit (version 1.9.7) represent the cutting edge of voice AI development. This comparison covers their architectural differences, their performance characteristics, and which framework best fits your project’s specific requirements.

Understanding the frameworks: core architectures

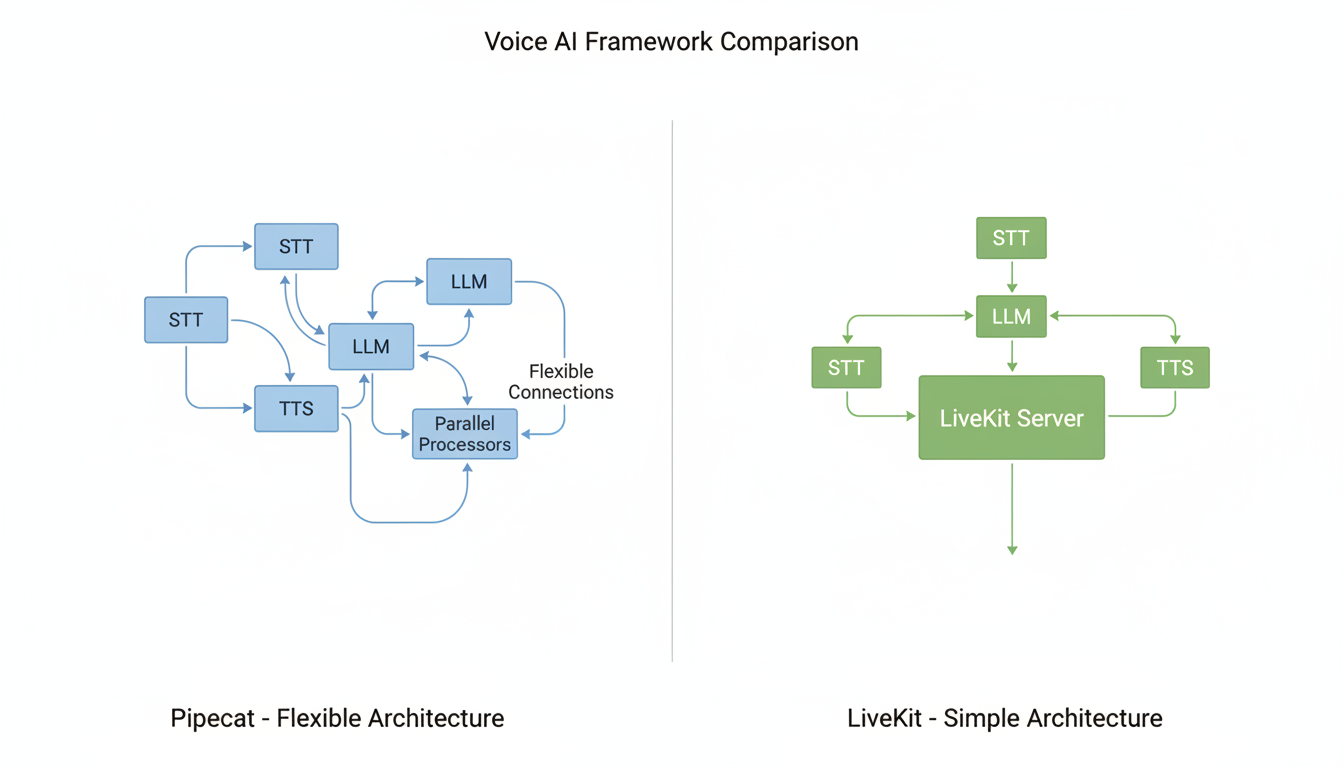

Pipecat and LiveKit approach voice AI from different philosophical foundations. Pipecat prioritizes flexibility and customization, while LiveKit emphasizes simplicity and scalability.

Pipecat: The flexible pipeline approach

Pipecat is an open-source Python framework designed for building real-time voice and multimodal conversational agents. Its core strength lies in its flexible pipeline architecture where developers can chain together processors that handle different aspects of audio processing.

- Complex pipeline support: Unlike simple linear pipelines, Pipecat supports parallel processing paths and complex data flows

- Extensive component library: Over 100 pre-built services including STT, TTS, LLM integrations, vision processing, and custom tools

- Transport flexibility: Supports Daily.co, LiveKit (audio-only), Twilio, and native WebRTC transports

- Smart turn detection: Uses Pipecat’s own Smart Turn v3 model for natural conversation flow
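To make the pipeline idea concrete, here is a minimal sketch written in plain Python `asyncio` rather than Pipecat's actual API. The `Processor` type and the `run_chain`, `run_parallel`, and `demo_pipeline` helpers are hypothetical names invented for this illustration. They only show how a frame can flow through chained stages and then fan out to parallel branches.

```python
import asyncio
from typing import Awaitable, Callable, List

# A "processor" here is any async function that transforms one frame.
# This is an illustrative stand-in, not Pipecat's own processor class.
Processor = Callable[[str], Awaitable[str]]


async def run_chain(frame: str, processors: List[Processor]) -> str:
    """Pass a frame through each processor in order (a linear pipeline)."""
    for process in processors:
        frame = await process(frame)
    return frame


async def run_parallel(frame: str, branches: List[List[Processor]]) -> List[str]:
    """Send the same frame down several chains concurrently."""
    return await asyncio.gather(*(run_chain(frame, branch) for branch in branches))


async def normalize(frame: str) -> str:
    return frame.strip().lower()


async def tag_transcript(frame: str) -> str:
    return f"transcript:{frame}"


async def tag_log(frame: str) -> str:
    return f"log:{frame}"


async def demo_pipeline() -> List[str]:
    cleaned = await run_chain("  Hello There ", [normalize])
    # Fan the cleaned frame out to two independent branches.
    return await run_parallel(cleaned, [[tag_transcript], [tag_log]])
```

Calling `asyncio.run(demo_pipeline())` runs the linear stage first and then both branches concurrently. A real Pipecat pipeline moves typed audio, text, and control frames instead of strings, but the composition pattern is similar in spirit.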

LiveKit: The streamlined approach

LiveKit Agents provides a Python and Node.js framework focused on simplicity and production scalability. It follows a more opinionated architecture that prioritizes ease of use and reliable performance.

- Linear pipeline design: Standard STT → LLM → TTS flow with minimal configuration complexity

- Built-in WebRTC infrastructure: Leverages LiveKit’s mature real-time communication platform

- Automatic scaling: Agents automatically spawn when users join rooms, optimized for high-concurrency scenarios

- Hardware-accelerated VAD: Custom voice activity detection optimized for real-time performance
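The "agents spawn when users join rooms" model can be pictured with a small sketch. This is plain `asyncio`, not the LiveKit Agents API: `AgentDispatcher`, `on_participant_joined`, and `demo_dispatch` are hypothetical names, and the agent body is a placeholder for the STT → LLM → TTS loop.

```python
import asyncio
from typing import Dict, List


class AgentDispatcher:
    """Toy dispatcher: starts one agent task the first time a room gets a user."""

    def __init__(self) -> None:
        self.tasks: Dict[str, asyncio.Task] = {}
        self.log: List[str] = []

    async def _agent(self, room: str) -> None:
        # A real agent would run its STT -> LLM -> TTS loop here.
        self.log.append(f"agent started in {room}")
        await asyncio.sleep(0)

    def on_participant_joined(self, room: str) -> None:
        # Spawn an agent only if this room does not already have one.
        if room not in self.tasks:
            self.tasks[room] = asyncio.create_task(self._agent(room))

    async def wait_all(self) -> None:
        await asyncio.gather(*self.tasks.values())


async def demo_dispatch() -> List[str]:
    dispatcher = AgentDispatcher()
    # The second join to "room-a" does not start another agent.
    for room in ["room-a", "room-b", "room-a"]:
        dispatcher.on_participant_joined(room)
    await dispatcher.wait_all()
    return dispatcher.log
```

In a production system the dispatch step would also pick a worker process or machine with spare capacity, which is the part a managed platform handles for you.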

Technical comparison: features and capabilities

When evaluating Pipecat vs LiveKit, several technical factors determine which framework better suits your needs.

| Feature | Pipecat | LiveKit |
|---|---|---|
| Latest Version (Dec 2025) | 0.0.97 | 1.9.7 |
| Primary Language | Python | Python, Node.js |
| Architecture Style | Flexible pipelines | Linear processing |
| Turn Detection | Smart Turn v3 | Custom open-weights model |
| Multimodal Support | Audio + Video + Images | Audio + Video |
| Transport Options | Multiple platforms | LiveKit platform only |
| Learning Curve | Moderate to steep | Gentle to moderate |
| Production Scalability | Good (requires orchestration) | Excellent (built-in scaling) |

Performance and latency considerations

Both frameworks prioritize low-latency performance, but achieve it through different means. Pipecat’s latest version (0.0.97) includes significant improvements to streaming synthesis latency, particularly with services like GeminiTTSService which now uses Google Cloud’s streaming API for better performance.

LiveKit benefits from its mature WebRTC infrastructure, which handles millions of concurrent calls for platforms like ChatGPT’s Advanced Voice Mode. Its turn detection model has been optimized for accuracy across multiple languages, with measurable improvements in recent releases.
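When comparing latency yourself, time to first audio chunk is usually the number that matters for perceived responsiveness. The sketch below shows one way to measure it for any async stream. `fake_tts_stream` is a made-up stand-in for a streaming TTS service, and `measure_stream` is a hypothetical helper, so neither belongs to either framework.

```python
import asyncio
import time
from typing import AsyncIterator, Tuple


async def fake_tts_stream(chunks: int, delay_s: float) -> AsyncIterator[bytes]:
    """Stand-in for a streaming TTS service: yields audio chunks with a delay."""
    for _ in range(chunks):
        await asyncio.sleep(delay_s)
        yield b"\x00" * 320  # placeholder audio payload


async def measure_stream(stream: AsyncIterator[bytes]) -> Tuple[float, float, int]:
    """Return (time to first chunk, total time, chunk count), times in seconds."""
    start = time.perf_counter()
    first = None
    count = 0
    async for _ in stream:
        if first is None:
            first = time.perf_counter() - start
        count += 1
    total = time.perf_counter() - start
    # If the stream was empty, report the total time as the first-chunk time.
    return (first if first is not None else total), total, count
```

Swapping `fake_tts_stream` for the audio iterator of a real service gives a like-for-like comparison between providers, as long as both runs use the same text and network conditions.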

Development experience comparison

The developer experience differs significantly between the two frameworks. Pipecat requires more explicit configuration but offers greater control, while LiveKit provides sensible defaults that work well out of the box.

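```python
# Pipecat example (more verbose but flexible)
# Illustrative fragment: imports and service credentials are omitted, and
# module paths have moved between Pipecat releases.
# DailyTransport takes (room_url, token, bot_name, params).
transport = DailyTransport(room_url, token, "Voice agent", DailyParams(vad_analyzer=SileroVADAnalyzer()))

pipeline = Pipeline([
    transport.input(),     # audio in from the call
    DeepgramSTTService(),  # speech to text
    OpenAILLMService(),    # text to response
    CartesiaTTSService(),  # response to speech
    transport.output(),    # audio back to the call
])
```

```python
# LiveKit example (cleaner API with defaults)
# VoicePipelineAgent is the Agents 0.x class; in Agents 1.x the same four
# components are passed to AgentSession instead.
from livekit.agents.pipeline import VoicePipelineAgent
from livekit.plugins import cartesia, deepgram, openai, silero

agent = VoicePipelineAgent(
    vad=silero.VAD.load(),
    stt=deepgram.STT(),
    llm=openai.LLM(),
    tts=cartesia.TTS(),
)
```

Use case scenarios: when to choose each framework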

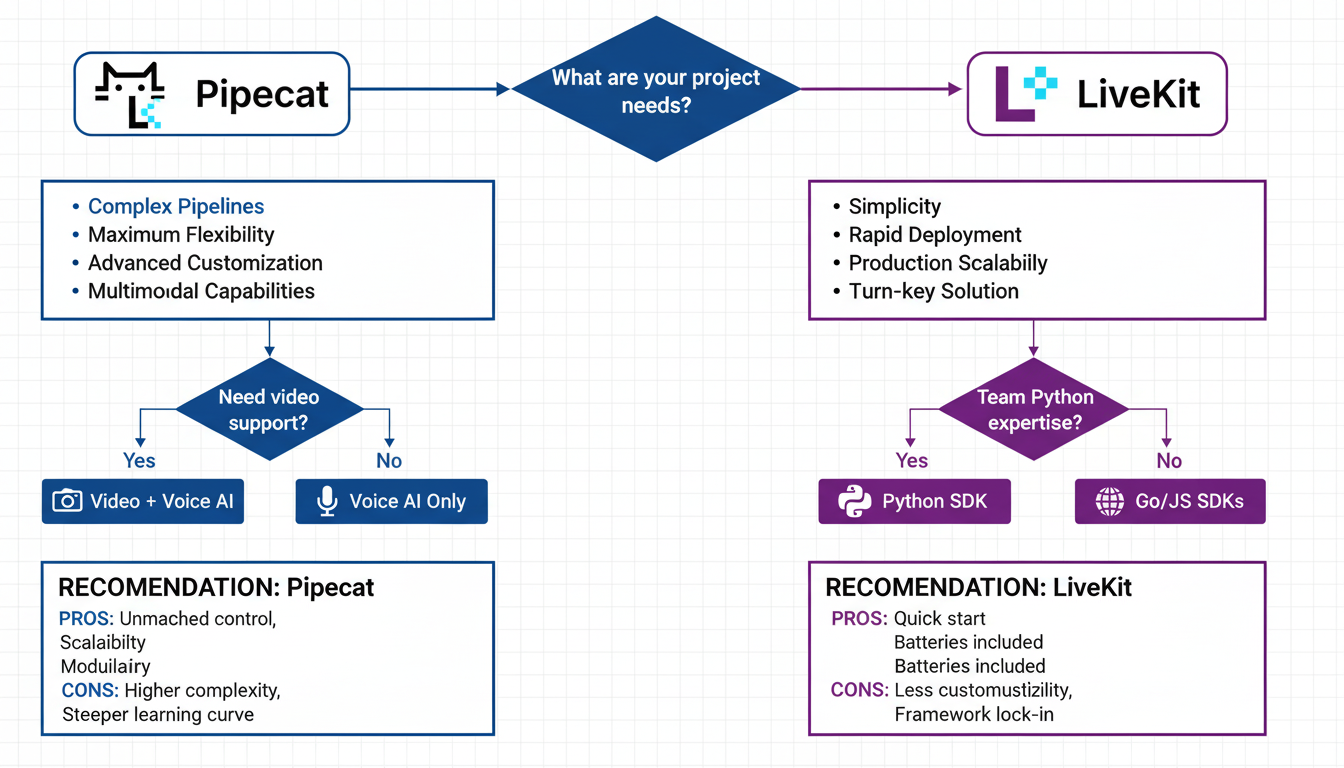

Your specific project requirements will determine whether Pipecat or LiveKit is the better choice.

Choose Pipecat when:

- Maximum flexibility needed: You need custom pipeline logic or parallel processing paths

- Multimodal requirements: Your agent needs to process video, images, or multiple data streams

- Existing infrastructure: You’re already using Daily.co, Twilio, or other WebRTC platforms

- Advanced customization: You need fine-grained control over every aspect of the conversation flow

- Research or experimentation: You’re building prototypes that require rapid iteration on pipeline design

Choose LiveKit when:

- Production scalability: You need to handle high volumes of concurrent conversations

- Rapid deployment: You want a turn-key solution with minimal configuration

- Team with mixed expertise: Your team includes developers with varying levels of WebRTC experience

- Video-first applications: Your use case primarily involves video interactions

- Enterprise reliability: You need proven infrastructure that powers major platforms

Recent developments and future outlook

Both frameworks continue to evolve rapidly. Pipecat’s recent releases (0.0.96 and 0.0.97) have focused on improving error handling, adding new service integrations, and enhancing text aggregation capabilities. The framework has matured significantly with better interruption handling and more robust pipeline management.

LiveKit has strengthened its position as a production-ready platform, with the 1.9.x series focusing on performance optimizations, better SIP integration, and improved data channel reliability. The recent Agent Builder launch (November 2025) demonstrates LiveKit’s commitment to making voice AI more accessible to non-technical users.

Implementation considerations

Cost and licensing

Both frameworks are open-source with commercial-friendly licenses. Pipecat uses the BSD 2-Clause license, while LiveKit uses the Apache 2.0 license for its agent framework. The primary cost considerations come from the underlying services (LLM APIs, STT/TTS services) and infrastructure hosting.
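Since the framework itself is free, a rough budget is just the sum of the per-minute service rates multiplied by usage. The sketch below is one way to write that arithmetic down. `PerMinuteRates` and `monthly_cost` are hypothetical names, and it assumes you have already converted token-based LLM pricing into an approximate per-minute figure.

```python
from dataclasses import dataclass


@dataclass
class PerMinuteRates:
    """Per-minute prices in USD. Fill these in from your own vendor quotes."""
    stt: float
    llm: float
    tts: float
    transport: float


def monthly_cost(rates: PerMinuteRates, minutes_per_call: float, calls_per_month: int) -> float:
    """Estimate monthly spend as (sum of per-minute rates) x minutes x calls."""
    per_minute = rates.stt + rates.llm + rates.tts + rates.transport
    return per_minute * minutes_per_call * calls_per_month
```

For example, `monthly_cost(PerMinuteRates(stt=0.01, llm=0.02, tts=0.03, transport=0.004), 5, 1000)` uses placeholder rates, not real prices, and simply shows how the four components add up before being scaled by call volume.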

Team skills and learning curve

Pipecat requires stronger Python async programming skills and understanding of pipeline architecture. LiveKit has a gentler learning curve but may feel restrictive for teams wanting maximum customization. Consider your team’s existing expertise when choosing between the frameworks.
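As a taste of the async skills involved, the sketch below shows barge-in handling: playback runs as a task and gets cancelled when the user interrupts. It is written in plain `asyncio` with hypothetical `speak` and `handle_turn` helpers, and the interruption is simulated with a timer instead of real voice activity detection.

```python
import asyncio
from typing import List


async def speak(words: List[str], spoken: List[str]) -> None:
    """Stand-in for TTS playback: 'plays' one word per tick."""
    for word in words:
        spoken.append(word)
        await asyncio.sleep(0.05)


async def handle_turn(words: List[str], interrupt_after_s: float) -> List[str]:
    """Start speaking, then cancel playback when the user barges in."""
    spoken: List[str] = []
    playback = asyncio.create_task(speak(words, spoken))
    await asyncio.sleep(interrupt_after_s)  # simulated user interruption
    playback.cancel()
    try:
        await playback
    except asyncio.CancelledError:
        pass  # expected: playback was interrupted on purpose
    return spoken
```

Running `asyncio.run(handle_turn(["one", "two", "three", "four"] * 5, 0.12))` returns only the words played before the interruption. Both frameworks handle this for you, but debugging a custom Pipecat processor tends to require being comfortable with tasks, cancellation, and queues like this.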

Deployment and operations

LiveKit offers smoother deployment through its cloud platform and built-in scaling mechanisms. Pipecat provides more deployment flexibility but requires more operational overhead for production scaling. Both frameworks benefit from containerization and modern DevOps practices.

Conclusion: making the right choice

The choice between Pipecat and LiveKit ultimately comes down to your specific requirements:

- Choose Pipecat if you need maximum flexibility, custom pipeline logic, multimodal capabilities, or are already invested in other WebRTC platforms

- Choose LiveKit if you prioritize production scalability, rapid deployment, video-first applications, or want a turn-key solution with proven enterprise reliability

Both frameworks represent excellent choices for building conversational AI applications in 2025. Pipecat’s flexibility makes it ideal for complex, customized solutions, while LiveKit’s simplicity and scalability make it perfect for production deployments requiring reliability and performance at scale.

As the voice AI landscape continues to evolve, both frameworks are likely to incorporate more speech-to-speech model support and further simplify the development experience. The best approach is to prototype with both frameworks using your specific use case requirements to determine which aligns better with your project goals and team capabilities.