If you’re working with OpenAI’s latest models, you’ve likely faced the question: should you stick with GPT-5 or upgrade to GPT-5.1? Released on November 12, 2025, GPT-5.1 is OpenAI’s most significant refinement of its flagship model since GPT-5 launched in August 2025. It is more than a minor update: it changes how the model allocates reasoning effort, how it sounds in conversation, and which tools it offers for enterprise deployment.

What GPT-5.1 brings to the table

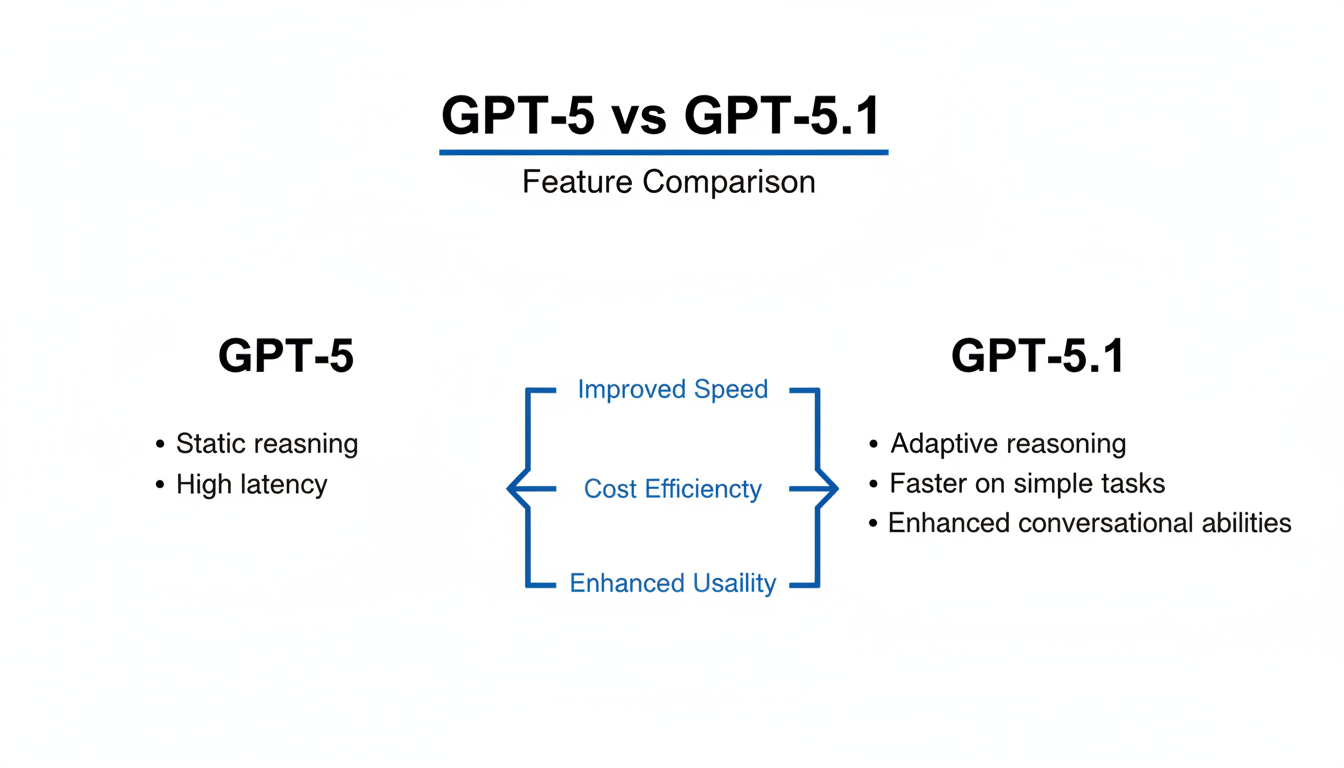

GPT-5.1 introduces two distinct variants that fundamentally change how developers and enterprises interact with AI:

- GPT-5.1 Instant: Optimized for speed and conversational fluency

- GPT-5.1 Thinking: Enhanced for complex reasoning tasks

The most significant advancement is adaptive reasoning—GPT-5.1 can now dynamically decide when to engage in deep thinking versus providing immediate responses. This means faster answers for simple queries while maintaining thorough analysis for complex problems.
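GPT-5.1 makes this decision internally, and in ChatGPT the Auto setting routes between Instant and Thinking for you. When calling the API directly, you can also set a reasoning budget per request. The sketch below is a hypothetical client-side heuristic, not how GPT-5.1 decides internally. It assumes the model id `gpt-5.1` and the Responses API’s `reasoning.effort` parameter with the values `"none"` and `"high"` as documented at GPT-5.1’s launch; `build_request` and its keyword list are illustrative, so check the current API reference before relying on them.

```python
import re

# Assumptions (verify against OpenAI's current API reference): the model id
# "gpt-5.1" and the Responses API reasoning-effort values "none" and "high".
MODEL = "gpt-5.1"

# Crude, illustrative signals that a prompt needs multi-step reasoning.
HARD_SIGNALS = re.compile(
    r"\b(prove|derive|debug|refactor|step[- ]by[- ]step|optimi[sz]e)\b",
    re.IGNORECASE,
)


def build_request(prompt: str, long_prompt_chars: int = 2000) -> dict:
    """Return kwargs for client.responses.create(), choosing a reasoning budget."""
    hard = len(prompt) > long_prompt_chars or HARD_SIGNALS.search(prompt) is not None
    return {
        "model": MODEL,
        "input": prompt,
        # Skip deliberate reasoning for quick lookups; allow deep reasoning otherwise.
        "reasoning": {"effort": "high" if hard else "none"},
    }


print(build_request("What's the capital of France?")["reasoning"])  # {'effort': 'none'}
print(build_request("Debug this race condition in my worker pool")["reasoning"])  # {'effort': 'high'}

# Usage with the official SDK (needs `pip install openai` and OPENAI_API_KEY):
# from openai import OpenAI
# client = OpenAI()
# response = client.responses.create(**build_request("Prove that the sum of two even numbers is even."))
# print(response.output_text)
```

Keyword heuristics like this are brittle. In many cases it is simpler to let GPT-5.1 adapt on its own and reserve explicit effort settings for request types you have measured.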

Performance benchmarks: Where GPT-5.1 excels

According to LLM Stats’ December 2025 analysis, GPT-5.1 shows measurable improvements across several key benchmarks:

| Benchmark | GPT-5 | GPT-5.1 | Change (percentage points) |
|---|---|---|---|
| GPQA | 85.7% | 88.1% | +2.4 |
| MMMU | 84.2% | 85.4% | +1.2 |
| SWE-Bench Verified | 74.9% | 76.3% | +1.4 |
| FrontierMath | 26.3% | 26.7% | +0.4 |
| Tau2 Airline | 62.6% | 67.0% | +4.4 |

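GPT-5 keeps a slight edge on AIME 2025 (94.6% vs 94.0%) and a clearer lead on Tau2 Retail (81.1% vs 77.9%). GPT-5.1 leads on the five benchmarks in the table, with gains ranging from 0.4 points on FrontierMath to 4.4 points on Tau2 Airline; its largest gains are in graduate-level science reasoning (GPQA) and agentic tool use (Tau2 Airline).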
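The benchmark differences above are absolute gaps in percentage points. Relative change can tell a different story, especially on low-scoring benchmarks such as FrontierMath. This small script recomputes both from the scores quoted in this section; the `SCORES` dictionary and `compare` helper are illustrative names.

```python
# GPT-5 and GPT-5.1 scores (%) as quoted above from LLM Stats' December 2025 analysis.
SCORES = {
    "GPQA": (85.7, 88.1),
    "MMMU": (84.2, 85.4),
    "SWE-Bench Verified": (74.9, 76.3),
    "FrontierMath": (26.3, 26.7),
    "Tau2 Airline": (62.6, 67.0),
    "AIME 2025": (94.6, 94.0),
    "Tau2 Retail": (81.1, 77.9),
}


def compare(scores):
    """Map each benchmark to (absolute change in points, relative change in %)."""
    result = {}
    for name, (gpt5, gpt51) in scores.items():
        diff = gpt51 - gpt5
        result[name] = (round(diff, 1), round(100 * diff / gpt5, 1))
    return result


# Print benchmarks from largest GPT-5.1 gain to largest GPT-5.1 loss.
for name, (points, relative) in sorted(
    compare(SCORES).items(), key=lambda item: item[1][0], reverse=True
):
    print(f"{name:<20} {points:+5.1f} pts  {relative:+5.1f}% relative")
```

FrontierMath’s +0.4 points works out to about 1.5% relative, while Tau2 Airline’s +4.4 points is about 7.0% relative, and Tau2 Retail’s −3.2 points is about −3.9% relative.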

Conversational improvements: More human, less robotic

One of the most noticeable differences between GPT-5 and GPT-5.1 is conversational quality. OpenAI specifically addressed user feedback requesting “AI that’s not only smart, but also enjoyable to talk to.” GPT-5.1 Instant defaults to a warmer, more conversational tone while maintaining clarity and usefulness.

In practice, GPT-5.1 tends to respond with more empathy and more natural phrasing. For customer service applications, that can make interactions feel less like talking to a machine and more like talking to a knowledgeable person.

Enterprise-ready features that matter

GPT-5.1 introduces several features specifically designed for enterprise deployment:

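- Extended prompt caching: Cached prompt prefixes can be retained for up to 24 hours instead of minutes, so long, repeated prompt segments keep the cheaper cached-input rate across a full day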

- Developer tools: apply_patch, which lets the model propose structured file edits that your application applies, and a shell tool, which lets the model propose commands for your environment to run

- Model choice and auto-routing: Intelligent task distribution between Instant and Thinking modes

These features target common enterprise pain points: cost predictability, integration complexity, and workflow optimization. For high-volume operations such as insurance underwriting or purchase-order processing, extended caching alone can cut input costs substantially, because repeated prompt prefixes are billed at the cached-input rate (one tenth of the standard input price) and are served with lower latency.
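Prompt caching matches on an identical prompt prefix, so it pays to put long, unchanging instructions first and per-request content last. A minimal sketch for a purchase-order workflow, assuming the Responses API parameter `prompt_cache_retention` with the value `"24h"` as described in OpenAI’s GPT-5.1 developer announcement (verify against the current API reference). The `build_po_request` helper and the instruction text are hypothetical.

```python
# Hypothetical request builder for a purchase-order extraction workflow.
# Assumption: "prompt_cache_retention": "24h" enables extended caching for gpt-5.1
# (per OpenAI's GPT-5.1 launch notes; check the current API reference).

# Identical on every call: this is the part that benefits from caching.
# OpenAI only caches prompts of at least 1,024 tokens, so real instructions
# (schemas, examples, policies) would be much longer than this placeholder.
STATIC_INSTRUCTIONS = (
    "You are a purchase-order extraction assistant. "
    "Return JSON with the keys vendor, order_date, line_items, and total. "
    "Use ISO 8601 dates and report amounts as decimal strings."
)


def build_po_request(document_text: str) -> dict:
    """Return kwargs for client.responses.create() with a cache-friendly prefix."""
    return {
        "model": "gpt-5.1",
        "input": [
            # Stable prefix first: identical across requests, so it can be cached.
            {"role": "system", "content": STATIC_INSTRUCTIONS},
            # Variable content last: changes per document, never part of the shared prefix.
            {"role": "user", "content": document_text},
        ],
        "prompt_cache_retention": "24h",
    }


first = build_po_request("PO 1001 from Acme Corp, 3 line items")
second = build_po_request("PO 1002 from Globex, 5 line items")
print(first["input"][0] == second["input"][0])  # True: same cacheable prefix
```

The key design choice is ordering: if per-document data appeared before the instructions, every request would have a different prefix and nothing could be reused from the cache.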

Pricing and cost efficiency

As of December 2025, GPT-5.1 maintains the same pricing structure as GPT-5:

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Cached input (per 1M tokens) |
|---|---|---|---|
| GPT-5 | $1.25 | $10.00 | $0.125 |
| GPT-5.1 | $1.25 | $10.00 | $0.125 |

Although list prices are identical, GPT-5.1 can still cost less in practice. With adaptive reasoning, simple tasks consume fewer reasoning tokens, which are billed as output tokens, and extended caching bills repeated prompt prefixes at the cached-input rate instead of the full input price.
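To make the arithmetic concrete, here is a small estimator using the per-token prices from the pricing table. The workload (10,000 requests, 8,000 input tokens each with 6,000 served from cache, 500 output tokens, and a scenario with 1,000 extra reasoning tokens) is hypothetical; reasoning tokens are counted as output tokens because that is how OpenAI bills them.

```python
# USD per 1M tokens for GPT-5 and GPT-5.1, from the pricing table (December 2025).
INPUT_PRICE = 1.25
CACHED_INPUT_PRICE = 0.125
OUTPUT_PRICE = 10.00


def request_cost(input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """Estimate one request's cost in USD.

    cached_tokens: the part of input_tokens served from the prompt cache.
    output_tokens: visible output plus any reasoning tokens (billed as output).
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (
        uncached * INPUT_PRICE
        + cached_tokens * CACHED_INPUT_PRICE
        + output_tokens * OUTPUT_PRICE
    ) / 1_000_000


REQUESTS = 10_000  # hypothetical daily volume
no_cache = REQUESTS * request_cost(8_000, 0, 500)
with_cache = REQUESTS * request_cost(8_000, 6_000, 500)
with_extra_reasoning = REQUESTS * request_cost(8_000, 6_000, 500 + 1_000)

print(f"no cache:              ${no_cache:.2f}")              # $150.00
print(f"6,000 cached tokens:   ${with_cache:.2f}")            # $82.50
print(f"+1,000 reasoning toks: ${with_extra_reasoning:.2f}")  # $182.50
```

In this scenario caching cuts the bill from $150.00 to $82.50, but 1,000 unnecessary reasoning tokens per request would more than erase that saving ($182.50). That is why spending less reasoning on simple tasks matters as much as caching.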

When to upgrade: Practical considerations

The decision to upgrade depends largely on your specific use case:

- Upgrade immediately if: You’re building customer-facing applications, need cost-efficient high-volume processing, or require more natural conversational AI

- Consider waiting if: You have stable production systems with GPT-5 that meet your current needs without issues

- Test thoroughly if: You rely on specific GPT-5 behaviors that might change with the new model

In ChatGPT, OpenAI keeps GPT-5 available to paid subscribers under legacy models for three months, allowing a gradual transition and testing. That window closes in February 2026, which gives teams time to evaluate GPT-5.1 against their own requirements.
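During the transition, a side-by-side regression check can catch behavior changes before users do, for example a warmer default tone adding a friendly preamble that breaks a parser expecting a bare value. A minimal, provider-agnostic sketch: each model is a plain function from prompt to answer, so the stubs can be swapped for real API calls (for instance, a wrapper returning `client.responses.create(model=..., input=prompt).output_text`). The stub answers and the `compare_models` helper are hypothetical, not measured model behavior.

```python
from typing import Callable, Dict, List, Tuple

# A test case pairs a prompt with a check the answer must pass.
Case = Tuple[str, Callable[[str], bool]]
Model = Callable[[str], str]


def compare_models(cases: List[Case], models: Dict[str, Model]) -> Dict[str, List[str]]:
    """Run every case against every model and return the failing prompts per model."""
    failures: Dict[str, List[str]] = {name: [] for name in models}
    for prompt, check in cases:
        for name, ask in models.items():
            if not check(ask(prompt)):
                failures[name].append(prompt)
    return failures


# Hypothetical stubs standing in for real API calls.
def stub_gpt5(prompt: str) -> str:
    return "42"


def stub_gpt51(prompt: str) -> str:
    return "Sure! The answer is 42."


cases: List[Case] = [
    # A downstream parser expects a bare number, so format drift is a real failure.
    ("Return only the number 42.", lambda answer: answer.strip() == "42"),
    ("What is 6 x 7?", lambda answer: "42" in answer),
]

print(compare_models(cases, {"gpt-5": stub_gpt5, "gpt-5.1": stub_gpt51}))
# {'gpt-5': [], 'gpt-5.1': ['Return only the number 42.']}
```

Keeping models as plain callables makes it easy to add more variants (for example, different reasoning-effort settings) without changing the harness.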

Real-world performance differences

In practical testing, users report noticeable improvements in several areas:

- Response quality: GPT-5.1 provides clearer explanations with less jargon

- Adaptive thinking time: GPT-5.1 Thinking is roughly twice as fast as GPT-5 Thinking on the simplest tasks and takes roughly twice as long on the most complex ones

- Instruction following: GPT-5.1 more reliably answers the exact question asked

For developers, the new apply_patch and shell tools are a significant advancement. The model proposes file edits and commands, and your integration applies and runs them. This moves GPT-5.1 beyond code generation into code modification and validation, both essential for CI/CD integration and production workflows.
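Because the shell tool leaves execution to your application, the boundary where a proposed command becomes a real process is the natural place for a guard. A minimal sketch, assuming commands arrive as plain strings; the allowlist, `is_allowed`, and `run_model_command` are hypothetical, and the tool’s actual request and response format is not shown here (see OpenAI’s tool documentation).

```python
import shlex
import subprocess

# Commands the integration is willing to run on the model's behalf (illustrative).
ALLOWED_COMMANDS = {"ls", "cat", "git", "pytest"}
# git subcommands that only read repository state (illustrative).
READ_ONLY_GIT = {"status", "diff", "log", "show"}
# Characters that enable chaining, substitution, or redirection in a shell.
SHELL_METACHARACTERS = set(";|&$`<>\n")


def is_allowed(command: str) -> bool:
    """Return True if a model-proposed shell command passes the allowlist."""
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return False
    try:
        argv = shlex.split(command)
    except ValueError:  # e.g. an unterminated quote
        return False
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        return False
    if argv[0] == "git" and (len(argv) < 2 or argv[1] not in READ_ONLY_GIT):
        return False
    return True


def run_model_command(command: str, timeout: float = 30.0) -> subprocess.CompletedProcess:
    """Run an allowed command without a shell and capture its output."""
    if not is_allowed(command):
        raise PermissionError(f"command rejected by allowlist: {command!r}")
    # shell=False (the default) executes argv directly, so no shell syntax is interpreted.
    return subprocess.run(shlex.split(command), capture_output=True, text=True, timeout=timeout)
```

Running argv directly with `shell=False` means an allowed command cannot chain others through shell syntax; the metacharacter check is a second line of defense. A production setup would typically add sandboxing on top of this.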

The bottom line: Is GPT-5.1 worth the upgrade?

GPT-5.1 is a meaningful evolution rather than a revolutionary leap. Its benchmark gains are modest, but its improvements land where they matter most for many teams: conversational quality, cost efficiency, and enterprise readiness. For most teams, the upgrade offers clear benefits with minimal disruption.

The key advantage isn’t raw intelligence alone but resource allocation. GPT-5.1’s adaptive reasoning aims to spend the right amount of computation on each task, which can make it both smarter and more cost-effective than its predecessor.

As AI continues to mature, the distinction between powerful models and practical models becomes increasingly important. GPT-5.1 bridges this gap effectively, offering enterprise-grade reliability without sacrificing the cutting-edge capabilities that made GPT-5 impressive in the first place.

For teams building the next generation of AI applications, GPT-5.1 provides the tools needed to deploy at scale with confidence. The question isn’t whether to upgrade, but when—and for most use cases, the answer is sooner rather than later.