Developers face a pivotal choice in open-source AI: Mistral Large 3, the new flagship from Mistral AI released December 2, 2025, or Meta’s established Llama 3 series, headlined by Llama 3.1 405B from July 2024. Mistral Large 3 uses a granular Mixture-of-Experts (MoE) architecture with 41B active parameters out of 675B total, and delivers frontier-level multimodal and multilingual performance under a permissive Apache 2.0 license. Llama 3.1 405B relies on the raw scale of its dense 405B design for reasoning. This guide compares benchmarks, efficiency, implementation, and use cases to help you pick the right model for your project, whether you are targeting edge deployment or complex agents.

Model specifications and architecture

Mistral Large 3 introduces a sparse MoE design optimized for efficiency, activating only 41B parameters per token while supporting a 256K context window and native vision capabilities. Trained on 3,000 NVIDIA H200 GPUs, it is available in base and instruct variants on Hugging Face. In contrast, Llama 3.1 405B is a dense, text-only transformer with a 128K context window; vision support arrived separately in the Llama 3.2 family, at smaller model sizes.

| Feature | Mistral Large 3 (Dec 2025) | Llama 3.1 405B (Jul 2024) |
|---|---|---|
| Parameters | 41B active / 675B total (MoE) | 405B dense |
| Context Window | 256K tokens | 128K tokens |
| Multimodal | Yes (text + vision) | No (text-only; vision in 3.2) |
| License | Apache 2.0 | Llama 3.1 Community License |
| API Pricing (input / output, USD per 1M tokens) | $0.40 / $2 (Medium 3 ref) | $1 / $1.8 (est.) |

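The MoE design lowers Mistral’s per-token compute and latency, because only 41B parameters are active for any given token, which helps in latency-sensitive enterprise deployments. All 675B parameters must still be held in memory, however. Llama’s dense design spends far more compute per token, which suits compute-heavy reasoning, while its 405B weights take less memory than Mistral’s at the same precision.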
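The parameter counts in the table can be turned into a rough compute comparison. The sketch below assumes the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass; that rule, and the function names, are illustrative assumptions rather than figures from Mistral or Meta.

```python
# Rough per-token compute comparison from the parameter counts in the table.
# Assumption (not from the article): a forward pass costs about 2 FLOPs per
# active parameter per token. Attention cost over long contexts and MoE
# routing overhead are ignored.

def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's weights used for each token."""
    return active_params / total_params


def flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs for one token."""
    return 2.0 * active_params


MISTRAL_ACTIVE, MISTRAL_TOTAL = 41e9, 675e9
LLAMA_DENSE = 405e9  # dense model: every parameter is active

frac = active_fraction(MISTRAL_ACTIVE, MISTRAL_TOTAL)
ratio = flops_per_token(LLAMA_DENSE) / flops_per_token(MISTRAL_ACTIVE)

print(f"Mistral Large 3 active fraction: {frac:.1%}")
print(f"Llama 3.1 405B vs Mistral Large 3 per-token FLOPs: {ratio:.1f}x")
```

Under this approximation Mistral Large 3 touches about 6% of its weights per token, and Llama 3.1 405B needs roughly ten times the per-token compute. Real throughput also depends on batching, interconnect, and kernel support, so treat these as order-of-magnitude figures.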

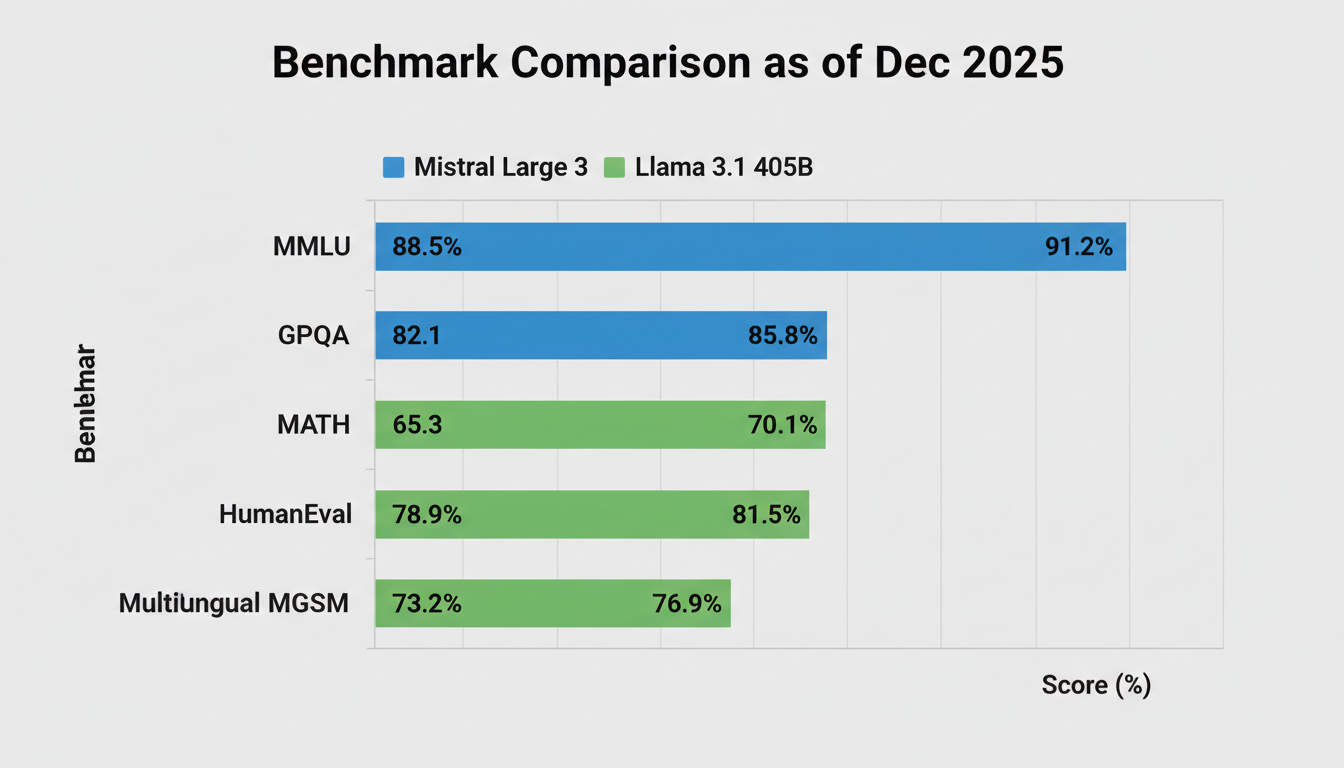

Performance benchmarks

Early benchmarks place Mistral Large 3 at #2 on LMSYS Arena among non-reasoning models (1418 Elo), with particular strength in multilingual tasks (40+ languages) and vision. Llama 3.1 405B leads in math (MATH: 73.9%) and coding (HumanEval: 89%). Mistral has the edge on efficiency, producing fewer tokens for similar outputs.

Data from Mistral.ai and Artificial Analysis (Dec 2025). Mistral Large 3 matches closed models like GPT-4o on non-English prompts.
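Token efficiency matters for cost because API usage is billed per token. The sketch below estimates per-request cost from the list prices in the specifications table. Those prices are themselves a Mistral Medium 3 reference figure and an estimate for Llama, and the token counts are a hypothetical workload, so the output is indicative only.

```python
# Estimated API cost per request from the list prices in the specifications
# table. The Mistral figures are the Medium 3 reference prices and the Llama
# figures are estimates, so the results are indicative only.

PRICES_PER_MILLION = {
    "mistral (Medium 3 ref)": {"input": 0.40, "output": 2.00},
    "llama-3.1-405b (est.)": {"input": 1.00, "output": 1.80},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request with the given token counts."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical request: 2,000 prompt tokens and 500 completion tokens.
for name in PRICES_PER_MILLION:
    print(f"{name}: ${request_cost(name, 2_000, 500):.6f} per request")
```

With these prices the Mistral reference rate is cheaper whenever output tokens are fewer than three times the input tokens; very output-heavy workloads would tip the other way, which is where producing fewer tokens per answer pays off.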

Efficiency and cost for developers

Mistral’s MoE cuts active compute by a reported 6x versus comparable dense models and runs on 8x H100s via vLLM. Llama 3.1 405B is heavier to serve but offers distillation potential. API costs favor Mistral for production, and the smaller Ministral 3 series runs on laptops and drones. Both support quantization (FP8/4-bit).
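Whether a given quantization level fits on a node can be checked with back-of-the-envelope arithmetic. This sketch counts weights only and assumes 80 GB per H100 and 1 GB = 1e9 bytes; KV cache, activations, and framework overhead are left out, so real requirements are higher. The helper names are illustrative.

```python
# Weights-only memory estimate at different precisions.
# Assumptions (not from the article): 80 GB per H100, 1 GB = 1e9 bytes, and
# no allowance for KV cache, activations, or framework overhead.

BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "4-bit": 0.5}


def weight_memory_gb(total_params: float, precision: str) -> float:
    """Memory needed to hold the weights alone, in GB."""
    return total_params * BYTES_PER_PARAM[precision] / 1e9


def fits(total_params: float, precision: str,
         gpus: int = 8, gb_per_gpu: float = 80.0) -> bool:
    """True if the weights alone fit in the node's combined GPU memory."""
    return weight_memory_gb(total_params, precision) < gpus * gb_per_gpu


for name, params in [("Mistral Large 3", 675e9), ("Llama 3.1 405B", 405e9)]:
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        print(f"{name:16s} {precision:5s} {gb:7.1f} GB  "
              f"fits 8x80GB: {fits(params, precision)}")
```

Under these assumptions the 675B weights come to 675 GB at FP8, above the 640 GB of an 8x H100 node, so a single node would need a 4-bit format. Check the model card for the quantized checkpoints actually published.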

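```python
# Example: inference with Mistral Large 3 (Hugging Face)
from transformers import pipeline

# device_map="auto" spreads the weights across the available GPUs
generator = pipeline(
    "text-generation",
    model="mistralai/Mistral-Large-3-675B-Instruct-2512",
    device_map="auto",
)

# max_new_tokens limits the generated text only; max_length would also count the prompt
output = generator("Explain MoE architecture", max_new_tokens=200)
print(output[0]["generated_text"])
```

Fine-tuning Mistral with Unsloth is reported to be about 2x faster. Llama needs more GPU hours but integrates seamlessly with Meta’s Llama Stack.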

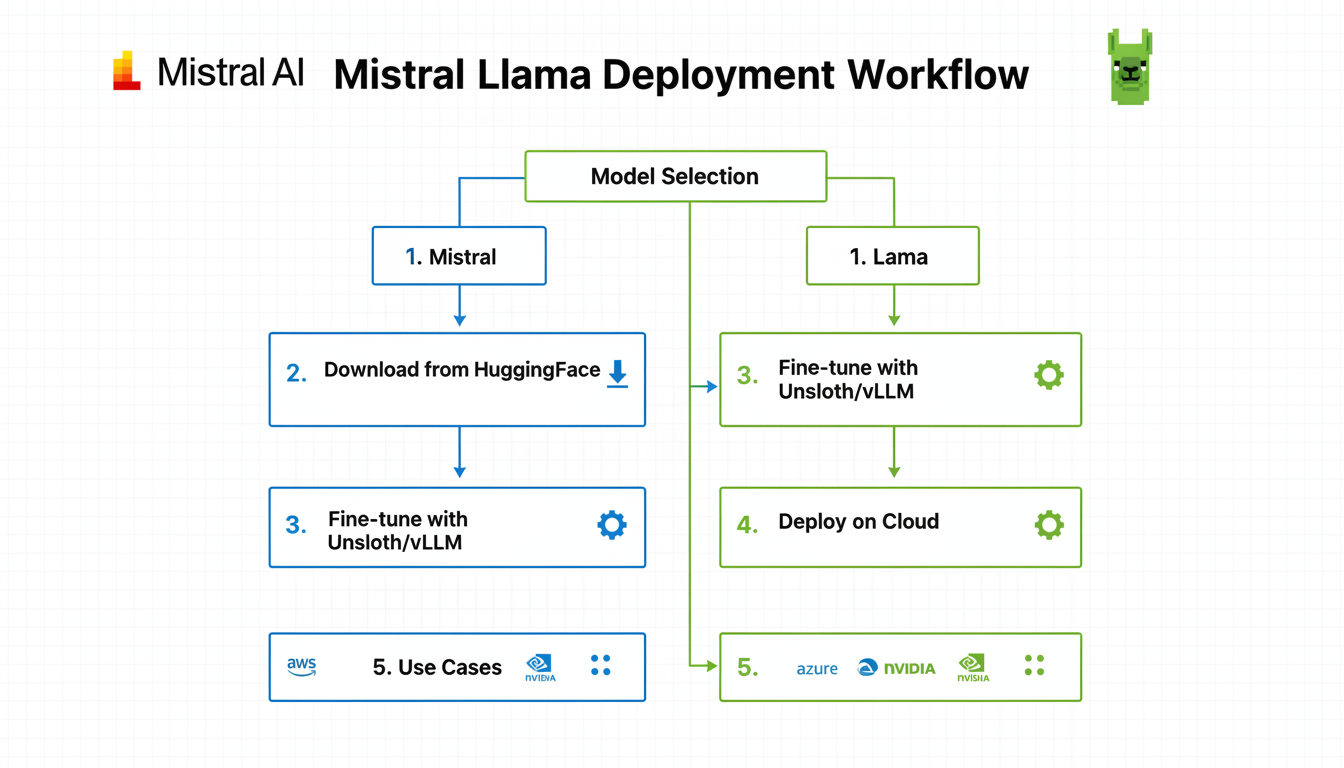

Practical implementation guide

Download both from Hugging Face. Mistral deploys via NVIDIA NIM/AWS Bedrock; Llama via Groq/Databricks. For agents, Mistral’s tool-calling and vision suit RAG pipelines.
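For the agent case, a tool-calling round trip usually means sending a tool schema with the chat request and parsing the tool call the model returns. The sketch below assumes an OpenAI-style chat completions format, which many serving stacks expose. The `search_documents` tool, its parameters, and the sample response are hypothetical and only illustrate the shape of the data.

```python
import json

# Hypothetical retrieval tool for a RAG pipeline. The name, description, and
# parameters are illustrative and not part of any Mistral or Llama API.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "search_documents",
        "description": "Retrieve passages relevant to a query.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string"},
                "top_k": {"type": "integer"},
            },
            "required": ["query"],
        },
    },
}


def build_request(model: str, user_message: str) -> dict:
    """Build an OpenAI-style chat completion payload that offers one tool."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [SEARCH_TOOL],
        "tool_choice": "auto",
    }


def extract_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Return (tool name, parsed arguments) pairs from a chat response."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    return [
        (call["function"]["name"], json.loads(call["function"]["arguments"]))
        for call in calls
    ]


# Hand-written response in the OpenAI chat completion shape, used only to
# exercise the parser. It is not real model output.
sample_response = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_0",
                "type": "function",
                "function": {
                    "name": "search_documents",
                    "arguments": '{"query": "refund policy", "top_k": 3}',
                },
            }],
        }
    }]
}

print(extract_tool_calls(sample_response))
```

In a real pipeline the parsed arguments would be passed to your retriever and the results sent back as a `tool` message. Tool calling often has to be enabled on the serving side, so check your server's documentation.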

Use vLLM for both: run `pip install vllm`, then `vllm serve mistralai/Mistral-Large-3-675B-Instruct-2512` (or the corresponding Llama repository ID). Test latency on your own hardware; Mistral generally wins on cost-efficiency.
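To test latency, a small script against the running server is usually enough. This sketch assumes a vLLM server is already up locally with its OpenAI-compatible API on port 8000; `BASE_URL`, `MODEL`, and the helper names are placeholders to adapt to your setup.

```python
import json
import statistics
import time
import urllib.request

# Assumptions: a vLLM server is already running locally and exposes its
# OpenAI-compatible API at BASE_URL. MODEL must match the name the server
# was started with. Both are placeholders.
BASE_URL = "http://localhost:8000/v1"
MODEL = "mistralai/Mistral-Large-3-675B-Instruct-2512"


def build_payload(model: str, prompt: str, max_tokens: int = 128) -> bytes:
    """Encode a chat completion request body as JSON bytes."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return json.dumps(body).encode("utf-8")


def timed_request(prompt: str) -> float:
    """Send one chat completion request and return wall-clock seconds."""
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=build_payload(MODEL, prompt),
        headers={"Content-Type": "application/json"},
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=300) as response:
        response.read()
    return time.perf_counter() - start


def summarize(latencies: list[float]) -> dict:
    """Median and worst-case latency for a batch of measurements."""
    return {"median_s": statistics.median(latencies), "max_s": max(latencies)}


def benchmark(prompt: str, runs: int = 5) -> dict:
    """Run several sequential requests and summarize their latency."""
    return summarize([timed_request(prompt) for _ in range(runs)])
```

Calling `benchmark("Explain MoE architecture")` once per model, with the same prompt and `max_tokens`, gives a like-for-like comparison. Sequential requests measure single-user latency only, so throughput under load needs a concurrent test.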

Use cases and recommendations

Choose Mistral Large 3 for multilingual chatbots, document AI, edge robotics (Ministral), or multimodal apps—its 256K context and Apache license enable full customization. Opt for Llama 3.1 405B in math-heavy (finance/science) or long-reasoning agents where scale trumps efficiency. Hybrid: Distill Llama knowledge into Mistral.

- Edge/low-cost: Ministral 3 > Llama 3.2 1B/3B

- Reasoning: Llama 3.1 405B > Mistral Large 3

- Multimodal enterprise: Mistral Large 3

"Mistral Large 3 debuts at #2 on LMSYS Arena, parity with top open models."

Mistral.ai, Dec 2025

Conclusion

Mistral Large 3 edges out Llama 3 on efficiency, multimodality, and license permissiveness for 2025 projects, especially for non-English and edge workloads. Llama 3.1 405B remains the stronger choice for pure reasoning power. Start with Mistral for most developer needs and test it via Hugging Face demos. Both model families evolve rapidly, so monitor updates and benchmark your own workload before committing.