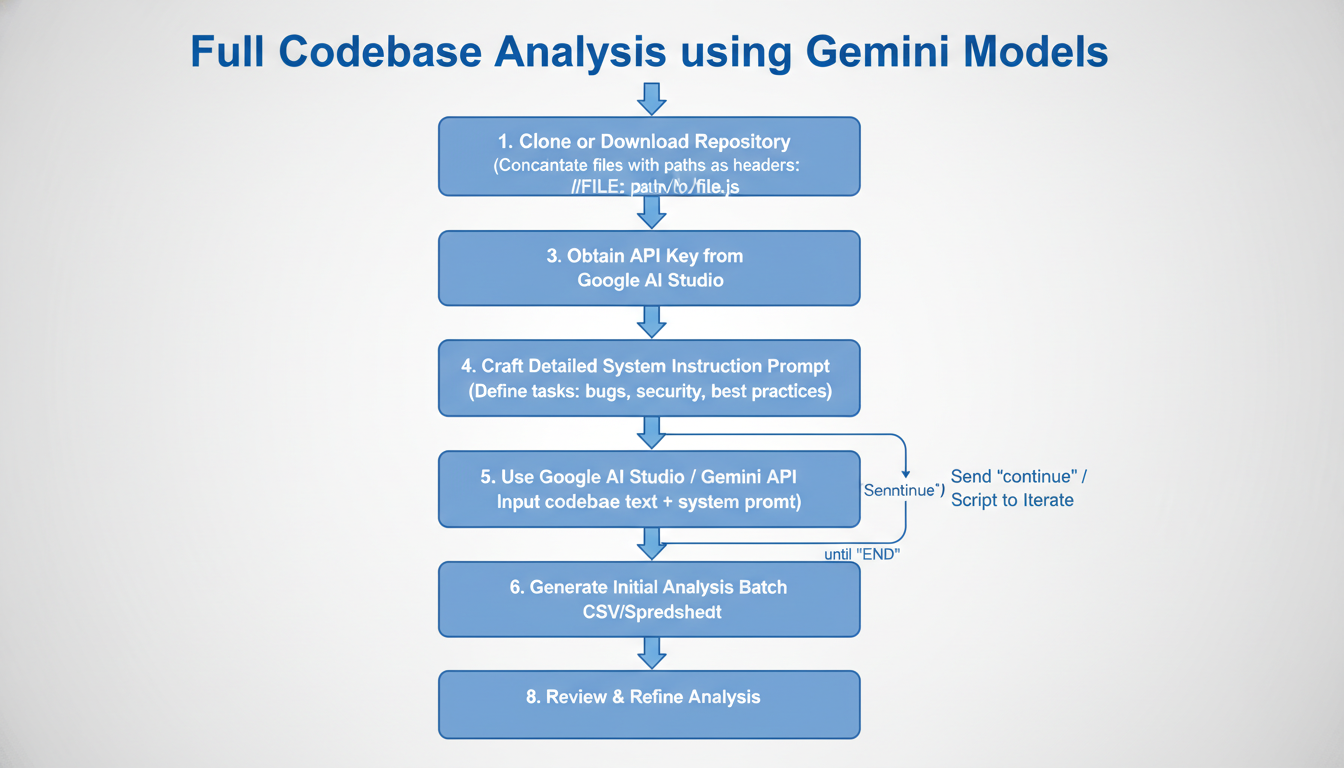

Struggling to onboard to a massive new codebase or hunt down elusive bugs across hundreds of files? Traditional tools like linters often miss the big picture, forcing you to piece together insights manually. Enter Gemini models from Google, with their massive context windows up to 1 million tokens or more. As of November 2025, Gemini 1.5 Pro has been retired, but its successors like stable Gemini 2.5 Pro (released June 2025) and preview Gemini 3 Pro (November 2025) carry the torch with equal or better long-context prowess. This guide walks you through analyzing an entire repository in one prompt, uncovering issues, generating docs, and boosting your dev workflow.

Why use Gemini for full codebase analysis

Modern codebases span many thousands of lines across frontend, backend, configs, and tests. Gemini's long context lets you feed in everything at once, with no chunking or RAG workarounds, as long as the repo fits within the token limit. Gemini 2.5 Pro is built for reasoning over code and can spot security flaws, anti-patterns, and optimization opportunities that span multiple files.

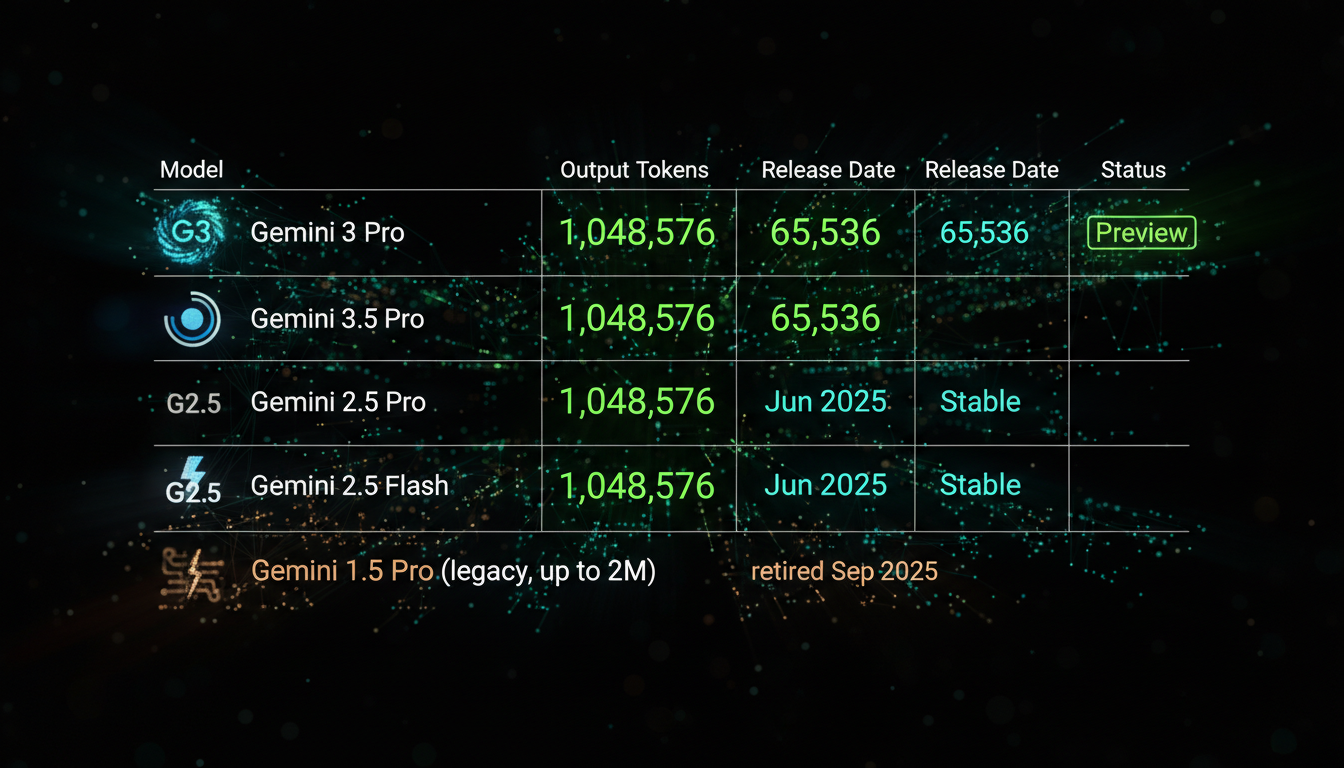

Key specs as of November 2025:

| Model | Input Tokens | Output Tokens | Release | Best For |
|---|---|---|---|---|
| Gemini 3 Pro (preview) | 1,048,576 | 65,536 | Nov 2025 | Advanced reasoning, agentic tasks |
| Gemini 2.5 Pro (stable) | 1,048,576 | 65,536 | Jun 2025 | Code analysis, complex repos |
| Gemini 2.5 Flash (stable) | 1,048,576 | 65,536 | Jun 2025 | Fast scans, high throughput |
| Gemini 1.5 Pro (legacy) | Up to 2M | Varies | Retired Sep 2025 | N/A |

Access via free Google AI Studio (limited quotas) or paid Vertex AI API. Start with AI Studio for testing—it’s point-and-click with folder uploads now supported.

Step 1: Prepare your codebase as a single text file

Dump your repo into one massive text file. Exclude binaries/images; focus on .js, .py, .yaml, etc. Use this Windows batch script (adapt for Linux/Mac):

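```bat
@echo off
rem Work from the repository root.
cd /d C:\repo
rem Make sure the output folder exists and remove any previous bundle.
if not exist \output mkdir \output
if exist \output\allfiles.txt del \output\allfiles.txt
echo //Repository base directory: "%CD%" >> \output\allfiles.txt
echo(>> \output\allfiles.txt
rem Append every matching file, preceded by a marker line with its path.
for /r %%a in (*.js *.py *.ts *.yaml *.json *.md) do (
    echo //$$FILE$$: "%%a" >> \output\allfiles.txt
    type "%%a" >> \output\allfiles.txt
    echo( >> \output\allfiles.txt
)
start \output\allfiles.txt
@echo Done!
```

This prefixes each file with a marker line such as `//$$FILE$$: "C:\repo\path\to\file.js"` so Gemini knows the structure. Note that `for /r` also descends into folders like node_modules, so run it from a clean checkout or narrow the patterns. For large repos (>500k tokens), split by frontend/backend. Check token count via Gemini API docs.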
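For Linux or macOS, the same bundling step can be sketched in Python. This is a minimal sketch rather than a drop-in port: the function name `build_bundle`, the `SKIP_DIRS` list, and the choice to write relative paths with forward slashes are assumptions made for illustration. It keeps the `//$$FILE$$:` marker used by the batch script.

```python
import os

# Assumed defaults; adjust both sets to your repository.
EXTENSIONS = {".js", ".py", ".ts", ".yaml", ".json", ".md"}
SKIP_DIRS = {".git", "node_modules", "__pycache__", "dist", "build"}


def build_bundle(repo_root, output_path, extensions=EXTENSIONS, skip_dirs=SKIP_DIRS):
    """Concatenate matching source files into one text file; return the file count."""
    out_abs = os.path.abspath(output_path)
    count = 0
    with open(output_path, "w", encoding="utf-8") as out:
        out.write(f'//Repository base directory: "{os.path.abspath(repo_root)}"\n\n')
        for dirpath, dirnames, filenames in os.walk(repo_root):
            # Prune skipped directories in place so os.walk does not descend into them.
            dirnames[:] = sorted(d for d in dirnames if d not in skip_dirs)
            for name in sorted(filenames):
                path = os.path.join(dirpath, name)
                if os.path.abspath(path) == out_abs:
                    continue  # never read the bundle into itself
                if os.path.splitext(name)[1].lower() not in extensions:
                    continue
                rel = os.path.relpath(path, repo_root).replace(os.sep, "/")
                out.write(f'//$$FILE$$: "{rel}"\n')
                # Undecodable bytes are replaced rather than aborting the run.
                with open(path, encoding="utf-8", errors="replace") as src:
                    out.write(src.read())
                out.write("\n\n")
                count += 1
    return count
```

Calling `build_bundle(".", "/tmp/allfiles.txt")` from the repository root would write the bundle outside the repo. Relative paths keep the marker lines short, which saves a few tokens per file.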

Token estimation tip

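As a rule of thumb, 1 token ≈ 4 characters, so a 1M-token window holds roughly 4 MB of text, or about 750k English words. Code can tokenize less efficiently than prose, so treat this as an estimate; many small and mid-sized repos fit. Use the Python SDK's `count_tokens` method for an exact count.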
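The rule of thumb above is easy to turn into a quick local check before any API call. The sketch below is only an estimate: the names `estimate_tokens` and `fits_in_context`, and the 10% headroom default, are assumptions for illustration and not part of any SDK.

```python
CHARS_PER_TOKEN = 4        # rule of thumb from the text, not an exact tokenizer
CONTEXT_LIMIT = 1_048_576  # input token limit listed in the specs table


def estimate_tokens(text, chars_per_token=CHARS_PER_TOKEN):
    """Rough token estimate: character count divided by chars_per_token, rounded up."""
    return -(-len(text) // chars_per_token)


def fits_in_context(text, limit=CONTEXT_LIMIT, headroom=0.1):
    """True if the estimate leaves `headroom` (a fraction of the limit) free
    for the system prompt and for estimation error."""
    return estimate_tokens(text) <= limit * (1 - headroom)
```

If the estimate lands near the limit, get an exact figure from the SDK's token counter before sending the full prompt.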

Step 2: Get access and craft your system prompt

Head to Google AI Studio, sign in, grab a free API key. Select gemini-2.5-pro (or gemini-3-pro-preview for cutting-edge).

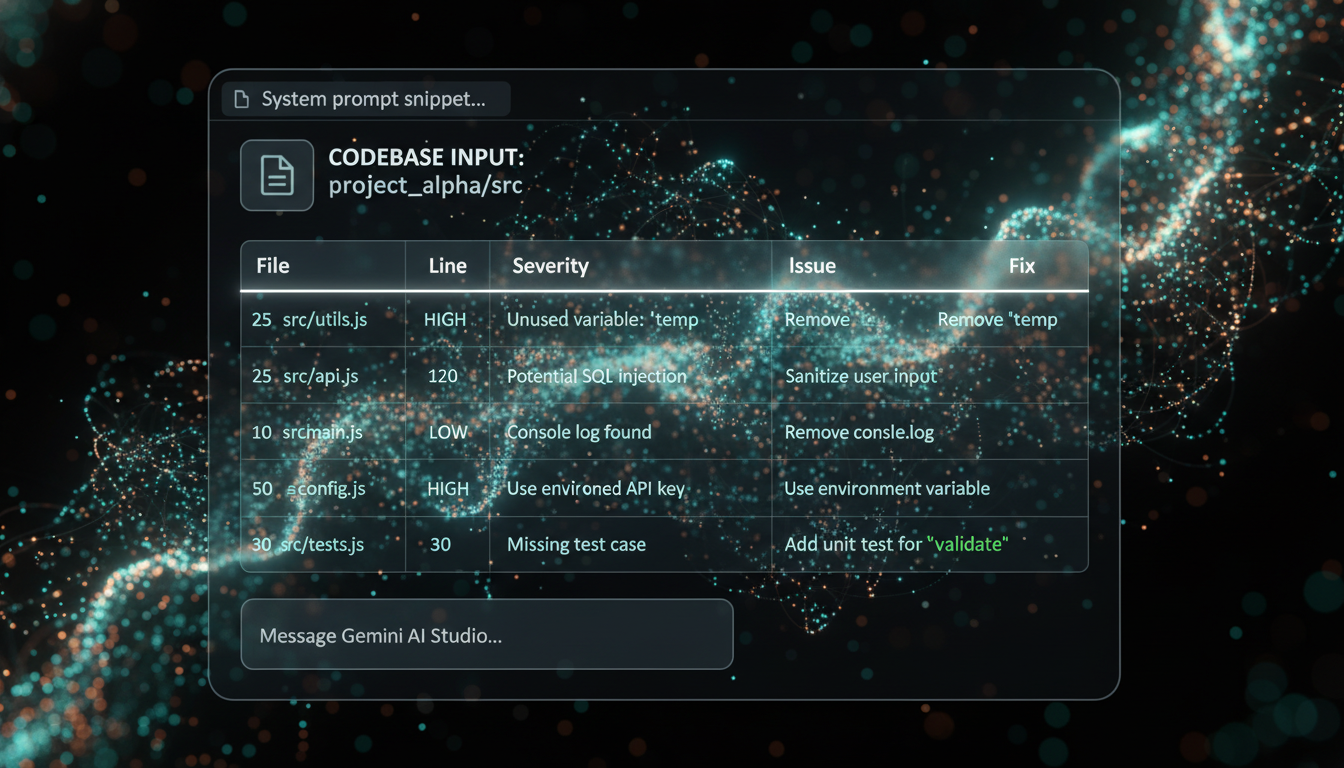

The system prompt is key. Define the role, the tasks, and the output format:

```text
You are a senior code reviewer with 20+ years in secure, scalable software.

Analyze the FULL codebase below. Identify:
- Security vulnerabilities (OWASP Top 10)
- Bugs/error-prone code
- Performance issues
- Best practice violations
- Missing tests/docs

Output in Markdown table batches (max 50 issues/batch):

| # | File | Lines | Severity (High/Med/Low) | Issue | Suggested Fix |

End with | END | when exhaustive. If more, say "Continue for next batch."
```

Paste your allfiles.txt into the user prompt. Set temperature to 0 and set the safety filters to "Block none".

Step 3: Run the analysis

- Paste prompt + codebase. Hit submit.

- Review first batch. Type "Continue" until | END |.

- Copy all tables.

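For automation, use this Colab-ready Python script (install google-generativeai). Note that google-generativeai is Google's legacy SDK; the newer google-genai package is the recommended replacement and uses a different API.

```python
import csv

import google.generativeai as genai

SYSTEM_PROMPT = "PASTE THE SYSTEM PROMPT FROM STEP 2 HERE"
MAX_BATCHES = 20  # safety cap so a missing END marker cannot loop forever

genai.configure(api_key='YOUR_API_KEY')
model = genai.GenerativeModel(
    'gemini-2.5-pro',
    system_instruction=SYSTEM_PROMPT,
    generation_config={'temperature': 0},
)
chat = model.start_chat()

with open('allfiles.txt', encoding='utf-8') as f:
    response = chat.send_message(f.read())

issues = []
for _ in range(MAX_BATCHES):
    for line in response.text.split('\n'):
        line = line.strip()
        # Keep table rows only; skip separator rows and the END marker.
        is_separator = set(line) <= set('|-: ')
        if line.startswith('|') and not is_separator and '| END |' not in line:
            issues.append(line)
    if '| END |' in response.text:
        break
    response = chat.send_message('Continue.')

with open('issues.csv', 'w', newline='', encoding='utf-8') as f:
    writer = csv.writer(f)
    for issue in issues:
        # Drop the empty cells produced by the leading and trailing pipes.
        writer.writerow([cell.strip() for cell in issue.strip('|').split('|')])

print('Analysis complete: issues.csv')
```

Each batch repeats the table header, so expect duplicate header rows in the CSV.

Costs: about $1.25 per 1M input tokens for 2.5 Pro on prompts up to 200k tokens, and about $2.50 per 1M beyond that (check current pricing). Cache context in Vertex AI for repeat runs.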
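The table rows Gemini returns can also be parsed into structured records without calling the API, which makes the parsing step easy to test on saved responses. This is a minimal sketch assuming the six-column table requested in the system prompt. The function name `parse_issue_rows` and the field names in `FIELDS` are illustrative labels, not part of any SDK.

```python
# Illustrative field names for the six columns requested in the system prompt.
FIELDS = ["number", "file", "lines", "severity", "issue", "fix"]


def parse_issue_rows(markdown_text):
    """Return (issues, finished) parsed from one batch of Markdown table output."""
    issues, finished = [], False
    for raw in markdown_text.splitlines():
        line = raw.strip()
        if not line.startswith("|"):
            continue  # not a table row
        cells = [c.strip() for c in line.strip("|").split("|")]
        if cells == ["END"]:
            finished = True  # the model signalled that the list is exhaustive
            continue
        # Skip separator rows such as |---|---| and the repeated header row.
        if all(set(c) <= set("-: ") for c in cells) or cells[0] == "#":
            continue
        # Rows with an unexpected cell count are dropped rather than guessed at.
        if len(cells) == len(FIELDS):
            issues.append(dict(zip(FIELDS, cells)))
    return issues, finished
```

One limitation of this approach: a cell that itself contains a `|` character (for example a shell pipeline in a suggested fix) splits into extra cells, so that row is dropped. Logging the dropped rows is a reasonable next step.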

Advanced tips and best practices

- Prioritize: First run for High severity only, tweak prompt.

- Custom tasks: Generate UML diagrams, API docs, migration plans.

- API integration: Vertex AI for production (higher quotas, caching).

- Validate: Cross-check with SonarQube; Gemini spots contextual issues tools miss.

- Limits: 1M tokens max; compress whitespace if needed.
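For the whitespace tip in the list above, one conservative approach is to strip trailing whitespace and collapse runs of blank lines while leaving leading indentation untouched, since indentation is significant in languages such as Python and YAML. The function name `compress_whitespace` is an assumption for illustration.

```python
def compress_whitespace(text):
    """Strip trailing whitespace and collapse runs of blank lines to one blank line.

    Leading indentation is preserved because it carries meaning in some languages.
    """
    out = []
    blank_run = False
    for line in text.splitlines():
        line = line.rstrip()
        if not line:
            if blank_run:
                continue  # already kept one blank line for this run
            blank_run = True
        else:
            blank_run = False
        out.append(line)
    return "\n".join(out) + "\n"
```

The savings are usually modest, and trailing spaces can be meaningful in Markdown (hard line breaks), so it may be worth excluding `.md` files from this pass.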

"Gemini 2.5 Pro analyzed my 200k-line monorepo in minutes, flagging 150+ issues Sonar missed." —Developer testimonial, 2025.

Adapted from real user reports

Handle results and next steps

Import CSV to Sheets. Sort by severity/file. Bulk-apply fixes with IDE multi-cursor or GitHub Copilot.
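The same severity-then-file sort can be done in Python before the data reaches a spreadsheet. This sketch assumes rows with six columns in the order of the prompt's table (severity at index 3, file at index 1); if your CSV has a leading empty column, shift both indices by one. The name `sort_issues` and the sample rows are hypothetical.

```python
SEVERITY_ORDER = {"high": 0, "med": 1, "medium": 1, "low": 2}
UNKNOWN_RANK = 3  # unrecognized severities sort last


def sort_issues(rows, severity_col=3, file_col=1):
    """Sort issue rows by severity (High first), then by file path."""
    def key(row):
        rank = SEVERITY_ORDER.get(row[severity_col].strip().lower(), UNKNOWN_RANK)
        return (rank, row[file_col])
    return sorted(rows, key=key)


# Hypothetical rows, shaped like the table requested in the system prompt.
rows = [
    ["1", "web/app.js", "10", "Low", "Unused variable", "Remove it"],
    ["2", "api/db.py", "42", "High", "SQL built by concatenation", "Use parameters"],
    ["3", "api/auth.py", "7", "Med", "Broad except clause", "Catch specific errors"],
]
for row in sort_issues(rows):
    print(row[3], row[1])
```

Rows loaded with `csv.reader` from issues.csv can be passed in directly once header rows are filtered out.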

Key takeaways: Prep codebase (5 mins), prompt engineering (10 mins), analyze (varies by size). Scale to CI/CD via API. Experiment in AI Studio today—unlock codebase superpowers.