NVIDIA has detailed its Vera Rubin platform, marking a significant leap in AI hardware. Showcased at CES 2026, this next-generation AI supercomputing platform promises to raise performance benchmarks and accelerate AI development across industries. With production already underway, the Rubin platform introduces architectural changes that could reshape the AI landscape in 2026 and beyond.

Platform Overview: Five Key Innovations

The Vera Rubin platform represents NVIDIA’s most ambitious AI hardware initiative yet, featuring:

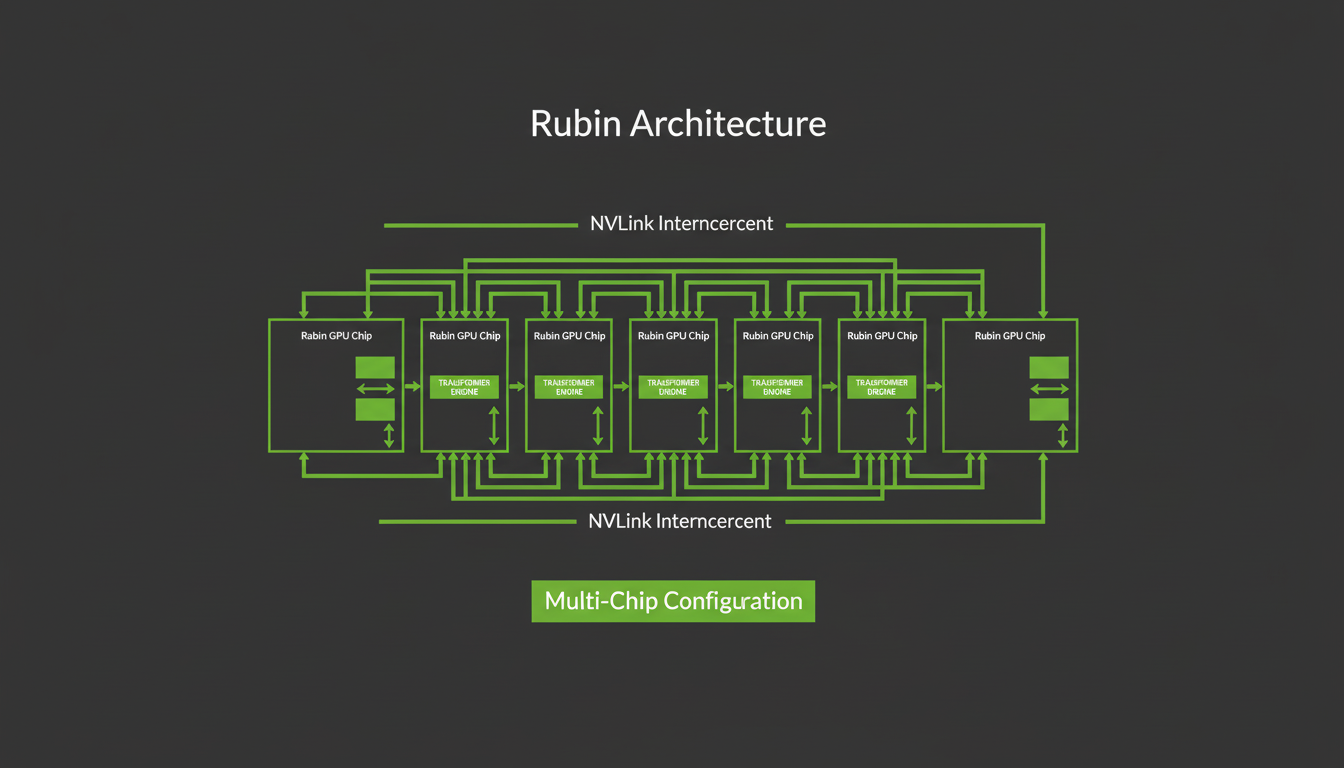

- Next-gen NVLink interconnect technology for ultra-fast chip-to-chip communication

- Advanced Transformer Engine optimized for large language models

- Rack-scale multi-chip configuration with 144 GPU dies in the flagship NVL144 system

- Energy-efficient architecture reducing training costs by up to 40%

- Seamless integration with NVIDIA’s AI software stack

Production Timeline and Industry Impact

During his CES 2026 keynote, NVIDIA CEO Jensen Huang confirmed that Vera Rubin chips are now in full production. This rapid development cycle follows the company's September 2025 announcement of Rubin CPX, a variant designed for massive-context inference. Systems built on the platform's six-chip design, including the high-end NVL144 and NVL72 configurations, will begin shipping in Q1 2026, with volume production ramping through 2027.

“The transition to Vera Rubin represents the most significant performance jump we’ve ever delivered between generations,” said Huang. Early benchmarks suggest up to 5x faster training of complex AI models compared with the previous Blackwell architecture.

Strategic Implications for AI Development

The platform’s introduction comes at a critical time for AI development, addressing three key industry challenges:

- Cost Efficiency: Energy consumption reductions could save data centers millions in operational costs

- Scalability: Multi-chip configurations enable training of trillion-parameter models without compromising performance

- Development Speed: Tight integration with NVIDIA’s software stack reduces deployment time for enterprise AI solutions

Competitors are already responding to NVIDIA’s accelerated innovation cycle, with AMD advancing its Instinct accelerator line and Intel continuing development of its Gaudi AI accelerators. However, NVIDIA’s early production advantage gives it a significant head start in the race for AI dominance.

Looking Ahead

With Vera Rubin now in production, the AI industry faces a pivotal transition period. Enterprises must evaluate their hardware roadmaps while researchers prepare to leverage the platform’s capabilities for next-generation model training. As data centers begin integrating Rubin systems in Q2 2026, the platform’s true impact will become clear in real-world applications ranging from scientific discovery to enterprise automation.