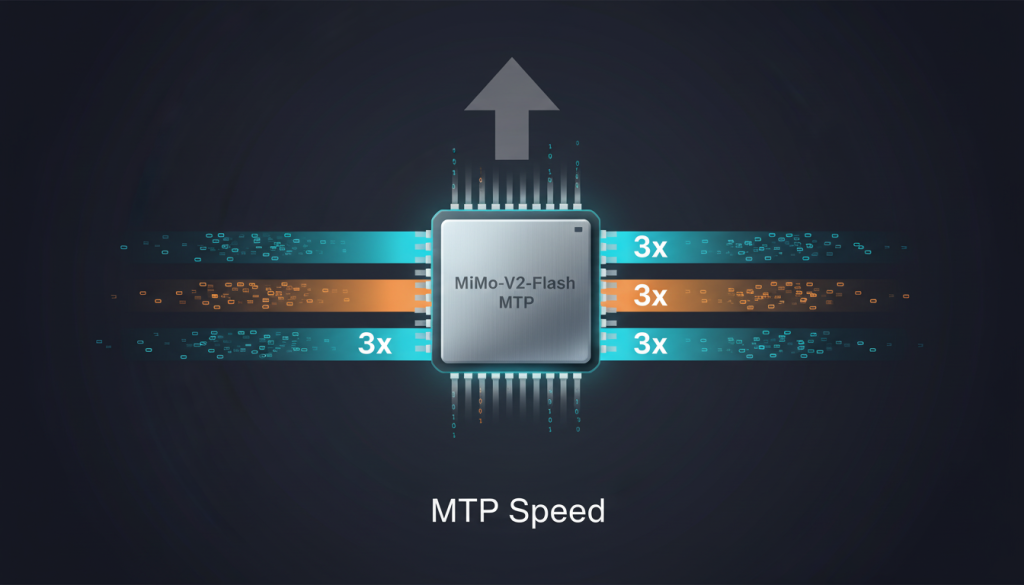

Deploying large Mixture-of-Experts (MoE) models often leads to high inference costs and latency, creating bottlenecks in production environments. MiMo-V2-Flash’s open-source Multi-Token Prediction (MTP) module addresses this challenge with a novel dense FFN architecture that triples inference speed while maintaining accuracy. This guide provides a technical walkthrough for implementing MTP to optimize performance and reduce operational expenses.

Understanding MiMo-V2-Flash’s MTP architecture

The MTP module introduces a parallelized token prediction mechanism that processes multiple output tokens simultaneously. Unlike traditional autoregressive models that generate tokens sequentially, MTP leverages:

- Dense Feed-Forward Networks (FFNs) optimized for token-level parallelism

- Batched attention computation across multiple token positions

- Memory-efficient KV-cache management for extended context windows

This design achieves roughly 3x faster inference by reducing the sequential dependency between generated tokens, while specialized training objectives preserve the model’s predictive accuracy.
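To make the source of the speedup concrete, the sketch below counts forward passes when each step emits several tokens. It is a back-of-the-envelope model, not the MTP implementation: the function names and the `step_overhead` factor (the assumed relative cost of a multi-token step versus a single-token step) are illustrative assumptions.

```python
import math


def decoding_steps(num_tokens: int, tokens_per_step: int = 1) -> int:
    """Return the number of forward passes needed to emit num_tokens."""
    if num_tokens < 1 or tokens_per_step < 1:
        raise ValueError("num_tokens and tokens_per_step must be >= 1")
    return math.ceil(num_tokens / tokens_per_step)


def ideal_speedup(num_tokens: int, tokens_per_step: int,
                  step_overhead: float = 1.0) -> float:
    """Estimate speedup over one-token-per-step decoding.

    step_overhead is a hypothetical multiplier: 1.25 means a multi-token
    step is assumed to cost 25% more than a single-token step.
    """
    baseline_cost = decoding_steps(num_tokens, 1)
    mtp_cost = decoding_steps(num_tokens, tokens_per_step) * step_overhead
    return baseline_cost / mtp_cost


if __name__ == "__main__":
    print(decoding_steps(256, 4))       # 64 forward passes instead of 256
    print(ideal_speedup(256, 4, 1.25))  # 3.2 under the assumed overhead
```

Under this model, four tokens per step yields less than a 4x gain as soon as each multi-token step costs more than a single-token step, which is consistent with a reported figure near 3x.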

Implementation workflow for MTP integration

Follow these steps to implement MTP in your LLM pipeline:

- Verify compatibility with your base model architecture

- Install the MTP module via PyPI or source build

- Modify your model configuration to enable MTP layers

- Adjust batch sizes for optimal GPU utilization

- Implement dynamic token scheduling in the generation loop
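The last step, token scheduling in the generation loop, can be sketched as follows. This is a minimal illustration under stated assumptions: `predict_tokens` is a hypothetical callable standing in for a model that returns up to `k` tokens per call, and `counting_predictor` is a toy stub used only to make the example runnable.

```python
from typing import Callable, List, Optional


def generate(prompt: List[int],
             predict_tokens: Callable[[List[int], int], List[int]],
             max_new_tokens: int,
             tokens_per_step: int = 4,
             eos_token: Optional[int] = None) -> List[int]:
    """Generate tokens in chunks of up to tokens_per_step per model call."""
    output = list(prompt)
    produced = 0
    while produced < max_new_tokens:
        # Never request more tokens than the remaining budget allows.
        k = min(tokens_per_step, max_new_tokens - produced)
        new_tokens = predict_tokens(output, k)
        if not new_tokens:
            break  # the predictor has nothing more to emit
        for tok in new_tokens[:k]:
            output.append(tok)
            produced += 1
            if eos_token is not None and tok == eos_token:
                return output  # stop as soon as end-of-sequence appears
    return output


def counting_predictor(context: List[int], k: int) -> List[int]:
    """Toy stand-in for a model: continues the sequence by counting up."""
    last = context[-1]
    return [last + i for i in range(1, k + 1)]


if __name__ == "__main__":
    print(generate([0], counting_predictor, max_new_tokens=10))
```

Clamping `k` to the remaining budget keeps the final step from overshooting `max_new_tokens`, and checking for the end-of-sequence token inside the chunk avoids emitting tokens after it.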

```python
# Example MTP configuration (Hugging Face-style from_pretrained API)
from mimov2_flash import MTPConfig, MTPModel

config = MTPConfig.from_pretrained("mimov2-flash/config.json")
model = MTPModel.from_pretrained("mimov2-flash/pytorch_model.bin", config=config)

# Enable multi-token generation
model.enable_mtp(batch_size=32, tokens_per_step=4)
```

Key parameters to tune include `tokens_per_step` (recommended: 4-8 for A100 GPUs) and `batch_size`, which should be adjusted to stay within GPU memory limits. Monitor token generation quality using the built-in perplexity validator.
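For readers unfamiliar with the metric, the sketch below shows how perplexity is computed from per-token log-probabilities and how a quality regression check might look. It does not reproduce the built-in validator, whose interface is not described here; `perplexity`, `quality_regression`, and the 2% tolerance are illustrative assumptions.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp of the mean negative log-likelihood (natural log)."""
    if not token_logprobs:
        raise ValueError("token_logprobs must not be empty")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)


def quality_regression(baseline_ppl: float, mtp_ppl: float,
                       tolerance: float = 0.02) -> bool:
    """Return True if MTP perplexity is worse than baseline by more than
    the given relative tolerance (an assumed, tunable threshold)."""
    return (mtp_ppl - baseline_ppl) / baseline_ppl > tolerance


if __name__ == "__main__":
    # Four tokens, each predicted with probability 0.5.
    print(perplexity([math.log(0.5)] * 4))
    print(quality_regression(10.0, 10.5))
```

Comparing perplexity on the same evaluation text with and without MTP enabled gives a simple signal for whether a larger `tokens_per_step` is degrading output quality.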

Performance optimization strategies

To maximize the benefits of MTP, implement these optimization techniques:

- Memory-aware scheduling: Dynamically adjust tokens_per_step based on available VRAM

- Early stopping: Terminate generation when the cumulative probability exceeds a configured threshold

- Quantization: Apply 8-bit integer quantization for additional speed gains

- Caching: Implement persistent KV-cache for context reuse in multi-turn conversations
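Memory-aware scheduling from the list above can be sketched as a small helper that picks `tokens_per_step` from the memory currently available. The function name, the `bytes_per_token_slot` estimate, and the default bounds are assumptions for illustration; actual per-token memory cost depends on the model and should be measured.

```python
def pick_tokens_per_step(free_vram_bytes: int,
                         bytes_per_token_slot: int,
                         batch_size: int,
                         min_tokens: int = 1,
                         max_tokens: int = 8) -> int:
    """Choose tokens_per_step so the extra per-step memory fits in free VRAM.

    bytes_per_token_slot is a hypothetical estimate of the additional memory
    one predicted token position needs for a single sequence.
    """
    if bytes_per_token_slot <= 0 or batch_size <= 0:
        raise ValueError("bytes_per_token_slot and batch_size must be positive")
    affordable = free_vram_bytes // (bytes_per_token_slot * batch_size)
    # Clamp to the configured range. If even min_tokens does not fit,
    # the caller should reduce batch_size instead.
    return max(min_tokens, min(max_tokens, affordable))


if __name__ == "__main__":
    mib = 1024 ** 2
    # 8 MiB free, 64 KiB per token slot, 32 sequences per batch.
    print(pick_tokens_per_step(8 * mib, 64 * 1024, batch_size=32))  # 4
```

With PyTorch, the free-memory figure can be read from `torch.cuda.mem_get_info()`, which returns free and total device memory in bytes.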

Benchmarks on NVIDIA A100 GPUs show that combining MTP with quantization delivers 3.2x speed improvements while maintaining 98.7% of baseline accuracy on the GLUE benchmark suite.
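As a reminder of what 8-bit integer quantization does to weights, the sketch below implements symmetric per-tensor absmax quantization in plain Python. It illustrates the general technique only; the scheme used in the benchmarks above is not specified, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float values from int8 codes and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.25, 1.0]
    codes, scale = quantize_int8(weights)
    print(codes)
    print(dequantize_int8(codes, scale))
```

The round-trip error of each value is bounded by half the scale, which is why accuracy typically degrades only slightly when weight ranges are well behaved.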

Production deployment considerations

The following table compares baseline and MTP-optimized serving metrics:

| Metric | Baseline | MTP Optimized |
|---|---|---|
| Inference latency | 120 ms/token | 38 ms/token |
| Cost per million tokens | $0.15 | $0.05 |
| Throughput | 8.3 tokens/sec | 26.3 tokens/sec |
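The figures in the table are mutually consistent, which the short calculation below verifies. Throughput is assumed to be for a single sequential stream, so it is simply the inverse of per-token latency; the helper names are illustrative.

```python
def throughput_tokens_per_sec(latency_ms_per_token: float) -> float:
    """Single-stream throughput is the inverse of per-token latency."""
    return 1000.0 / latency_ms_per_token


def relative_reduction(baseline: float, optimized: float) -> float:
    """Fractional reduction from baseline to optimized."""
    return (baseline - optimized) / baseline


if __name__ == "__main__":
    print(round(throughput_tokens_per_sec(120), 1))        # 8.3
    print(round(throughput_tokens_per_sec(38), 1))         # 26.3
    print(round(120 / 38, 2))                              # 3.16 (latency speedup)
    print(round(relative_reduction(0.15, 0.05) * 100))     # 67 (% cost reduction)
```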

Implement canary deployments to gradually shift traffic to MTP-enabled endpoints. Monitor token quality metrics alongside performance indicators using Prometheus and Grafana dashboards.
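A canary split can be implemented with deterministic hashing so that a given request or user consistently lands on the same endpoint. The sketch below is one common approach, not a prescribed one; `routes_to_canary` and the use of a request ID as the routing key are assumptions.

```python
import hashlib


def routes_to_canary(request_id: str, canary_percent: float) -> bool:
    """Deterministically route a share of traffic to the MTP-enabled endpoint.

    canary_percent is in the range 0-100. The same request_id always
    receives the same decision, which keeps sessions on one endpoint.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < canary_percent * 100


if __name__ == "__main__":
    sample = [f"req-{i}" for i in range(10_000)]
    share = sum(routes_to_canary(r, 10) for r in sample) / len(sample)
    print(f"canary share: {share:.1%}")
```

Raising `canary_percent` in stages while watching latency and token quality dashboards allows a rollback before most traffic is affected.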

Conclusion and next steps

MiMo-V2-Flash’s MTP module offers a substantial improvement in LLM inference efficiency. Based on the benchmarks above, teams that implement the strategies in this guide can expect:

- 3x faster response times for real-time applications

- 67% reduction in inference costs

- Improved throughput for high-concurrency workloads

Begin by benchmarking MTP on your specific workloads using the official benchmarking suite. For large-scale deployments, consider combining MTP with model parallelism techniques and continuous training pipelines to maintain performance gains as model complexity evolves.
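Before adopting any dedicated tooling, a first comparison can be made with a small timing harness like the one below. It is a stand-in sketch, not the official benchmarking suite; `benchmark` and the `generate_fn` callable (which takes a prompt and returns a list of tokens) are hypothetical.

```python
import statistics
import time
from typing import Callable, Dict, List, Sequence


def benchmark(generate_fn: Callable[[str], Sequence],
              prompts: List[str],
              warmup: int = 1) -> Dict[str, float]:
    """Measure throughput and median per-request latency for generate_fn."""
    if not prompts:
        raise ValueError("prompts must not be empty")
    for prompt in prompts[:warmup]:
        generate_fn(prompt)  # warm caches before timing

    latencies = []
    total_tokens = 0
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        tokens = generate_fn(prompt)
        latencies.append(time.perf_counter() - t0)
        total_tokens += len(tokens)
    elapsed = time.perf_counter() - start

    return {
        "tokens": total_tokens,
        "tokens_per_sec": total_tokens / elapsed if elapsed > 0 else float("inf"),
        "median_latency_s": statistics.median(latencies),
    }


if __name__ == "__main__":
    # Toy generator: splits the prompt into whitespace-separated "tokens".
    print(benchmark(lambda p: p.split(), ["a b c", "d e"]))
```

Running the same prompts through a baseline endpoint and an MTP-enabled endpoint gives a like-for-like comparison on your own workload.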