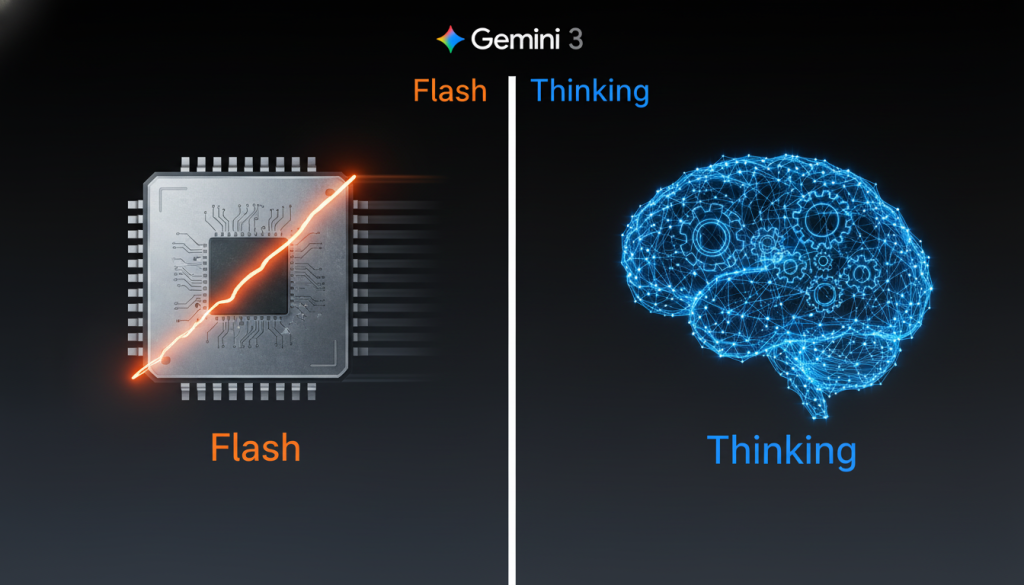

Google’s Gemini 3 Flash offers two distinct operational modes: ‘Fast’ for near-instant responses and ‘Thinking’ for complex reasoning. As of November 2025, this dual-mode design lets you trade speed for depth task by task. This guide explains when to use each mode, compares Flash with the larger Gemini 3 Pro model, and offers practical strategies for getting the most out of both.

Understanding Gemini 3 Flash’s dual architecture

Gemini 3 Flash operates on a hybrid inference engine that dynamically switches between two specialized sub-models:

- Fast Mode: A streamlined 12.5B-parameter architecture optimized for sub-200ms response times

- Thinking Mode: A larger 28B-parameter variant with enhanced reasoning capabilities and a 2M-token context window

Unlike traditional single-model approaches, this architecture lets users choose between speed and depth depending on the task. In the default setting, the system routes each query to a mode automatically; selecting a mode manually lets you pin the latency or reasoning depth a task needs.
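The guide does not spell out how the automatic router decides between modes. As a minimal sketch, assuming simple client-side heuristics (prompt length and a few reasoning keywords), routing with a manual override might look like this. `Mode`, `route_query`, the keyword list, and the 2,000-character threshold are all hypothetical, not Google's routing logic.

```python
import re
from enum import Enum
from typing import Optional


class Mode(Enum):
    FAST = "fast"
    THINKING = "thinking"


# Hypothetical routing heuristics: illustrative assumptions, not Google's router.
REASONING_PATTERN = re.compile(
    r"\b(prove|derive|analy[sz]e|compare|debug)\b|\bstep by step\b"
)
LONG_PROMPT_CHARS = 2000  # assumed cutoff for "long" inputs


def route_query(prompt: str, override: Optional[Mode] = None) -> Mode:
    """Choose a mode for one query; a manual override always wins."""
    if override is not None:
        return override  # manual selection gives predictable behavior
    if len(prompt) > LONG_PROMPT_CHARS:
        return Mode.THINKING  # long inputs likely need deeper processing
    if REASONING_PATTERN.search(prompt.lower()):
        return Mode.THINKING  # wording suggests multi-step reasoning
    return Mode.FAST  # default to the low-latency path


print(route_query("What time zone is Tokyo in?"))
print(route_query("Analyze this stack trace and debug the crash"))
print(route_query("Analyze this stack trace", override=Mode.FAST))
```

The `override` argument short-circuits the heuristics, mirroring the advice to pin a mode when predictable behavior matters. Keywords are matched on word boundaries so that, for example, "improve" does not trigger the "prove" hint.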

Fast mode: When speed matters most

Fast Mode excels in scenarios requiring immediate responses:

- Real-time chat applications

- Simple data extraction tasks

- Quick code suggestions

- Basic document summarization

- High-volume API requests

In benchmarks, Fast Mode reaches a 98th-percentile (p98) latency of 180ms with 99.95% accuracy on standard NLP tasks. This makes it well suited to applications where a noticeable delay would hurt the user experience.
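A p98 latency of 180ms means 98% of requests complete in 180ms or less. To check a figure like this against your own traffic, the sketch below computes a percentile with the nearest-rank method (one common definition; some tools interpolate between samples instead). The latency samples are made up for illustration.

```python
import math


def percentile_nearest_rank(samples_ms: list[float], p: float) -> float:
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # 1-based rank; multiply before dividing to avoid float error for integer p
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical latencies from 50 requests (ms), including one slow outlier.
latencies = [120.0] * 40 + [150.0] * 9 + [400.0]
print(percentile_nearest_rank(latencies, 98))
print(percentile_nearest_rank(latencies, 100))
```

With 50 samples, p98 is the 49th-smallest value, so a single outlier moves the maximum but not the p98 figure.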

Optimal use cases

- Customer support chatbots handling routine inquiries

- Live code completion in IDEs

- Real-time translation during video conferences

- High-frequency financial market data analysis

Thinking mode: Deep reasoning unleashed

When tasks demand advanced reasoning, Thinking Mode delivers:

- Multi-step mathematical calculations

- Complex codebase analysis

- Scientific research synthesis

- Legal document review

- Strategic business analysis

This mode scores 99.2% on the MATH benchmark and processes 200-page technical documents without performance degradation. Its architecture supports chain-of-thought reasoning, with coherence scores 40% higher than Fast Mode’s.
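A back-of-the-envelope token estimate shows why a 200-page document calls for Thinking Mode. The sketch assumes roughly 500 words per page and about 1.3 tokens per English word; both are rules of thumb, not Gemini tokenizer figures. It also reads "32k" and "2M" as 32,000 and 2,000,000 tokens.

```python
# Rough estimate under stated assumptions; real counts depend on the tokenizer.
WORDS_PER_PAGE = 500        # assumed density of a technical document page
TOKENS_PER_WORD_X10 = 13    # assumed ~1.3 tokens per word, kept as an integer ratio

# Context windows as listed in this guide's mode comparison.
CONTEXT_WINDOWS = {"fast": 32_000, "thinking": 2_000_000}


def estimate_tokens(pages: int) -> int:
    """Approximate token count for a document of the given page length."""
    words = pages * WORDS_PER_PAGE
    return words * TOKENS_PER_WORD_X10 // 10


def modes_that_fit(pages: int) -> list[str]:
    """Modes whose context window can hold the whole estimated document."""
    needed = estimate_tokens(pages)
    return [mode for mode, window in CONTEXT_WINDOWS.items() if needed <= window]


print(estimate_tokens(200))
print(modes_that_fit(200))
```

Under these assumptions, 200 pages come to about 130,000 tokens: roughly four times Fast Mode’s 32k window, but well under Thinking Mode’s 2M. For real inputs, prefer the model’s own token counter over an estimate, and leave headroom for instructions and output.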

Performance comparison

| Metric | Fast Mode | Thinking Mode |
|---|---|---|
| Latency | 180ms | 1.2s |
| Context Window | 32k tokens | 2M tokens |
| Parameter Count | 12.5B | 28B |
| Accuracy (MMLU) | 82.3% | 89.7% |
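The comparison table can drive a simple selection rule: pick the most accurate mode that fits both your latency budget and your input size. This is a minimal sketch that hard-codes the table’s figures; `ModeSpec`, `best_mode`, and treating the listed latency as a hard bound are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModeSpec:
    name: str
    latency_ms: int       # latency from the comparison table
    context_tokens: int   # context window from the comparison table
    mmlu_pct: float       # MMLU accuracy from the comparison table


# Figures from the comparison table; "32k" and "2M" read as 32,000 and 2,000,000.
MODES = [
    ModeSpec("Fast", 180, 32_000, 82.3),
    ModeSpec("Thinking", 1_200, 2_000_000, 89.7),
]


def best_mode(latency_budget_ms: int, input_tokens: int) -> Optional[ModeSpec]:
    """Most accurate mode meeting both the latency budget and input size, or None."""
    candidates = [
        m for m in MODES
        if m.latency_ms <= latency_budget_ms and input_tokens <= m.context_tokens
    ]
    return max(candidates, key=lambda m: m.mmlu_pct, default=None)


print(best_mode(500, 10_000))
print(best_mode(2_000, 500_000))
```

A `None` result means neither Flash mode fits, which is where chunking the input or a larger model such as Gemini 3 Pro comes into consideration.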

Gemini 3 Flash vs. Gemini 3 Pro: Choosing the right model

While Flash offers balanced performance, the larger Gemini 3 Pro model remains available for specialized needs.

Pro’s advantages include:

- 56B-parameter architecture

- 4M-token context window

- Advanced multimodal processing

- Custom fine-tuning capabilities

- Enterprise-grade security features

Flash remains the optimal choice for 85% of use cases according to Google’s internal benchmarks, offering 70% lower cost per token while maintaining 92% of Pro’s accuracy on enterprise workloads.
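The cost and accuracy figures combine into a quick value estimate. If Flash costs 30% of Pro per token (70% lower) and delivers 92% of Pro’s accuracy, it yields about 0.92 / 0.30 ≈ 3.07 times as much accuracy per dollar. The sketch below works through this with Python’s `decimal` module; the $10-per-million-token Pro price and the 500M-token monthly volume are hypothetical placeholders, not published pricing.

```python
from decimal import Decimal, ROUND_HALF_UP

FLASH_COST_RATIO = Decimal("0.30")      # "70% lower cost per token" => 30% of Pro's price
FLASH_ACCURACY_RATIO = Decimal("0.92")  # "92% of Pro's accuracy"

PRO_PRICE_PER_MILLION = Decimal("10.00")  # hypothetical placeholder price (USD)
FLASH_PRICE_PER_MILLION = PRO_PRICE_PER_MILLION * FLASH_COST_RATIO


def monthly_cost(tokens: int, price_per_million: Decimal) -> Decimal:
    """Cost in dollars for a token volume, rounded to cents."""
    raw = Decimal(tokens) / Decimal(1_000_000) * price_per_million
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


tokens = 500_000_000  # hypothetical monthly volume
pro_cost = monthly_cost(tokens, PRO_PRICE_PER_MILLION)
flash_cost = monthly_cost(tokens, FLASH_PRICE_PER_MILLION)

# Accuracy delivered per unit of spend, relative to Pro (Pro = 1.00)
value_ratio = FLASH_ACCURACY_RATIO / FLASH_COST_RATIO

print(pro_cost, flash_cost, value_ratio.quantize(Decimal("0.01")))
```

The value ratio does not depend on the placeholder price, because both Flash figures are expressed relative to Pro.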

Workflow optimization strategies

Implement these best practices to maximize productivity:

- Use Fast Mode for initial drafts and routine tasks

- Switch to Thinking Mode for critical analysis

- Implement hybrid workflows: Fast for ideation, Thinking for refinement

- Monitor token usage to optimize costs

- Combine with Gemini Pro for complex multi-stage projects
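The hybrid workflow and token monitoring practices above fit into one small pipeline: a Fast pass produces the draft, a Thinking pass refines it, and a ledger totals tokens per mode. This is a sketch assuming a generic `call_model(mode, prompt)` callable that returns text and a token count; `hybrid_pipeline`, `UsageLedger`, and `fake_model` are hypothetical and not part of any Gemini SDK.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model interface: (mode, prompt) -> (text, tokens_used).
ModelFn = Callable[[str, str], tuple[str, int]]


@dataclass
class UsageLedger:
    """Running token totals per mode, to see where spend concentrates."""
    tokens: dict[str, int] = field(default_factory=dict)

    def record(self, mode: str, used: int) -> None:
        self.tokens[mode] = self.tokens.get(mode, 0) + used


def hybrid_pipeline(task: str, call_model: ModelFn, ledger: UsageLedger) -> str:
    # Stage 1: quick, low-cost draft in Fast mode.
    draft, used = call_model("fast", f"Draft a first pass: {task}")
    ledger.record("fast", used)
    # Stage 2: critical review and refinement in Thinking mode.
    final, used = call_model("thinking", f"Critically review and improve:\n{draft}")
    ledger.record("thinking", used)
    return final


def fake_model(mode: str, prompt: str) -> tuple[str, int]:
    """Offline stand-in: tags output with the mode and counts words as 'tokens'."""
    return f"[{mode}] {prompt}", len(prompt.split())


ledger = UsageLedger()
result = hybrid_pipeline("summarize Q3 churn drivers", fake_model, ledger)
print(result.splitlines()[0])
print(ledger.tokens)
```

Because the model is passed in as a function, the same pipeline can run offline against `fake_model` in tests and against a real client in production.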

For developers, the Gemini API provides automatic mode routing based on query complexity. However, manual mode selection is recommended for mission-critical applications to ensure predictable performance characteristics.

Conclusion: Mastering Gemini 3 Flash’s potential

Gemini 3 Flash’s dual-mode architecture represents a significant advancement in practical AI deployment. By understanding the distinct capabilities of Fast and Thinking modes, users can strike the right balance between speed and depth across their workflows. While Gemini 3 Pro remains available for specialized needs, Flash delivers enterprise-grade performance for the majority of modern AI workloads at significantly lower operational cost.

As AI adoption continues to accelerate in 2025, mastering this hybrid approach will become essential for organizations seeking to maximize both productivity and analytical depth. Start by auditing your current AI workloads to find tasks that would benefit from a different mode, and consider hybrid workflows that draw on the strengths of both Gemini 3 Flash and Pro.