Are you tired of manually creating weekly reports and exposing sensitive company data to cloud services? As of November 2025, organizations handling confidential business intelligence, financial data, or strategic planning face a critical challenge: how to automate reporting while maintaining complete data security. Traditional cloud-based AI solutions often require sending sensitive information to external servers, creating compliance risks and privacy concerns.

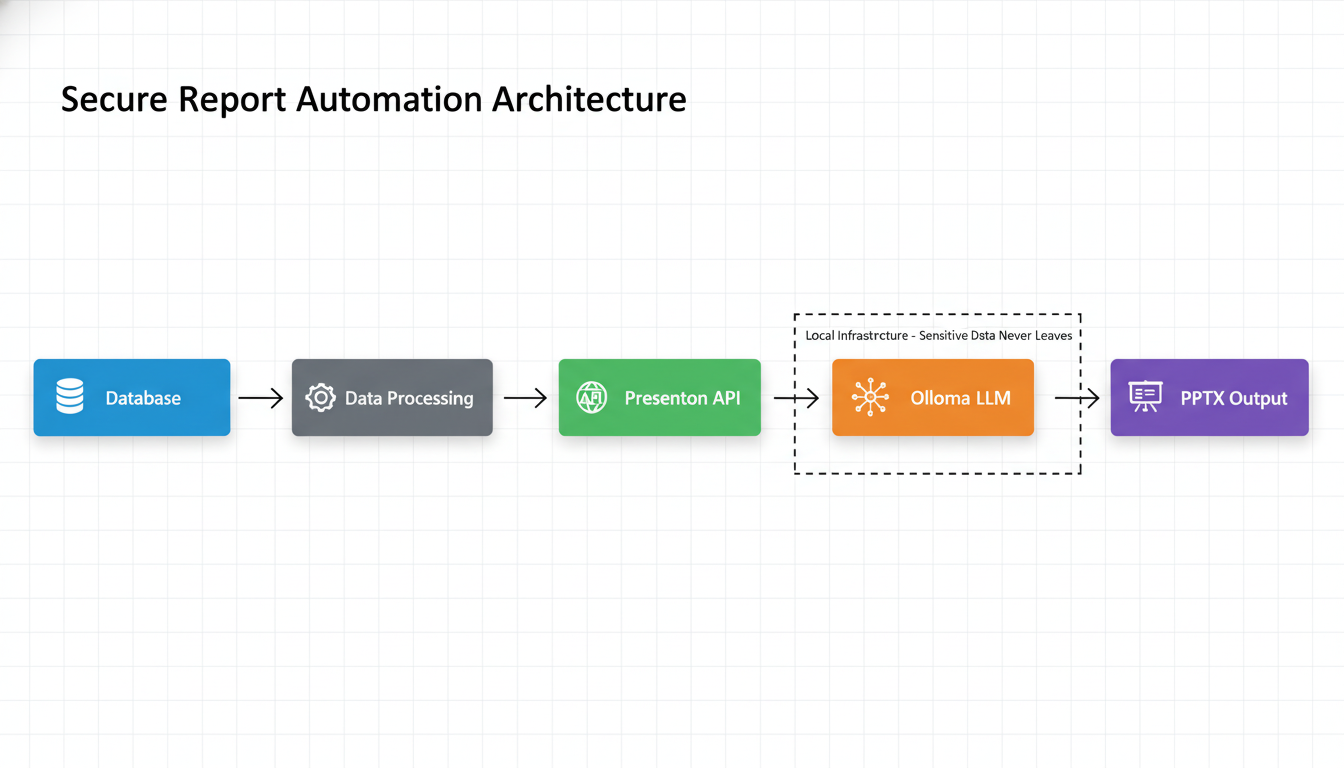

This comprehensive guide demonstrates how to use the Presenton API as a microservice to automate secure report generation directly from your database. We’ll walk through setting up Presenton with Ollama (version 0.13.4 as of November 2025) for local LLM processing, ensuring your company’s data stays protected within your infrastructure while generating professional PPTX files automatically.

Why local AI presentation generation matters

In 2025, the demand for automated reporting solutions has never been higher. According to recent industry analysis, organizations spend an average of 15-20 hours per week creating manual reports, with sensitive data exposure remaining a top concern. The Presenton API, combined with Ollama’s local LLM capabilities, addresses these challenges head-on by keeping your data processing entirely within your controlled environment.

Presenton (latest version 0.5.7 as of November 2025) is an open-source AI presentation generator that runs locally on your infrastructure. Paired with Ollama (a Docker-like platform for running AI models locally), it forms an automation pipeline in which no report data is sent to an external LLM provider, while still producing professional-quality presentations.

Setting up your secure automation infrastructure

The foundation of your automated reporting system begins with proper infrastructure setup. You’ll need Docker installed on your server or local machine, along with basic familiarity with command-line operations.

Prerequisites and system requirements

- Docker installed and running

- Minimum 8GB RAM (16GB recommended for larger models)

- Sufficient disk space for model downloads (3GB-70GB depending on model)

- Network access for initial setup (optional for offline operation)

- Database access credentials for your reporting data

Installing Presenton with Ollama integration

Start by deploying Presenton with Ollama support. The following command sets up a complete local presentation generation environment:

```bash
docker run -it --name presenton -p 5000:80 \
  -e LLM="ollama" \
  -e OLLAMA_MODEL="llama3.2:3b" \
  -e PEXELS_API_KEY="your_pexels_api_key" \
  -e CAN_CHANGE_KEYS="false" \
  -v "./user_data:/app/user_data" \
  ghcr.io/presenton/presenton:latest
```

This configuration automatically downloads and manages the specified Ollama model (llama3.2:3b in this example). The first run takes a few minutes for model download, but subsequent presentations generate quickly.
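
Because the first start can take several minutes, a script that calls the API right after `docker run` may fail simply because the container is not listening yet. The following is a minimal sketch of a readiness poll, assuming the container is published on `http://localhost:5000` as in the command above. The function names `http_probe` and `wait_until_ready` are illustrative and not part of Presenton, and a successful response only shows that the web server is up, not that the model download has finished.

```python
import time
import urllib.error
import urllib.request


def http_probe(url, timeout=5.0):
    """Return True if the URL answers with any HTTP response at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even if with an error status code.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


def wait_until_ready(url, attempts=60, delay=5.0, probe=http_probe, sleep=time.sleep):
    """Poll `url` until the probe succeeds; return False if every attempt fails."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)  # wait before the next attempt
    return False
```

Calling `wait_until_ready("http://localhost:5000")` before the first generation request gives the container up to five minutes to start with these default values.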

Choosing the right Ollama model for your needs

Ollama offers various models with different performance characteristics. As of November 2025, here are the recommended models for automated reporting:

| Model | Size | Use Case | Performance |
|---|---|---|---|
| llama3.2:3b | 2GB | Testing/Basic Reports | Fast, runs on most hardware |
| deepseek-r1:32b | 20GB | Production Reports | Good quality with charts |
| llama3.3:70b | 43GB | Enterprise Reporting | Highest quality, chart support |
| gemma3:1b | 815MB | Minimal Resources | Basic functionality only |

For most business reporting scenarios, we recommend starting with llama3.2:3b for testing, then upgrading to deepseek-r1:32b or llama3.3:70b for production use. The larger models offer better chart generation and more sophisticated content structuring.
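
The sizes in the table can be turned into a rough selection rule. The sketch below picks the largest listed model that fits into a given amount of memory. The helper name `pick_model` and the 1.5x headroom factor are assumptions made for illustration, not figures from Presenton or Ollama, so treat the result as a starting point and measure on your own hardware.

```python
from typing import Optional

# Download sizes in GB, copied from the table above.
MODEL_SIZES_GB = {
    "gemma3:1b": 0.815,
    "llama3.2:3b": 2.0,
    "deepseek-r1:32b": 20.0,
    "llama3.3:70b": 43.0,
}


def pick_model(available_memory_gb: float, headroom: float = 1.5) -> Optional[str]:
    """Return the largest listed model whose size times `headroom` fits in memory.

    `headroom` is a hypothetical safety margin for context and runtime overhead.
    Returns None when not even the smallest model fits.
    """
    fitting = [
        (size, name)
        for name, size in MODEL_SIZES_GB.items()
        if size * headroom <= available_memory_gb
    ]
    return max(fitting)[1] if fitting else None
```

With these assumptions, a machine with 8GB of memory would be matched with llama3.2:3b, which is consistent with the recommendation to start testing on that model.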

Database integration and automated reporting workflow

The real power of this setup emerges when you integrate it with your existing database systems. Here’s how to create an automated reporting pipeline:

Step 1: Data extraction and preprocessing

Create a Python script that extracts data from your database and formats it for presentation generation:

```python
import psycopg2
import requests


def generate_weekly_report():
    """Query last week's metrics and return them as plain text for Presenton."""
    # Connect to your database
    conn = psycopg2.connect(
        host="localhost",
        database="your_database",
        user="your_user",
        password="your_password",
    )
    try:
        # Extract weekly data
        with conn.cursor() as cursor:
            cursor.execute("""
                SELECT metric_name, current_value, previous_value, growth_rate
                FROM weekly_metrics
                WHERE week_start = CURRENT_DATE - INTERVAL '7 days'
            """)
            data = cursor.fetchall()
    finally:
        # Always release the connection, even if the query fails
        conn.close()

    # Format data for presentation
    report_content = "Weekly Performance Report\n\n"
    for metric_name, current_value, previous_value, growth_rate in data:
        report_content += f"{metric_name}: {current_value} (Change: {growth_rate}%)\n"
    return report_content
```

Step 2: Presentation generation via Presenton API

Use the Presenton API to automatically generate presentations from your data:

```python
def generate_presentation_from_data(content):
    """Send report text to the local Presenton API and return the generated file path."""
    api_url = "http://localhost:5000/api/v1/ppt/presentation/generate"
    payload = {
        "content": content,
        "n_slides": 8,
        "language": "English",
        "template": "professional_blue",
        "export_as": "pptx",
        "tone": "professional",
        "verbosity": "standard",
    }
    # Local generation can take minutes; the timeout keeps a stalled
    # request from hanging the scheduled job indefinitely.
    response = requests.post(api_url, json=payload, timeout=600)
    if response.status_code == 200:
        result = response.json()
        return result["path"]
    raise RuntimeError(f"Presentation generation failed: {response.text}")
```

Step 3: Automation and scheduling

Set up a cron job or scheduled task to run your reporting pipeline automatically:

```
# Weekly report generation (every Monday at 9 AM)
0 9 * * 1 /usr/bin/python3 /path/to/your/report_generator.py
```

Advanced configuration for production use

For enterprise deployments, consider these advanced configurations to optimize performance and reliability.
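
Reliability starts with the scheduled job itself: a weekly cron run that fails once on a transient database or API error produces no report until the following week. The sketch below is one way to retry with exponential backoff. The helper `run_with_retries` and its default values are assumptions for illustration, not part of Presenton.

```python
import time


def run_with_retries(task, attempts=3, base_delay=30.0, sleep=time.sleep):
    """Call `task()` until it succeeds, doubling the wait after each failure.

    Raises RuntimeError (chained to the last error) once all attempts fail.
    """
    last_error = None
    for attempt in range(attempts):
        try:
            return task()
        except Exception as error:  # deliberately broad: any failure triggers a retry
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))  # 30s, 60s, 120s, ...
    raise RuntimeError(f"gave up after {attempts} attempts") from last_error
```

In `report_generator.py` this could wrap the two functions from Steps 1 and 2, for example `run_with_retries(lambda: generate_presentation_from_data(generate_weekly_report()))`. If every attempt fails, the uncaught exception makes the script exit with a non-zero status, which cron and most monitoring tools can detect.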

GPU acceleration for faster generation

If you have NVIDIA GPUs available, enable GPU acceleration for significantly faster presentation generation:

```bash
docker run -it --name presenton --gpus=all -p 5000:80 \
  -e LLM="ollama" \
  -e OLLAMA_MODEL="llama3.3:70b" \
  -e PEXELS_API_KEY="your_pexels_api_key" \
  -e CAN_CHANGE_KEYS="false" \
  -v "./user_data:/app/user_data" \
  ghcr.io/presenton/presenton:latest
```

External Ollama server configuration

For larger deployments, run Ollama on a dedicated server and connect multiple Presenton instances:

```bash
docker run -it --name presenton -p 5000:80 \
  -e LLM="ollama" \
  -e OLLAMA_MODEL="llama3.3:70b" \
  -e OLLAMA_URL="http://your-ollama-server:11434" \
  -e PEXELS_API_KEY="your_pexels_api_key" \
  -e CAN_CHANGE_KEYS="false" \
  -v "./user_data:/app/user_data" \
  ghcr.io/presenton/presenton:latest
```

Security considerations and best practices

Maintaining security is crucial when automating sensitive reporting processes. Follow these best practices:

- Network isolation: Run your entire setup in an isolated network segment

- Access controls: Implement proper authentication for database access

- Data encryption: Encrypt sensitive data at rest and in transit

- Regular updates: Keep Presenton and Ollama updated to latest versions

- Monitoring: Implement logging and monitoring for security events
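
As one concrete instance of the access-control point, database credentials can be read from the environment instead of being hard-coded in the report script. This is a minimal sketch: the variable names (`REPORT_DB_HOST` and so on) and the helper `load_db_config` are hypothetical, chosen for illustration only.

```python
import os

# Hypothetical variable names; use whatever your deployment defines.
REQUIRED_KEYS = (
    "REPORT_DB_HOST",
    "REPORT_DB_NAME",
    "REPORT_DB_USER",
    "REPORT_DB_PASSWORD",
)


def load_db_config(env=None):
    """Build keyword arguments for psycopg2.connect() from environment variables."""
    env = os.environ if env is None else env
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        # Fail early and name the missing variables, never their values.
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {
        "host": env["REPORT_DB_HOST"],
        "database": env["REPORT_DB_NAME"],
        "user": env["REPORT_DB_USER"],
        "password": env["REPORT_DB_PASSWORD"],
    }
```

The connection in Step 1 would then become `psycopg2.connect(**load_db_config())`, and the password no longer appears in the script or in version control.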

Custom templates for brand consistency

Presenton supports custom templates to ensure your automated reports maintain brand consistency. Create templates using your company’s branding guidelines:

```bash
# Upload your company PPTX template
curl -X POST http://localhost:5000/api/v1/ppt/templates/upload \
  -F "file=@company_template.pptx" \
  -F "name=company_brand" \
  -F "description=Company branded presentation template"
```

Then specify your custom template in the generation request:

```python
payload = {
    "content": report_content,
    "n_slides": 8,
    "template": "company_brand",
    "export_as": "pptx",
}
```

Troubleshooting common issues

Here are solutions to common challenges you might encounter:

- Model download failures: Check network connectivity and disk space

- GPU not detected: Verify NVIDIA Container Toolkit installation

- Memory errors: Use smaller models or increase available RAM

- Slow generation: Enable GPU acceleration or optimize model selection

- API connection issues: Verify Presenton container is running on correct port
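
Two of these checks are easy to script: free disk space before a model download, and whether anything is listening on the published port. The sketch below uses only the Python standard library. The helper names are illustrative, and port 5000 is assumed from the `docker run` commands in this guide.

```python
import shutil
import socket


def free_disk_gb(path="."):
    """Free space on the filesystem holding `path`, in gigabytes (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def port_is_open(host="localhost", port=5000, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, or unreachable host.
        return False
```

For example, comparing `free_disk_gb()` with the model size from the table before the first start can explain a failed download, and `port_is_open()` returning False points to a stopped container or a wrong port mapping rather than a problem in the report script.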

Performance optimization strategies

Optimize your setup for maximum efficiency:

- Model persistence: Add volume mounting to avoid re-downloading models

- Caching: Implement result caching for repetitive report types

- Batch processing: Generate multiple reports in parallel when possible

- Resource monitoring: Monitor CPU, memory, and GPU usage for optimization
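
The caching point can be sketched as follows: identical request payloads are detected by hashing a canonical JSON form, and the generator is only called for payloads not seen before. The names `payload_key` and `PresentationCache` are hypothetical. The cache lives in memory, so it only helps within one process; a cron-driven setup would need to persist the mapping to disk or a database.

```python
import hashlib
import json


def payload_key(payload):
    """Stable SHA-256 digest of a JSON-serializable payload, independent of key order."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class PresentationCache:
    """In-memory cache mapping payload digests to generation results."""

    def __init__(self, generate):
        self._generate = generate  # callable: payload dict -> result (e.g. a file path)
        self._results = {}

    def get(self, payload):
        """Return the cached result for `payload`, generating it on first use."""
        key = payload_key(payload)
        if key not in self._results:
            self._results[key] = self._generate(payload)
        return self._results[key]
```

Since LLM output varies between runs, a cache hit returns the earlier file rather than a fresh variation, which is usually what you want for repeated requests with identical data.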

Real-world use cases and benefits

Organizations implementing this solution report significant benefits:

- Time savings: Reduced report generation time from hours to minutes

- Cost reduction: Elimination of per-token API costs for cloud AI services

- Security compliance: Meeting strict data governance requirements

- Consistency: Automated brand compliance across all reports

- Scalability: Ability to generate hundreds of reports automatically

Future developments and roadmap

The Presenton development team continues to enhance the platform. According to their public roadmap for 2025-2026, upcoming features include:

- Enhanced SQL database integration for direct query-to-presentation workflows

- Advanced chart and visualization capabilities

- Multi-language support expansion

- Enterprise-grade deployment templates

- Enhanced MCP (Model Context Protocol) server capabilities

Getting started with your automated reporting system

Automated reporting with Presenton and Ollama suits organizations that need both data security and operational efficiency: report data is processed by a model running inside your own infrastructure rather than by a cloud LLM service, and the output is still a professional-quality PPTX file.

Start with a simple proof-of-concept using the basic setup commands provided, then gradually expand to full production deployment as you refine your reporting workflows. The combination of Presenton’s flexible API and Ollama’s local AI capabilities creates a powerful foundation for secure, automated business intelligence reporting.

For detailed documentation and community support, visit the official Presenton documentation and join their active Discord community where developers share implementation experiences and best practices.