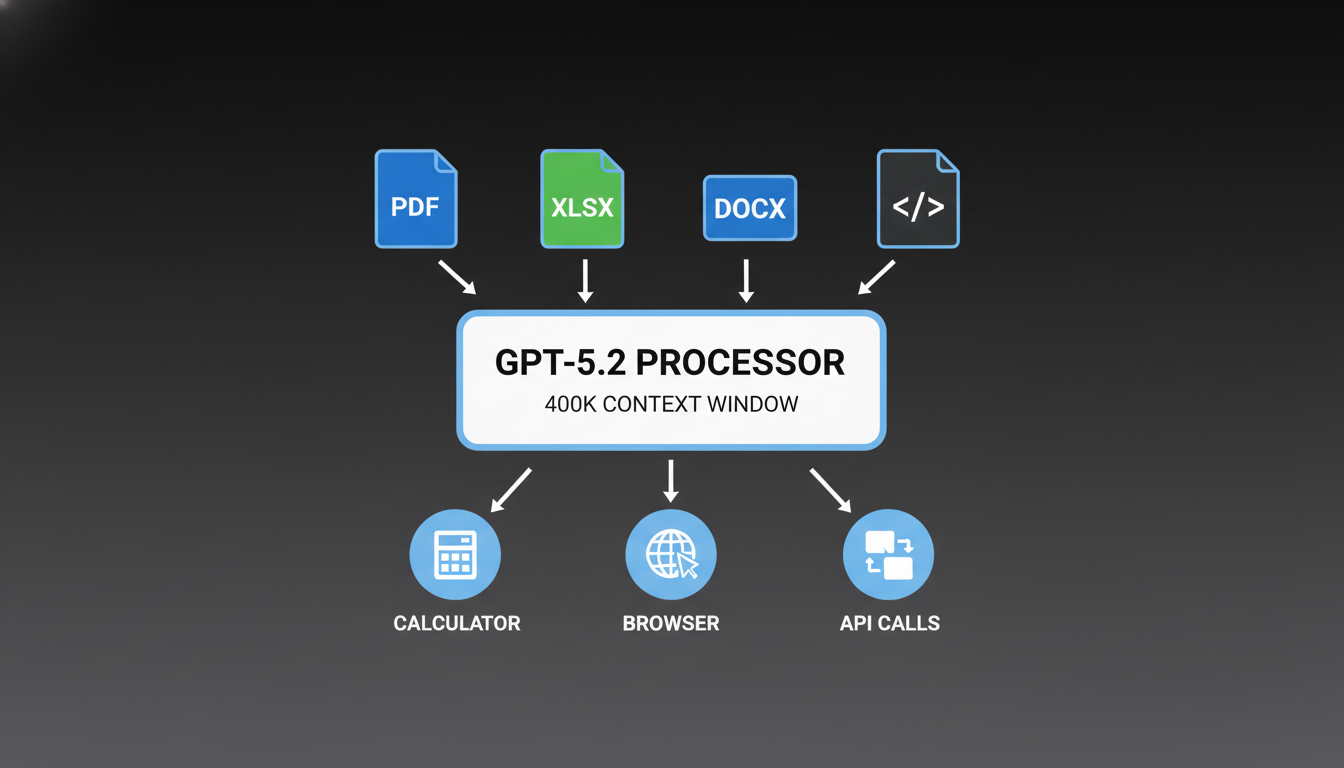

OpenAI released GPT-5.2 on December 11, 2025. Its headline features are a 400,000-token context window and more reliable agentic tool calling, and it delivers substantial improvements over GPT-5.1 in professional knowledge work, coding, and complex workflow automation.

What makes GPT-5.2 different

GPT-5.2 builds on the foundation of GPT-5.1 with several key improvements that make it particularly valuable for enterprise and developer use cases:

- 400k context window: Large codebases, multi-chapter documents, and complex workflows can be processed in a single interaction

- Enhanced agentic tool-calling: Improved reliability in multi-step tool execution with better coordination across different systems

- Superior coding capabilities: Scores 55.6% on SWE-Bench Pro and 80% on SWE-bench Verified, with gains in both code generation and debugging

- Better vision understanding: Halves error rates on chart reasoning and software interface interpretation

- Reduced hallucination: 30% fewer errors compared to GPT-5.1 on enterprise queries

Practical applications of the 400k context window

The massive context window opens up several powerful use cases that were previously impractical:

Complete codebase analysis

For repositories that fit within the window, GPT-5.2 can ingest the whole codebase, analyze dependencies, and suggest architectural improvements across hundreds of files in a single request. This removes the need to chunk the code and stitch partial analyses back together.
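Before sending a whole repository, it is worth checking that it actually fits. The stdlib-only sketch below rests on an assumed rule of thumb of about four characters per token, so its numbers are rough; a real tokenizer gives exact counts. The helper names estimate_tokens, repo_token_estimate, and fits_in_context are hypothetical, and the default 4,000-token output reserve is an assumption (reasoning tokens also occupy the window, so reserve generously).

```python
from pathlib import Path

# Assumed heuristic: roughly 4 characters per token for English text and code.
# This is a ballpark estimate only, not an exact tokenizer count.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 400_000  # GPT-5.2 context window as stated in this article


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (rounded up)."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def repo_token_estimate(root: str, suffixes: tuple[str, ...] = (".py",)) -> int:
    """Sum estimated tokens over all files under root whose suffix is in suffixes."""
    total = 0
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(estimated_input: int, reserved_output: int = 4_000,
                    window: int = CONTEXT_WINDOW) -> bool:
    """True if estimated input plus the reserved output budget fits in the window."""
    return estimated_input + reserved_output <= window
```

For example, fits_in_context(repo_token_estimate("my_project")) returning False is a signal to fall back to chunking or summarization; "my_project" is a placeholder path.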

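```python
# Example: analyzing a complete Python project
from pathlib import Path

from openai import OpenAI

client = OpenAI()

# Concatenate every .py file under the project root, each labeled with its path.
# "my_project" is a placeholder; point it at your own repository.
project_root = Path("my_project")
project_files = "\n\n".join(
    f"# File: {path}\n{path.read_text(encoding='utf-8', errors='ignore')}"
    for path in sorted(project_root.rglob("*.py"))
)

response = client.responses.create(
    model="gpt-5.2",
    input=[
        {
            "role": "user",
            "content": (
                "Analyze this entire Python project structure and suggest improvements "
                "to code organization, performance bottlenecks, and security issues."
            ),
        },
        {"role": "user", "content": f"Project files:\n{project_files}"},
    ],
    # max_output_tokens also caps reasoning tokens; raise it if the response is incomplete.
    max_output_tokens=4000,
)

print(response.output_text)
```

Legal document review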

Legal teams can now process entire contract portfolios, identifying inconsistencies, risks, and opportunities across multiple documents without losing context between sections.

Research paper synthesis

Researchers can upload dozens of academic papers and receive comprehensive literature reviews, identifying connections and contradictions across the entire corpus.
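Both contract review and literature synthesis depend on the model telling documents apart and citing which one a finding came from. One common approach, sketched here as an assumption rather than an OpenAI-prescribed format, is to wrap each document in explicit tags carrying an identifier before combining everything into a single input. Document and pack_documents are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # hypothetical identifier, e.g. a contract number or citation key
    title: str
    text: str


def pack_documents(docs: list[Document], instructions: str) -> str:
    """Combine many documents into one prompt with explicit delimiters.

    Each document is wrapped in <document id="..."> tags so the model can
    refer back to the source of each finding.
    """
    parts = [instructions.strip(), ""]
    for doc in docs:
        parts.append(f'<document id="{doc.doc_id}" title="{doc.title}">')
        parts.append(doc.text.strip())
        parts.append("</document>")
    return "\n".join(parts)
```

The resulting string can be passed as the input of a single client.responses.create call, with instructions asking the model to cite document ids next to each inconsistency or contradiction it reports.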

Implementing agentic tool-calling workflows

GPT-5.2’s improved tool-calling capabilities enable more reliable multi-step workflows. The model can coordinate across different tools and systems with better error handling and recovery.

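```python
# Example: multi-step customer support workflow
from openai import OpenAI

client = OpenAI()

# The Responses API takes function tools in a flat shape:
# {"type": "function", "name": ..., "description": ..., "parameters": ...}
tools = [
    {
        "type": "function",
        "name": "search_customer_records",
        "description": "Search customer booking and history",
        "parameters": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
            "additionalProperties": False,
        },
    },
    {
        "type": "function",
        "name": "rebook_flight",
        "description": "Find and book alternative flights",
        "parameters": {
            "type": "object",
            "properties": {
                "customer_id": {"type": "string"},
                "new_itinerary": {"type": "string"},
            },
            "required": ["customer_id", "new_itinerary"],
            "additionalProperties": False,
        },
    },
    {
        "type": "function",
        "name": "process_compensation",
        "description": "Initiate compensation for delays and inconveniences",
        "parameters": {
            "type": "object",
            "properties": {
                "customer_id": {"type": "string"},
                "compensation_type": {"type": "string"},
            },
            "required": ["customer_id", "compensation_type"],
            "additionalProperties": False,
        },
    },
]

response = client.responses.create(
    model="gpt-5.2",
    input=[
        {
            "role": "user",
            "content": (
                "A customer reports a delayed flight, missed connection, overnight stay "
                "in New York, and a medical seating requirement. Handle the entire "
                "resolution process."
            ),
        }
    ],
    tools=tools,
    parallel_tool_calls=True,
)

# The model replies with function_call items. Your code executes them and sends the
# results back as function_call_output items in a follow-up request.
for item in response.output:
    if item.type == "function_call":
        print(item.name, item.arguments)
```

Best practices for tool-calling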

- Use parallel tool calls: Enable independent operations to run simultaneously for better performance

- Implement verification steps: Add validation after critical operations to ensure success

- Handle errors gracefully: Design workflows with fallback options for failed tool calls

- Monitor context usage: Use the Responses API’s compaction feature for extended workflows
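Most of these practices live in your own code: when the model returns several function calls at once, your application executes them and returns the results. The sketch below runs independent calls concurrently, turns failures into error payloads the model can react to instead of crashing the workflow, and keeps results in the original call order. It assumes each call is a plain dict with name, arguments (a JSON string), and call_id, mirroring the fields of the Responses API's function_call items; the registry of local tool functions and the ok/error payload shape are hypothetical.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable

# Hypothetical local tool implementations, keyed by the tool name the model calls.
ToolFn = Callable[..., Any]


def run_one(call: dict, registry: dict[str, ToolFn]) -> dict:
    """Execute one function call and wrap the result as a function_call_output item."""
    try:
        fn = registry[call["name"]]
        args = json.loads(call["arguments"] or "{}")
        result = {"ok": True, "result": fn(**args)}
    except Exception as exc:  # report the failure to the model instead of crashing
        result = {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
    return {
        "type": "function_call_output",
        "call_id": call["call_id"],
        "output": json.dumps(result),
    }


def run_tool_calls(calls: list[dict], registry: dict[str, ToolFn],
                   max_workers: int = 4) -> list[dict]:
    """Run independent calls concurrently; pool.map preserves the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda c: run_one(c, registry), calls))
```

With the OpenAI SDK, the dicts can come from [item.model_dump() for item in response.output if item.type == "function_call"], and the returned items go back to the model in the next request (for example alongside previous_response_id=response.id). Checking the ok field before starting the next step is a simple form of verification.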

API integration and pricing

GPT-5.2 is available through OpenAI’s API with the following specifications:

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Context window |
|---|---|---|---|
| GPT-5.2 | $1.75 | $14 | 400k tokens |
| GPT-5.2 Pro | $21 | $168 | 400k tokens |
| GPT-5.1 (previous) | $1.25 | $10 | 272k tokens |
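Per-million-token prices make per-request costs easy to estimate. A minimal sketch using the GPT-5.2 and GPT-5.1 list prices from the table; it counts reasoning tokens as part of output_tokens (OpenAI bills them as output) and ignores cached-input discounts and other pricing tiers.

```python
# List prices in USD per 1M tokens, taken from the table above.
PRICES = {
    "gpt-5.2": {"input": 1.75, "output": 14.00},
    "gpt-5.1": {"input": 1.25, "output": 10.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; output_tokens should include reasoning tokens."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000
```

For a 300k-token input with 4k output tokens, estimate_cost("gpt-5.2", 300_000, 4_000) comes to $0.581, against $0.415 for the same request on GPT-5.1.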

The Responses API also supports compaction, which condenses earlier turns so that long-running workflows can extend their effective context beyond the standard window:

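```python
# Using compaction for extended workflows
from pathlib import Path

from openai import OpenAI

client = OpenAI()

# "long_document.txt" is a placeholder for a large (e.g. ~300k-token) input.
document = Path("long_document.txt").read_text(encoding="utf-8")
history = [{"role": "user", "content": f"Analyze this document:\n{document}"}]

# Initial response
response = client.responses.create(model="gpt-5.2", input=history)

# Compact the whole conversation so far (the input plus the model's output items).
# Verify the compact() signature against your installed SDK version.
compacted = client.responses.compact(
    model="gpt-5.2",
    input=[*history, *response.output],
)

# Continue from the compacted items rather than the full history.
# previous_response_id is omitted because it would re-attach the uncompacted context.
next_response = client.responses.create(
    model="gpt-5.2",
    input=[*compacted.output, {"role": "user", "content": "Now list the key risks."}],
)
print(next_response.output_text)
```

Performance benchmarks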

GPT-5.2 demonstrates significant improvements across key benchmarks:

| Benchmark | GPT-5.2 Thinking | GPT-5.1 Thinking | Relative improvement |
|---|---|---|---|
| GDPval (knowledge work) | 70.9% | 38.8% | 82.7% |
| SWE-Bench Pro (coding) | 55.6% | 50.8% | 9.4% |
| Long-context reasoning | 98.2% | 65.3% | 50.4% |
| Tool usage reliability | 98.7% | 95.6% | 3.2% |
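The improvement column is a relative change, (new - old) / old, not a difference in percentage points, so two benchmarks with similar point gains can show very different percentages. The short script below recomputes both views from the table's scores.

```python
# Scores (%) from the table above: (GPT-5.2 Thinking, GPT-5.1 Thinking)
SCORES = {
    "GDPval": (70.9, 38.8),
    "SWE-Bench Pro": (55.6, 50.8),
    "Long-context reasoning": (98.2, 65.3),
    "Tool usage reliability": (98.7, 95.6),
}


def relative_improvement(new: float, old: float) -> float:
    """Percent change relative to the old score."""
    return (new - old) / old * 100


def point_gain(new: float, old: float) -> float:
    """Absolute difference in percentage points."""
    return new - old


for name, (new, old) in SCORES.items():
    print(f"{name}: +{point_gain(new, old):.1f} pts, "
          f"+{relative_improvement(new, old):.1f}% relative")
```

GDPval and long-context reasoning both gain roughly 32 to 33 points, yet their relative improvements are 82.7% and 50.4% because they start from different baselines.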

Getting started with GPT-5.2

To begin using GPT-5.2 in your projects:

- Update your API client: Ensure you’re using the latest OpenAI SDK version

- Migrate prompts: Most GPT-5.1 prompts carry over to GPT-5.2 with little or no change, but re-test the ones your workflows depend on

- Set reasoning effort: Configure reasoning.effort in the Responses API (reasoning_effort in Chat Completions) based on your use case requirements

- Test long-context workflows: Experiment with larger inputs to leverage the 400k context window

- Monitor performance: Track improvements in accuracy and efficiency
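The reasoning-effort and migration steps come down to a few request parameters. A minimal sketch that builds keyword arguments for client.responses.create; the task categories and the effort assigned to each are hypothetical defaults to tune against your own latency and cost measurements, and only the low, medium, and high effort values are used.

```python
# Hypothetical mapping from workload type to reasoning effort.
EFFORT_BY_TASK = {
    "chat": "low",
    "coding": "medium",
    "analysis": "high",
}


def build_request(task: str, prompt: str, model: str = "gpt-5.2") -> dict:
    """Build keyword arguments for client.responses.create(**kwargs)."""
    effort = EFFORT_BY_TASK.get(task, "medium")  # fall back to a middle setting
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},
    }
```

Call it as client.responses.create(**build_request("analysis", prompt)). Keeping the model name as a parameter also turns the GPT-5.1 to GPT-5.2 migration into a one-argument change that is easy to A/B test.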

For developers building agentic systems, GPT-5.2 offers superior reliability in multi-step workflows and better coordination across tool calls. The expanded context window enables processing of entire codebases and document collections that were previously impractical.

Real-world implementation examples

Financial analysis workflow

Investment banks can use GPT-5.2 to analyze entire financial models, regulatory filings, and market research simultaneously:

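```python
# Financial modeling analysis
from pathlib import Path

from openai import OpenAI

client = OpenAI()

# Placeholder inputs: the model exported as CSV text, plus a supporting PDF report.
model_csv = Path("financial_model.csv").read_text(encoding="utf-8")
with open("annual_report.pdf", "rb") as f:
    report = client.files.create(file=f, purpose="user_data")

response = client.responses.create(
    model="gpt-5.2",
    input=[
        {
            "role": "user",
            "content": [
                {
                    "type": "input_text",
                    "text": (
                        "Analyze this three-statement financial model for a Fortune 500 "
                        "company. Identify inconsistencies, calculate key ratios, and "
                        "suggest improvements to formatting and citations."
                    ),
                },
                {"type": "input_text", "text": f"Financial model (CSV):\n{model_csv}"},
                {"type": "input_file", "file_id": report.id},
            ],
        }
    ],
    reasoning={"effort": "high"},
)
print(response.output_text)
```

Customer support automation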

GPT-5.2 can handle complex customer issues requiring multiple system interactions and documentation review:

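```python
# Multi-system customer support
from openai import OpenAI

client = OpenAI()

# Placeholder: populate with your CRM, billing, support ticket, and knowledge base
# function tools before running.
support_tools = []

response = client.responses.create(
    model="gpt-5.2",
    input=(
        "Customer reports billing issue, service outage, and account access problems. "
        "Resolve end-to-end."
    ),
    tools=support_tools,
    parallel_tool_calls=True,
    # max_tool_calls caps built-in tool calls per response; limit your own
    # function-call loop separately.
    max_tool_calls=10,
)
```

Conclusion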

GPT-5.2’s 400k context window and enhanced agentic capabilities represent a significant advancement for developers and enterprises building complex AI systems. Processing entire codebases, legal document sets, and multi-step workflows in a single interaction removes much of the chunking and stitching that smaller context limits forced on earlier architectures. Combined with improved tool-calling reliability and reduced hallucination rates, GPT-5.2 supports more sophisticated automation and analysis workflows.

As organizations continue to adopt AI for professional knowledge work, GPT-5.2 provides a foundation for building more reliable, comprehensive, and efficient AI-powered systems. The model is available now through OpenAI’s API, and it is rolling out gradually to paid ChatGPT subscribers over the coming weeks.