With the launch of Gemini 3 in November 2025, Google changed how developers are advised to prompt its models. Earlier models often needed elaborate prompt engineering to perform well; Gemini 3’s reasoning capabilities favor direct, structured interactions that yield more precise results.

What makes Gemini 3 prompting different

Gemini 3 represents a significant departure from traditional AI prompting approaches. As Google’s official documentation states, “Gemini 3 is a reasoning model, which changes how you should prompt.” The model favors directness over persuasion and logic over verbosity, making it particularly effective for developers who need precise, reliable results.

According to Google’s Gemini 3 Developer Guide updated December 5, 2025, the model introduces several key innovations:

- Thinking levels instead of thinking budgets for more predictable reasoning control

- Thought signatures for maintaining reasoning context across API calls

- Structured outputs with tools combining JSON schema with built-in Google services

- Media resolution control for granular multimodal processing

Core prompting principles for Gemini 3

Based on testing with Gemini 3 Pro since its release on November 18, 2025, developers have identified several core principles that maximize performance:

Precision over persuasion

Gemini 3 responds best to direct, clear instructions rather than elaborate explanations. The official Google documentation recommends: “Be concise in your input prompts. Gemini 3 responds best to direct, clear instructions. It may over-analyze verbose or overly complex prompt engineering techniques used for older models.”

Instead of persuasive language, use straightforward commands:

    // Instead of: "I would really appreciate if you could..."
    // Use: "Generate Python code for data analysis"

    // Instead of: "Could you please explain this concept thoroughly?"
    // Use: "Explain this in 3 bullet points"

Consistent structure

Gemini 3 excels with structured prompts using either XML or Markdown formatting, but consistency is key. Choose one format and stick with it throughout your application to avoid confusing the model.
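One way to keep the format consistent across an application is to route every prompt through a single builder. The sketch below is a minimal illustration that assumes XML-style tags; the function name `build_prompt` and the sample rules, context, and task are hypothetical.

```python
def build_prompt(rules: list[str], context: str, task: str) -> str:
    """Assemble a prompt that uses the same XML-style tags for every section."""
    # Number the rules so the model can refer to them individually.
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    sections = [
        ("rules", numbered),
        ("context", context),
        ("task", task),
    ]
    # Every section gets an opening tag, its body, and a matching closing tag.
    return "\n\n".join(f"<{tag}>\n{body}\n</{tag}>" for tag, body in sections)


prompt = build_prompt(
    rules=["Be objective", "Cite sources"],
    context="Q3 revenue grew 4% year over year.",  # sample data, not from the article
    task="Summarize the revenue trend in 3 bullet points",
)
print(prompt)
```

Because every caller goes through the same function, tag names and section order cannot drift between prompts. The resulting prompt follows this layout: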

    <rules>
    1. Be objective
    2. Cite sources
    </rules>

    <context>
    [Your data here]
    </context>

    <task>
    [Specific request]
    </task>

Advanced prompting techniques

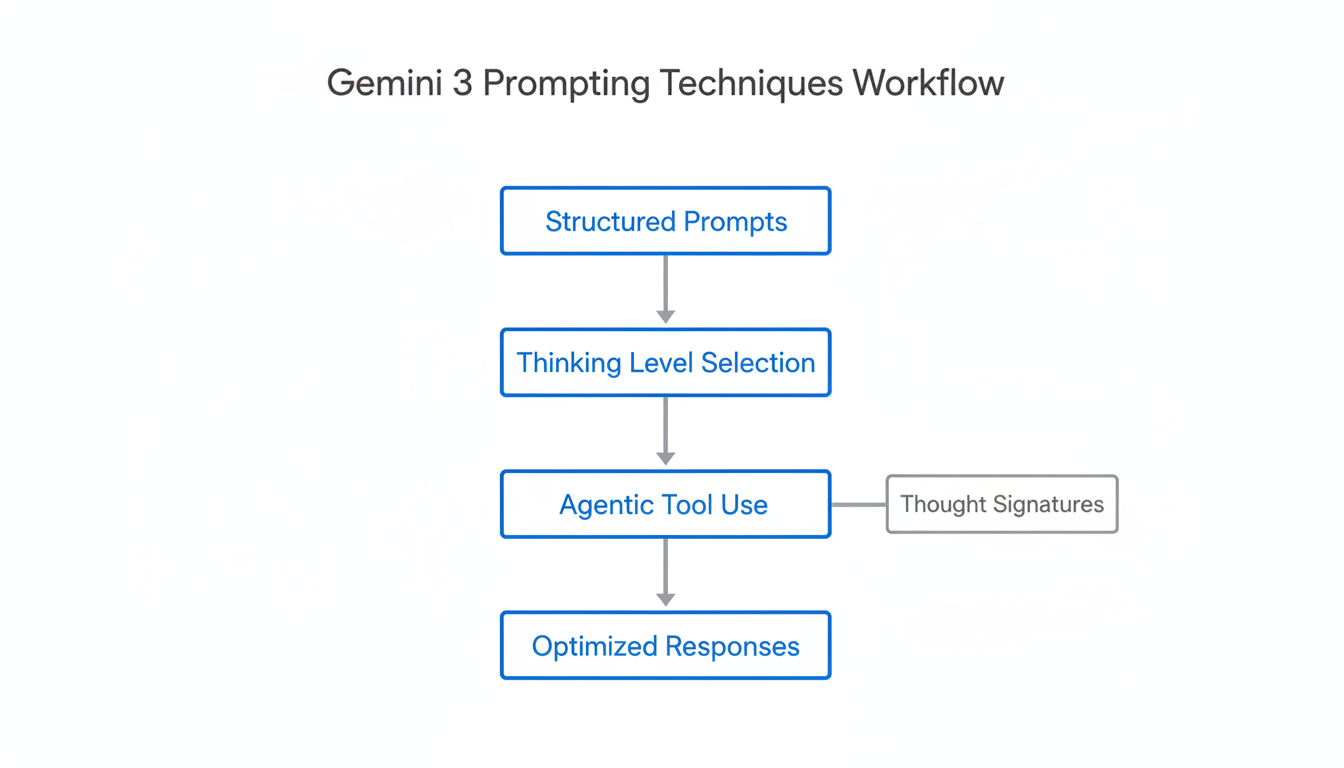

Thinking level control

Gemini 3 introduces the thinking_level parameter, replacing the numeric thinking budget system used in Gemini 2.5. This parameter controls the maximum depth of the model’s internal reasoning process before producing a response.

The available thinking levels as of December 2025 are:

| Thinking Level | Use Case | Performance Impact |
|---|---|---|
| low | Simple instruction following, chat, high-throughput applications | Minimizes latency and cost |
| high (Default) | Complex reasoning tasks requiring careful analysis | Maximizes reasoning depth, longer first token latency |
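In an application that serves several kinds of requests, the choice between the two levels can be centralized in one small function. The following is only a sketch: the task categories in `LOW_EFFORT_TASKS` and both function names are hypothetical, and the returned dict simply mirrors the `thinking_config` and `thinking_level` field names used in this article.

```python
from typing import Literal

ThinkingLevel = Literal["low", "high"]

# Hypothetical task categories for illustration; adjust to your own workload.
LOW_EFFORT_TASKS = {"chat", "classification", "extraction"}


def pick_thinking_level(task_type: str) -> ThinkingLevel:
    """Return "low" for simple, latency-sensitive tasks and "high" otherwise."""
    return "low" if task_type in LOW_EFFORT_TASKS else "high"


def thinking_config(task_type: str) -> dict:
    """Build the thinking configuration fragment as a plain dict."""
    return {"thinking_config": {"thinking_level": pick_thinking_level(task_type)}}


print(thinking_config("chat"))
print(thinking_config("security_audit"))
```

Defaulting unknown task types to "high" matches the model's own default, so a new request type never silently loses reasoning depth.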

Implementation example:

    from google import genai
    from google.genai import types

    client = genai.Client()

    response = client.models.generate_content(
        model="gemini-3-pro-preview",
        contents="Analyze this complex codebase for security vulnerabilities",
        config=types.GenerateContentConfig(
            thinking_config=types.ThinkingConfig(thinking_level="high")
        ),
    )

Thought signatures for context persistence

One of Gemini 3’s most powerful features is thought signatures—encrypted representations of the model’s internal reasoning process. These signatures must be returned in subsequent API calls to maintain reasoning continuity.
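When conversation history is managed by hand, the safest habit is to store each model turn exactly as it was received, so that no signature is dropped or edited. The sketch below illustrates that habit with plain dictionaries in the REST-style shape used in this article; the helper names, the flight number, and the tool result are hypothetical, and `<Sig_A>` is a placeholder rather than a real signature.

```python
import copy


def append_model_turn(history: list[dict], model_content: dict) -> None:
    """Store the model turn unchanged so every thoughtSignature survives."""
    history.append(copy.deepcopy(model_content))


def append_function_response(history: list[dict], name: str, result: dict) -> None:
    """Add the tool result as the user turn that follows the function call."""
    history.append(
        {"role": "user", "parts": [{"functionResponse": {"name": name, "response": result}}]}
    )


def signatures_in(history: list[dict]) -> list[str]:
    """Collect every thoughtSignature present in the history, in order."""
    return [
        part["thoughtSignature"]
        for turn in history
        for part in turn.get("parts", [])
        if "thoughtSignature" in part
    ]


history = [{"role": "user", "parts": [{"text": "Check flight AA100"}]}]
model_turn = {
    "role": "model",
    "parts": [
        {
            "functionCall": {"name": "check_flight", "args": {"flight": "AA100"}},
            "thoughtSignature": "<Sig_A>",  # placeholder value
        }
    ],
}
append_model_turn(history, model_turn)
append_function_response(history, "check_flight", {"status": "delayed"})

# The history sent with the next request still carries the signature.
print(signatures_in(history))
```

Checking `signatures_in(history)` before each request is a cheap way to catch code paths that rebuild model turns from scratch and lose the signature.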

For multi-step function calling scenarios:

    // Model response (contains the signature)
    {
      "role": "model",
      "parts": [
        {
          "functionCall": { "name": "check_flight", "args": {...} },
          "thoughtSignature": "<Sig_A>" // MUST be returned
        }
      ]
    }

    // Subsequent request: replay the model turn with its signature,
    // then add the function response
    {
      "role": "model",
      "parts": [
        { "functionCall": { "name": "check_flight", ... }, "thoughtSignature": "<Sig_A>" }
      ]
    },
    {
      "role": "user",
      "parts": [
        { "functionResponse": { "name": "check_flight", "response": {...} } }
      ]
    }

Agentic tool use patterns

Gemini 3 excels at autonomous tool use when given proper directives. The persistence directive pattern ensures the model continues working until the task is complete:

    You are an autonomous agent.
    - Continue working until the user's query is COMPLETELY resolved.
    - If a tool fails, analyze the error and try a different approach.
    - Do NOT yield control back to the user until you have verified the solution.

Structured outputs with built-in tools

Gemini 3 allows developers to combine structured JSON outputs with Google’s built-in tools like Search Grounding and URL Context. This enables powerful applications where the model can search for information and return structured data in a single API call.

    from typing import List

    from google import genai
    from pydantic import BaseModel, Field

    # Reads the API key from the environment
    client = genai.Client()

    class MatchResult(BaseModel):
        winner: str = Field(description="The name of the winner.")
        final_match_score: str = Field(description="The final match score.")
        scorers: List[str] = Field(description="The name of the scorer.")

    response = client.models.generate_content(
        model="gemini-3-pro-preview",
        contents="Search for all details for the latest Euro.",
        config={
            "tools": [
                {"google_search": {}},
                {"url_context": {}}
            ],
            "response_mime_type": "application/json",
            "response_json_schema": MatchResult.model_json_schema(),
        },
    )

Domain-specific prompting strategies

Coding and development

For programming tasks, Gemini 3 benefits from explicit decomposition:

    Before providing the final code solution:
    1. Analyze the requirements for edge cases
    2. Identify optimal algorithms and data structures
    3. Plan error handling strategy
    4. Validate the approach before implementation
    5. Include comprehensive comments

Research and analysis

Research tasks require careful source management:

    1. Decompose the topic into key research questions
    2. Analyze provided sources independently for each question
    3. Synthesize findings into a cohesive report
    4. CITATION RULE: Every specific claim must be followed by [Source ID]
    5. Flag unsupported claims as estimates

Best practices for production applications

When deploying Gemini 3 in production environments, consider these recommendations:

- Use thinking_level="low" for high-throughput applications to minimize costs

- Always handle thought signatures in multi-turn conversations to maintain reasoning quality

- Set media_resolution appropriately based on content type (high for images, medium for documents)

- Avoid temperature adjustments – Gemini 3 performs best with the default setting of 1.0

- Place instructions at the end when working with large context windows
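The last recommendation, placing instructions after the context, is easy to enforce in code. The following is a minimal sketch; the function name, the `document` tag with its `id` attribute, and the sample texts are hypothetical choices rather than anything the API requires.

```python
def build_long_context_prompt(documents: dict[str, str], instruction: str) -> str:
    """Place all source material first and the instruction last."""
    blocks = [
        f'<document id="{doc_id}">\n{text}\n</document>'
        for doc_id, text in documents.items()
    ]
    context = "\n\n".join(blocks)
    # The task section is appended after the context so it is the last thing the model reads.
    return f"<context>\n{context}\n</context>\n\n<task>\n{instruction}\n</task>"


long_prompt = build_long_context_prompt(
    documents={
        "S1": "Full text of the first report",   # sample data
        "S2": "Full text of the second report",  # sample data
    },
    instruction="List the three findings both reports agree on.",
)
print(long_prompt)
```

Giving each document an identifier also makes it straightforward to ask for citations by source ID in the task section.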

Migrating from Gemini 2.5 to Gemini 3

Developers migrating from Gemini 2.5 should note several key changes:

| Aspect | Gemini 2.5 | Gemini 3 |
|---|---|---|
| Reasoning Control | thinking_budget (numeric) | thinking_level (categorical) |
| Context Management | Standard prompting | Thought signatures required |
| Default Verbosity | More conversational | Direct and concise |
| Temperature Settings | Often tuned lower | Best at default 1.0 |

The migration process typically involves simplifying prompts and adopting the new thinking level parameter while ensuring proper handling of thought signatures for multi-turn interactions.
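As a rough illustration of the configuration side of that migration, the sketch below rewrites a Gemini 2.5-style config dict into the Gemini 3 style. The function name and the 1024-token cutoff between "low" and "high" are assumptions made for this example, not a documented mapping; pick a cutoff that reflects how your workloads actually used the budget.

```python
def migrate_config(old: dict, high_threshold: int = 1024) -> dict:
    """Translate a Gemini 2.5-style config dict into a Gemini 3-style one.

    The threshold is an assumed cutoff, not an official conversion rule.
    """
    # Drop the temperature override so the default of 1.0 applies.
    new = {k: v for k, v in old.items() if k not in ("thinking_config", "temperature")}

    thinking = dict(old.get("thinking_config", {}))
    budget = thinking.pop("thinking_budget", None)
    if budget is not None:
        # Negative budgets (dynamic thinking in 2.5) and large budgets map to "high".
        is_high = budget < 0 or budget >= high_threshold
        thinking["thinking_level"] = "high" if is_high else "low"
    if thinking:
        new["thinking_config"] = thinking
    return new


old_config = {"thinking_config": {"thinking_budget": 8192}, "temperature": 0.2}
print(migrate_config(old_config))
```

Settings unrelated to thinking or temperature pass through unchanged, so the helper can be applied to existing configs without losing other options.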

Conclusion

Gemini 3 changes how prompts should be written, giving developers explicit control over reasoning depth and tool interactions. By adopting the structured prompting techniques outlined in Google’s official documentation and community best practices, developers can make full use of Gemini 3 when building sophisticated AI applications.

The key takeaways for effective Gemini 3 prompting include prioritizing precision over persuasion, maintaining consistent structure, leveraging thinking levels appropriately, and properly handling thought signatures for multi-turn conversations. As Gemini 3 continues to evolve through 2025 and beyond, these foundational techniques will remain essential for developers seeking to build robust, production-ready AI applications.

For the latest updates and detailed technical specifications, refer to the official Gemini API documentation at ai.google.dev/gemini-api/docs/gemini-3, which Google maintains with current information as of December 2025.