As of December 2025, the economics of AI are brutal for many teams: every token you generate in the cloud costs money, adds latency, and raises privacy questions. Google’s Gemma 3 270M flips that equation by giving you a 270M-parameter, instruction-following model that runs in roughly 0.5–1 GB of RAM, supports a 32K-token context window, and is explicitly designed for on-device and Edge AI deployment.

This evergreen guide walks you through how to deploy Gemma 3 270M directly on-device for Edge AI: what the model actually offers, how to choose the right runtimes (MediaPipe LLM, LiteRT, GGUF/Ollama, etc.), and step-by-step examples for Android, iOS, and embedded systems. Along the way, we’ll connect the dots between low memory usage, 32K context, and real cost savings versus cloud-based AI.

Understanding Gemma 3 270M for Edge AI

Gemma 3 270M was introduced on August 14, 2025 as the smallest member of the Gemma 3 family. It has 270 million parameters (about 170M in embeddings and 100M in transformer blocks) and is released in both base and instruction-tuned variants, with Quantization-Aware Training (QAT) checkpoints for INT4 deployment. Internal Google tests showed an INT4-quantized build consuming roughly 0.75% of a Pixel 9 Pro battery for 25 conversations, making it their most power-efficient Gemma model to date.

Crucially for Edge AI, Gemma 3 270M and its 1B sibling are text-only but support a 32K-token context window per request. That’s enough to handle long prompts, multi-turn logs, or embedded documents directly on-device, while larger Gemma 3 models (4B, 12B, 27B) target 128K context on higher-end hardware.

| Model | Parameters | Context window | Typical use |
|---|---|---|---|
| Gemma 3 270M | 270M | 32K tokens | On-device SLM, Edge AI, task models |
| Gemma 3 1B | 1B | 32K tokens | Stronger on-device inference, small servers |
| Gemma 3 4B+ | 4B–27B | Up to 128K tokens | Workstations, cloud, multimodal |

The 270M model shines when you have:

- Strict memory budgets (e.g., <1 GB available) on mobile or embedded devices.

- High-volume but well-defined tasks like classification, extraction, routing, or template-style generation.

- Hard privacy constraints that make cloud calls undesirable or impossible.

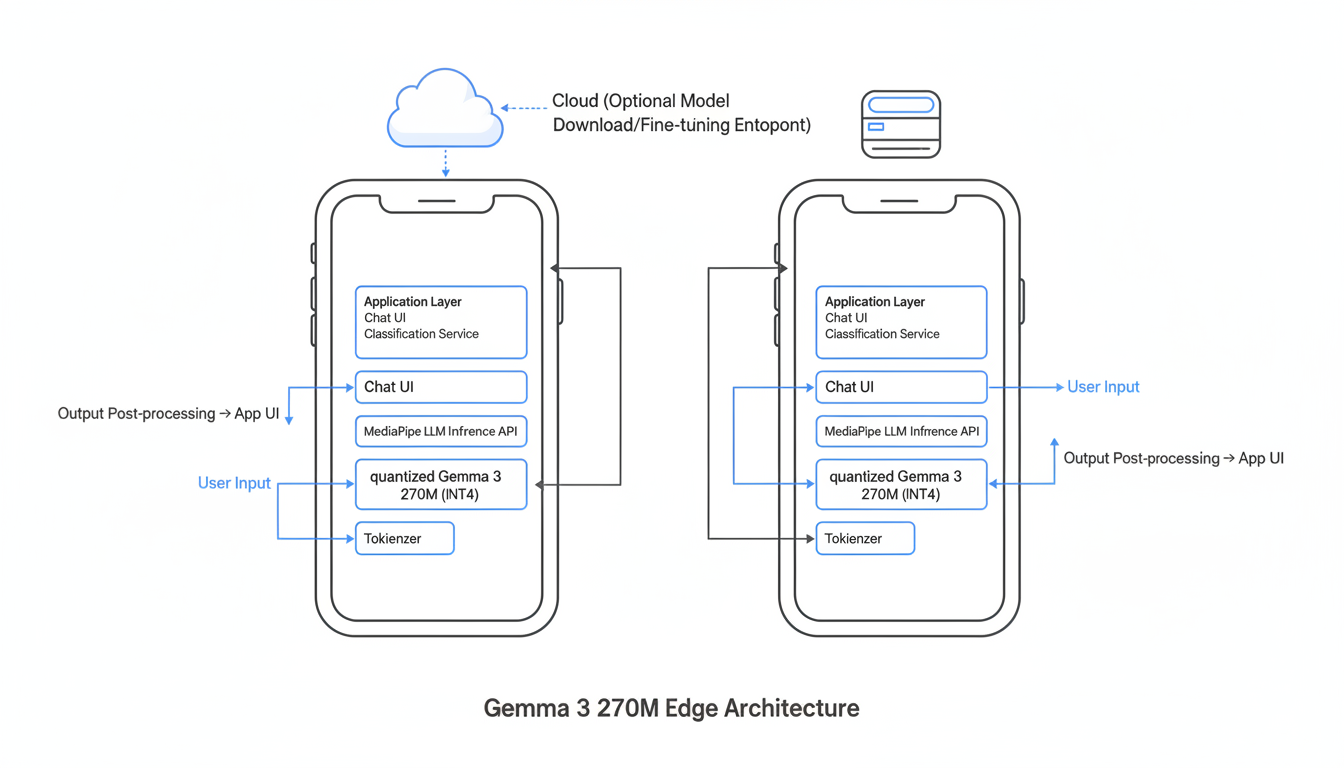

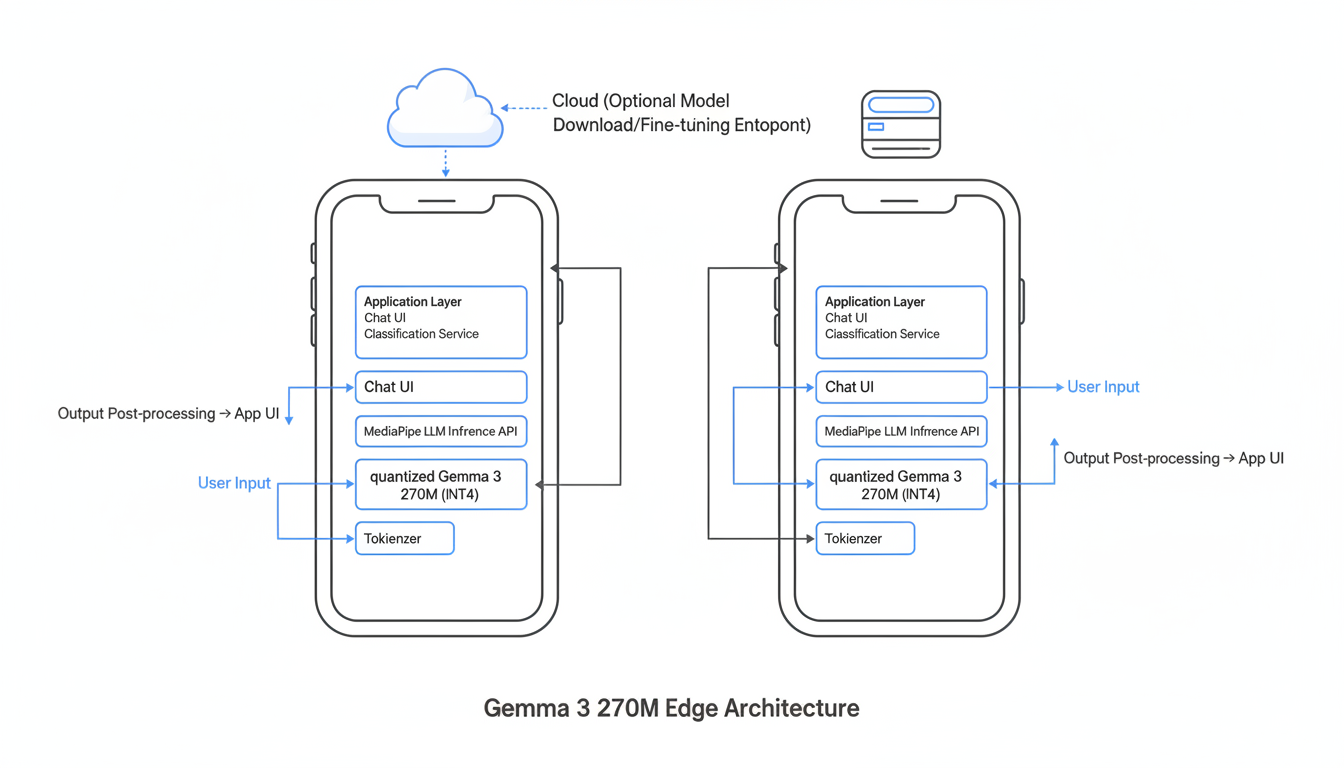

High-level on-device Gemma 3 270M architecture

At a high level, a production-ready on-device Gemma 3 270M stack looks like this:

- User input enters your app (chat UI, form, or background service).

- The text is tokenized locally.

- The quantized Gemma 3 270M model runs inference in an on-device runtime (MediaPipe LLM Inference on LiteRT, llama.cpp with GGUF weights, etc.).

- Tokens are decoded, optionally post-processed (parsing JSON, extracting entities), and rendered back in the app.

- No network calls are made at inference time; cloud is only used for optional fine-tuning or model downloads.

This pattern generalizes across Android, iOS, and embedded devices. The concrete tooling changes, but the key properties stay the same: on-device tokenizer, quantized Gemma 3 270M weights, and a thin runtime API wrapped by your application code.
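Here is a minimal Kotlin sketch of that thin wrapper. It assumes a hypothetical `LocalLlm` interface that you would implement once per runtime (MediaPipe, llama.cpp, and so on), with tokenization and decoding handled inside the runtime. `TicketPipeline` and `FakeLlm` are illustrative names, not library APIs.

```kotlin
// Hypothetical seam between app code and whichever on-device runtime you use.
// Tokenization and decoding happen inside the runtime; the app only sees text.
interface LocalLlm {
    fun generate(prompt: String): String
}

// Illustrative pipeline: build prompt -> run local inference -> post-process.
// Nothing in this path touches the network.
class TicketPipeline(private val llm: LocalLlm) {
    fun summarize(ticket: String): String {
        val prompt = "Summarize this support ticket in one line:\n$ticket"
        val raw = llm.generate(prompt)
        // Post-processing: keep only the first non-blank line, trimmed.
        return raw.lineSequence().map { it.trim() }.firstOrNull { it.isNotEmpty() } ?: ""
    }
}

// Test double so the pipeline can be exercised without a model file.
class FakeLlm(private val reply: String) : LocalLlm {
    var lastPrompt: String? = null
    override fun generate(prompt: String): String {
        lastPrompt = prompt
        return reply
    }
}
```

With this seam, moving from MediaPipe to a GGUF runtime only requires a new `LocalLlm` implementation. The pipeline and its tests stay unchanged.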

Deployment paths: mobile, web, and embedded Edge

As of late 2025, you have several mature options for running Gemma 3 270M on-device:

- MediaPipe LLM Inference API for Android and iOS via LiteRT `.task` bundles.

- Web / desktop via LiteRT and MediaPipe’s web integrations, or libraries like Transformers.js.

- Local runtimes such as Gemma.cpp, llama.cpp (GGUF builds), Ollama, LM Studio, and MLX (for Apple silicon).

For pure Edge AI (phones, tablets, low-power boxes), MediaPipe LLM + LiteRT is the most streamlined route, so we’ll focus on that first, then cover GGUF/Ollama for small servers and offline desktops.

MediaPipe LLM Inference + LiteRT overview

MediaPipe LLM Inference exposes a platform-agnostic API for LLMs on Android and iOS. You provide a LiteRT-optimized .task model file (converted from Hugging Face checkpoints), then call the API to generate text.

- Android: Java/Kotlin bindings via the `LlmInference` class of the LLM Inference solution.

- iOS: Swift bindings using the same LLM Inference API concepts.

- Debugging & demos: the Google AI Edge Gallery app, which lets you import custom `.task` models, tune sampling parameters, and test latency and quality on-device.

Step 1: Get Gemma 3 270M and choose a quantized format

First, download the model and decide how you’ll store it on-device. As of November 2025, Google publishes Gemma 3 270M in multiple places:

- Hugging Face: the official `google/gemma-3-270m` and `google/gemma-3-270m-it` repositories, plus QAT INT4 variants.

- Kaggle Models: Keras- and Gemma-library-friendly formats.

- LiteRT / MediaPipe-ready: community and Google-maintained LiteRT checkpoints (for example, `litert-community/gemma-3-270m-it` on Hugging Face).

- GGUF: `ggml-org/gemma-3-270m-GGUF` for llama.cpp, Ollama, and similar runtimes.

For Edge AI on mobile, your best starting points are:

- INT4 QAT checkpoints from Google. They are trained to retain most of their accuracy at 4-bit precision while dramatically cutting RAM usage.

- LiteRT-converted models that can drop directly into MediaPipe LLM Inference, often shared via Hugging Face collections or notebooks like “Convert Gemma 3 270M to LiteRT for MediaPipe LLM Inference API”.
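A quick calculation shows why 4-bit weights matter. The Kotlin sketch below counts weight storage only, using the nominal 270M figure rather than an exact parameter count. The KV cache, activations, and runtime overhead all come on top, so treat the results as lower bounds on RAM.

```kotlin
// Weight storage estimate: parameters x bits per parameter / 8 bits per byte.
// Ignores KV cache, activations, and runtime overhead.
fun weightBytes(params: Long, bitsPerParam: Int): Long = params * bitsPerParam / 8

// Convert bytes to mebibytes for display.
fun toMiB(bytes: Long): Double = bytes / (1024.0 * 1024.0)

// Nominal parameter count from the model name, not the exact checkpoint size.
val gemma270mParams = 270_000_000L
```

For 270M parameters, 16-bit weights come to about 540 MB and 4-bit weights to about 135 MB. That is a 4× reduction before any runtime overhead is added.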

Step 2: Convert Gemma 3 270M to a LiteRT .task bundle

If you start from the raw Hugging Face weights, you’ll need to convert them into a LiteRT-compatible .task bundle. Google provides a Colab notebook (widely referenced in docs and GitHub issues) that automates:

- Downloading the Gemma 3 270M checkpoint from Hugging Face.

- Loading the model with the Gemma library or Transformers.

- Applying quantization (e.g., INT4) if not already quantized.

- Exporting to a LiteRT model plus a `.litertlm` companion file.

- Packaging those into a `.task` file consumable by MediaPipe LLM Inference.

The resulting artifact set typically looks like:

- `gemma-3-270m-it-int4.task`: main LiteRT Task bundle.

- `gemma-3-270m-it-int4.litertlm`: companion metadata file.

Store these on a CDN, in app assets, or in device storage. For mobile apps that need offline guarantees, a good pattern is to download the model once on first launch and cache it on-device. This is often better than shipping it inside the binary: it keeps APK/IPA size reasonable, and every later inference still runs fully offline.
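Below is a minimal Kotlin sketch of the download-once pattern. It assumes you publish a SHA-256 checksum alongside each model file. `ensureModelCached` and its `open` hook are hypothetical names, and the HTTP or CDN client is left to you. Verifying the checksum before first use guards against a truncated download of a large model file.

```kotlin
import java.io.File
import java.io.InputStream
import java.security.MessageDigest

// Streams a file through SHA-256 so a large model never has to fit in memory at once.
fun sha256Hex(file: File): String {
    val digest = MessageDigest.getInstance("SHA-256")
    file.inputStream().use { input ->
        val buffer = ByteArray(64 * 1024)
        while (true) {
            val read = input.read(buffer)
            if (read < 0) break
            digest.update(buffer, 0, read)
        }
    }
    return digest.digest().joinToString("") { "%02x".format(it) }
}

// Returns a verified local model file, fetching it only when missing or corrupt.
// `open` is a hypothetical hook that opens the download stream (HTTP client, CDN SDK, ...).
fun ensureModelCached(target: File, expectedSha256: String, open: () -> InputStream): File {
    if (target.exists() && sha256Hex(target) == expectedSha256) return target

    // Download to a temporary file so an interrupted download never looks like a valid model.
    val tmp = File(target.absoluteFile.parentFile, target.name + ".part")
    open().use { input -> tmp.outputStream().use { output -> input.copyTo(output) } }

    if (sha256Hex(tmp) != expectedSha256) {
        tmp.delete()
        error("Checksum mismatch for ${target.name}")
    }
    // Replace any stale copy; fall back to copy + delete if rename is not possible.
    if (!tmp.renameTo(target)) {
        tmp.copyTo(target, overwrite = true)
        tmp.delete()
    }
    return target
}
```

On Android, `target` would typically live under `context.filesDir`, and the returned path is what you pass to the runtime.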

Step 3: Deploy Gemma 3 270M on Android with MediaPipe LLM

On Android, the workflow is:

- Add MediaPipe LLM and LiteRT dependencies to your Gradle build.

- Place your `.task` file (and companion file) where the app can read it by file path. Either bundle it in `assets` or `res/raw` and copy it to the app's files directory on first run, or download it straight into that directory.

- Initialize the LLM Inference API with the path to the `.task` file.

- Stream or batch-generate tokens using your prompt, then render them in the UI.

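```kotlin
// Kotlin sketch for initializing Gemma 3 270M with MediaPipe LLM Inference (tasks-genai).
// Class and method names follow the MediaPipe docs; check them against the version you use.
import com.google.mediapipe.tasks.genai.llminference.LlmInference
import com.google.mediapipe.tasks.genai.llminference.LlmInferenceSession

// App-specific helper (not part of MediaPipe): LlmInference loads from a file path,
// so a model bundled in assets is copied to filesDir first.
val taskPath = copyTaskFromAssetsToFilesDir("gemma-3-270m-it-int4.task")

val options = LlmInference.LlmInferenceOptions.builder()
    .setModelPath(taskPath)
    .setMaxTokens(512) // caps prompt + response tokens; smaller means lower latency
    .build()

// `context` is your Activity or Application context.
val llm = LlmInference.createFromOptions(context, options)

// Sampling settings are configured per session in recent releases.
val sessionOptions = LlmInferenceSession.LlmInferenceSessionOptions.builder()
    .setTemperature(0.3f) // more deterministic
    .build()

// generateResponse() blocks, so run it on a worker thread and post the result to the UI.
Thread {
    val session = LlmInferenceSession.createFromOptions(llm, sessionOptions)
    session.addQueryChunk("Classify the following ticket by priority: ...")
    val output = session.generateResponse()
    session.close()
    runOnUiThread { outputTextView.text = output }
}.start()
```

Gemma 3 270M’s small footprint means you can comfortably target mid-range Android phones with 4–6 GB of RAM, especially with INT4 quantization. Make sure to: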

- Keep `maxTokens` small for low-latency interactive UIs (it caps prompt and response tokens combined).

- Use streaming generation where supported to improve perceived speed.

- Run heavy inference on a background coroutine or worker thread.
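The background-thread advice can be combined with a latency budget: run the blocking call on a worker thread and stop waiting once the budget is spent. The Kotlin sketch below assumes a blocking `generate` function, such as a wrapper around your runtime's synchronous generate call. `BackgroundInference` is a hypothetical helper, not a MediaPipe API. On Android, `deliver` could be AndroidX's `ContextCompat.getMainExecutor(context)`. `completeOnTimeout` is a Java 9+ API, so check that it is available at your minimum Android API level.

```kotlin
import java.util.concurrent.CompletableFuture
import java.util.concurrent.Executor
import java.util.concurrent.ExecutorService
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

// Keeps blocking inference off the UI thread and enforces a latency budget.
// `generate` stands in for a blocking runtime call (hypothetical wiring);
// `deliver` is where results are posted, e.g. a main-thread executor.
class BackgroundInference(
    private val generate: (String) -> String,
    private val deliver: Executor,
    private val worker: ExecutorService = Executors.newSingleThreadExecutor(),
) {
    // Delivers the model output, or null if it missed the budget.
    // The timeout only stops waiting; it does not abort the running generation.
    fun generateAsync(prompt: String, timeoutMs: Long, onResult: (String?) -> Unit): CompletableFuture<Void> =
        CompletableFuture.supplyAsync<String?>({ generate(prompt) }, worker)
            .completeOnTimeout(null, timeoutMs, TimeUnit.MILLISECONDS)
            .thenAcceptAsync({ onResult(it) }, deliver)

    // The default worker thread is non-daemon, so release it when done.
    fun shutdown() {
        worker.shutdownNow()
    }
}
```

A `null` result is the cue to show a fallback UI, such as "still thinking" or a cached answer, instead of freezing the screen.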

Step 4: Deploy Gemma 3 270M on iOS

On iOS, you use the same .task bundle but different bindings. The MediaPipe LLM Inference API exposes a Swift interface where you provide the model path and a prompt, then receive generated tokens.

```swift
// Swift sketch for using Gemma 3 270M via MediaPipe LLM Inference.
// Names follow the MediaPipe GenAI docs; option properties have moved between
// releases (newer ones put sampling settings on a session), so check your version.
import MediaPipeTasksGenai

guard let taskPath = Bundle.main.path(forResource: "gemma-3-270m-it-int4", ofType: "task") else {
    fatalError("gemma-3-270m-it-int4.task is missing from the app bundle")
}

let options = LlmInferenceOptions()
options.baseOptions.modelPath = taskPath
options.maxTokens = 512     // prompt + response tokens combined
options.temperature = 0.3   // more deterministic

let llm = try LlmInference(options: options)

// generateResponse(inputText:) blocks, so call it off the main thread.
DispatchQueue.global(qos: .userInitiated).async {
    let text = try? llm.generateResponse(inputText: "Summarize this incident log in 3 bullet points:")
    DispatchQueue.main.async {
        self.outputLabel.text = text
    }
}
```

Because the model runs entirely on-device, you can process sensitive logs, health notes, or customer data without sending them to a server. This is a major selling point for regulated industries building native apps.

Step 5: Using Gemma 3 270M on small servers and gateways

For edge gateways, on-prem servers, or developer desktops where you still want to avoid cloud inference, GGUF builds and local runtimes are often simpler to wire up than MediaPipe:

- llama.cpp / Gemma.cpp for C++/CLI integration and embedded deployments.

- Ollama for easy local HTTP APIs and dev tooling.

- LM Studio and MLX for interactive testing on laptops and Apple silicon.

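```bash
# Example: run Gemma 3 270M locally with Ollama
ollama pull gemma3:270m

# Simple one-off generation
ollama run gemma3:270m "Extract key entities from this log as JSON: ..."
```

You can then front this local runtime with your own API, or call it directly from microservices running at the edge. The 32K context is especially useful for ingesting large IoT log excerpts or device traces in one shot, turning a low-cost box into a smart “edge analyst.” Ollama’s default context length may be smaller than the model’s 32K maximum. When you need the full window, raise it explicitly, either with the `num_ctx` option in API requests or with a `PARAMETER num_ctx 32768` line in a Modelfile.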
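An edge microservice can call the same model through Ollama's local HTTP API (`/api/generate` on port 11434). The minimal Kotlin sketch below uses the JDK's built-in `java.net.http` client (Java 11+) and assumes a default local Ollama install. The hand-rolled JSON escaping keeps the example dependency-free; a real service would use a JSON library, both for the request body and for reading the `response` field of the reply.

```kotlin
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest
import java.net.http.HttpResponse

// Minimal JSON string encoding: quotes, backslashes, and control characters.
fun jsonString(s: String): String = buildString {
    append('"')
    for (c in s) {
        when {
            c == '"' -> append("\\\"")
            c == '\\' -> append("\\\\")
            c == '\n' -> append("\\n")
            c == '\r' -> append("\\r")
            c == '\t' -> append("\\t")
            c < ' ' -> append("\\u%04x".format(c.code))
            else -> append(c)
        }
    }
    append('"')
}

// Request body for /api/generate. num_ctx requests the full 32K window,
// since Ollama's default context length may be smaller.
fun ollamaGenerateBody(model: String, prompt: String, numCtx: Int = 32_768): String =
    "{\"model\":${jsonString(model)},\"prompt\":${jsonString(prompt)}," +
        "\"stream\":false,\"options\":{\"num_ctx\":$numCtx}}"

// Sends the request to a local Ollama server and returns the raw JSON reply,
// whose generated text is in the "response" field.
fun callOllama(body: String, baseUrl: String = "http://localhost:11434"): String {
    val request = HttpRequest.newBuilder(URI.create("$baseUrl/api/generate"))
        .header("Content-Type", "application/json")
        .POST(HttpRequest.BodyPublishers.ofString(body))
        .build()
    return HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).body()
}
```

Usage: `callOllama(ollamaGenerateBody("gemma3:270m", logText))`, where `logText` is whatever trace you want analysed.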

Designing edge-native prompts and tasks

Gemma 3 270M is not meant to replace giant cloud LLMs for open-ended conversation. It excels when you constrain the problem. To get the most out of it on-device:

- Use structured outputs like JSON for routing, extraction, and classification, then validate locally.

- Exploit the 32K context by inlining relevant logs, documents, or configuration snippets instead of doing extra network calls.

- Combine with deterministic code: let Gemma 3 270M propose structured data; have traditional code enforce rules, thresholds, and policies.
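The "validate locally" and "deterministic code" points can look like this in Kotlin. The sketch assumes the three-field schema from the example prompt in this section (`priority`, `category`, `summary`). `parseTriage` and the paging rule are illustrative. Regex-based field extraction is a deliberate shortcut to stay dependency-free, not a substitute for a real JSON parser.

```kotlin
// Allowed values mirror the example prompt's schema.
val allowedPriorities = setOf("low", "medium", "high", "critical")
val allowedCategories = setOf("billing", "technical", "account", "other")

data class Triage(val priority: String, val category: String, val summary: String)

// Pulls a flat string field out of model output. Only handles values without
// escape sequences; anything more complex is rejected, which is the safe default.
fun stringField(json: String, key: String): String? =
    Regex("\"" + Regex.escape(key) + "\"\\s*:\\s*\"([^\"\\\\]*)\"").find(json)?.groupValues?.get(1)

// Returns null when the output is malformed or uses values outside the schema,
// so the caller can retry, fall back, or flag the ticket for a human.
fun parseTriage(modelOutput: String): Triage? {
    val priority = stringField(modelOutput, "priority")?.lowercase() ?: return null
    val category = stringField(modelOutput, "category")?.lowercase() ?: return null
    val summary = stringField(modelOutput, "summary")?.trim() ?: return null
    if (priority !in allowedPriorities || category !in allowedCategories) return null
    if (summary.isEmpty() || summary.length > 200) return null
    return Triage(priority, category, summary)
}

// Deterministic policy layered on top of the model's proposal (example rule).
fun needsPager(t: Triage): Boolean = t.priority == "critical" && t.category == "technical"
```

The model only proposes labels. Whether anyone gets paged is decided by ordinary, testable code.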

Example prompt for structured extraction on-device:

```text
You are a strict JSON API. Given a support ticket, return:
{
  "priority": "low|medium|high|critical",
  "category": "billing|technical|account|other",
  "summary": "short one-line summary"
}

Ticket:
<paste full ticket text here>
```

Performance, cost, and privacy trade-offs

Compared with cloud-hosted LLMs, deploying Gemma 3 270M on-device changes your trade-offs in three big ways:

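- Cost: Once you’ve shipped the app or device, each inference costs you effectively nothing. There are no per-token API charges, which is huge for high-volume workloads like call-center tools or large fleets of IoT devices.

- Latency: You eliminate network round-trips and, for short prompts, can often hit sub-100 ms first-token latency on modern mobile chips, especially with INT4 QAT models. Time to first token grows with prompt length, because the whole prompt is processed before output starts, so benchmark with realistic inputs on your target devices.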

- Privacy: Sensitive data never leaves the device, which dramatically simplifies compliance for many use cases.
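To size the cost argument for your own workload, multiply request volume by tokens per request and by the provider's per-token price. The Kotlin helper below is a sketch. Take the price per million tokens from your provider's current pricing; the $0.50 figure in the usage note is purely hypothetical.

```kotlin
// Monthly cloud API spend for a text workload: requests x tokens x price.
// pricePerMillionTokens is your provider's (blended input/output) rate.
fun monthlyCloudCostUsd(
    requestsPerDay: Long,
    tokensPerRequest: Long,
    pricePerMillionTokens: Double,
    days: Int = 30,
): Double {
    val tokensPerMonth = requestsPerDay * tokensPerRequest * days
    return tokensPerMonth / 1_000_000.0 * pricePerMillionTokens
}
```

Example: 1,000,000 requests per day at 500 tokens each over 30 days is 15 billion tokens. At a hypothetical $0.50 per million tokens, `monthlyCloudCostUsd(1_000_000, 500, 0.50)` returns 7500.0. That is the monthly API bill the same workload avoids when it runs on-device.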

The main limitation is that 270M parameters are not magic: for open-ended reasoning or very complex multi-step tasks, you may still prefer to use larger models in the cloud, or combine Gemma 3 270M with a fallback cloud model only when strictly needed.
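A local-first setup with a cloud fallback can be expressed as a small router. In this Kotlin sketch, `local` and `cloud` are hypothetical hooks wrapping your on-device runtime and whatever hosted model you use, and `isAcceptable` stands for your own validation rule (for example, "parses into the expected JSON schema").

```kotlin
// Local-first generation with an optional cloud fallback (hypothetical hooks).
class HybridGenerator(
    private val local: (String) -> String,
    private val cloud: ((String) -> String)?,
    private val isAcceptable: (String) -> Boolean,
) {
    // Counts escalations so you can monitor how often the small model falls short.
    var cloudCalls = 0
        private set

    fun generate(prompt: String): String? {
        val draft = local(prompt)
        if (isAcceptable(draft)) return draft
        // Escalate only when the on-device answer fails validation.
        val remote = cloud ?: return null
        cloudCalls++
        return remote(prompt).takeIf(isAcceptable)
    }
}
```

Escalation sends the prompt off-device. Gate it behind the same privacy rules that motivated on-device inference, or pass `cloud = null` for data that must never leave the device.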

Real-world Edge AI patterns with Gemma 3 270M

Some concrete architectures you can build today:

- On-device moderation: Fine-tune Gemma 3 270M on your policy labels, deploy via MediaPipe LLM, and moderate user-generated content directly on-device before it’s even sent to the backend.

- Field-service assistants: Keep repair manuals and recent logs in the 32K context window; have the model summarize probable issues or generate checklists offline on a rugged tablet.

- Edge routing and triage: Use Gemma 3 270M at gateways to classify events and decide whether they’re worth sending to more expensive cloud processors, cutting bandwidth and cloud bills.

- Private creative tools: Story generators or email helpers that run fully offline using the instruction-tuned checkpoint.
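For patterns like the field-service assistant, the practical question is what fits in the 32K window. This minimal Kotlin sketch uses a crude estimate of about 4 characters per token (use the runtime's tokenizer for real counts) and caller-assigned priorities such as "newest log first". `Snippet`, `packContext`, and the 2,048-token reserve are hypothetical choices.

```kotlin
// A piece of context (manual section, log excerpt) with a caller-assigned priority.
data class Snippet(val label: String, val text: String, val priority: Int)

// Rough heuristic: ~4 characters per token for English text, rounded up.
fun estimateTokens(text: String): Int = (text.length + 3) / 4

// Greedy packing: highest priority first; skip snippets that do not fit
// but keep trying smaller ones further down the list.
fun packContext(snippets: List<Snippet>, budgetTokens: Int): List<Snippet> {
    val chosen = mutableListOf<Snippet>()
    var used = 0
    for (s in snippets.sortedByDescending { it.priority }) {
        val cost = estimateTokens(s.text)
        if (used + cost <= budgetTokens) {
            chosen += s
            used += cost
        }
    }
    return chosen
}

// Leave room for instructions and the answer inside the 32K (32,768-token) window.
fun contextBudget(window: Int = 32_768, reservedForPromptAndOutput: Int = 2_048): Int =
    window - reservedForPromptAndOutput
```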

Conclusion: turning Gemma 3 270M into your Edge AI workhorse

Gemma 3 270M is not just “a tiny model” from Google; it’s a deliberate attempt to make serious instruction-following AI practical on everyday devices. With roughly 0.5–1 GB of RAM usage, a 32K context window, and production-ready INT4 QAT checkpoints, it’s well positioned to reduce your dependency on cloud inference for many workloads.

To deploy it effectively for Edge AI, keep these steps in mind: start from the official checkpoints, convert to LiteRT .task bundles for mobile or GGUF for local runtimes, integrate via MediaPipe LLM or Ollama-style APIs, and design prompts around narrow, structured tasks. Do that, and you get fast, private, and cost-effective on-device AI that scales with your install base, not your cloud bill.