Building AI agents that rival proprietary models like Google’s Gemini 3 Pro just got easier with DeepSeek V3.2, released on December 1, 2025. This open-source model has 671 billion total parameters (37B active per token) and introduces “Thinking in Tool-Use,” a capability trained on agentic tasks synthesized across 1,800+ environments. According to DeepSeek’s published results as of December 2025, it matches or exceeds Gemini 3 Pro on key reasoning, math, and agentic benchmarks at a fraction of the cost. This guide walks you through V3.2’s tool-use and reasoning engine for your agent projects, from setup to advanced workflows.

Understanding DeepSeek V3.2 architecture

DeepSeek V3.2 builds on the Mixture-of-Experts (MoE) design of V3 and adds DeepSeek Sparse Attention (DSA) for efficient long-context handling up to 128K tokens. Instead of attending to every previous token, DSA uses a lightweight indexer to pick the most relevant tokens for each query and computes attention only over that subset, which cuts compute and speeds up inference with little measured quality loss. The model supports both a non-thinking mode and a thinking mode, and V3.2 now lets it call tools in the middle of its reasoning.
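To make the top-k idea concrete, here is a toy, single-head sketch of sparse causal attention in plain Python. It is not DeepSeek's DSA kernel: a plain dot product stands in for DSA's separate lightweight indexer, and the names `index_scores`, `sparse_causal_attention`, and the parameter `k` are illustrative assumptions. Each query keeps only its k highest-scoring earlier positions and runs ordinary softmax attention over them, so work per query scales with k rather than with sequence length.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def index_scores(q: Vector, keys: List[Vector]) -> List[float]:
    """Cheap relevance score per key. A plain dot product stands in for
    DSA's separate lightweight indexer (an assumption of this sketch)."""
    return [dot(q, key) for key in keys]


def sparse_causal_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector], k: int
) -> List[Vector]:
    """Single-head causal attention where each query attends only to its
    top-k visible positions (0..t) as ranked by index_scores."""
    d = len(queries[0])
    outputs: List[Vector] = []
    for t, q in enumerate(queries):
        scores = index_scores(q, keys[: t + 1])  # causal: only positions 0..t
        # Keep the k positions with the highest indexer scores.
        selected = sorted(range(t + 1), key=lambda i: scores[i], reverse=True)[:k]
        # Scaled dot-product softmax, restricted to the selected positions.
        logits = [dot(q, keys[i]) / math.sqrt(d) for i in selected]
        m = max(logits)
        weights = [math.exp(x - m) for x in logits]
        total = sum(weights)
        out = [0.0] * len(values[0])
        for w, i in zip(weights, selected):
            for j, v in enumerate(values[i]):
                out[j] += (w / total) * v
        outputs.append(out)
    return outputs
```

When k is at least the sequence length this reduces to ordinary causal attention; a smaller k trades some fidelity for less work per query, which is the trade-off DSA is designed around.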

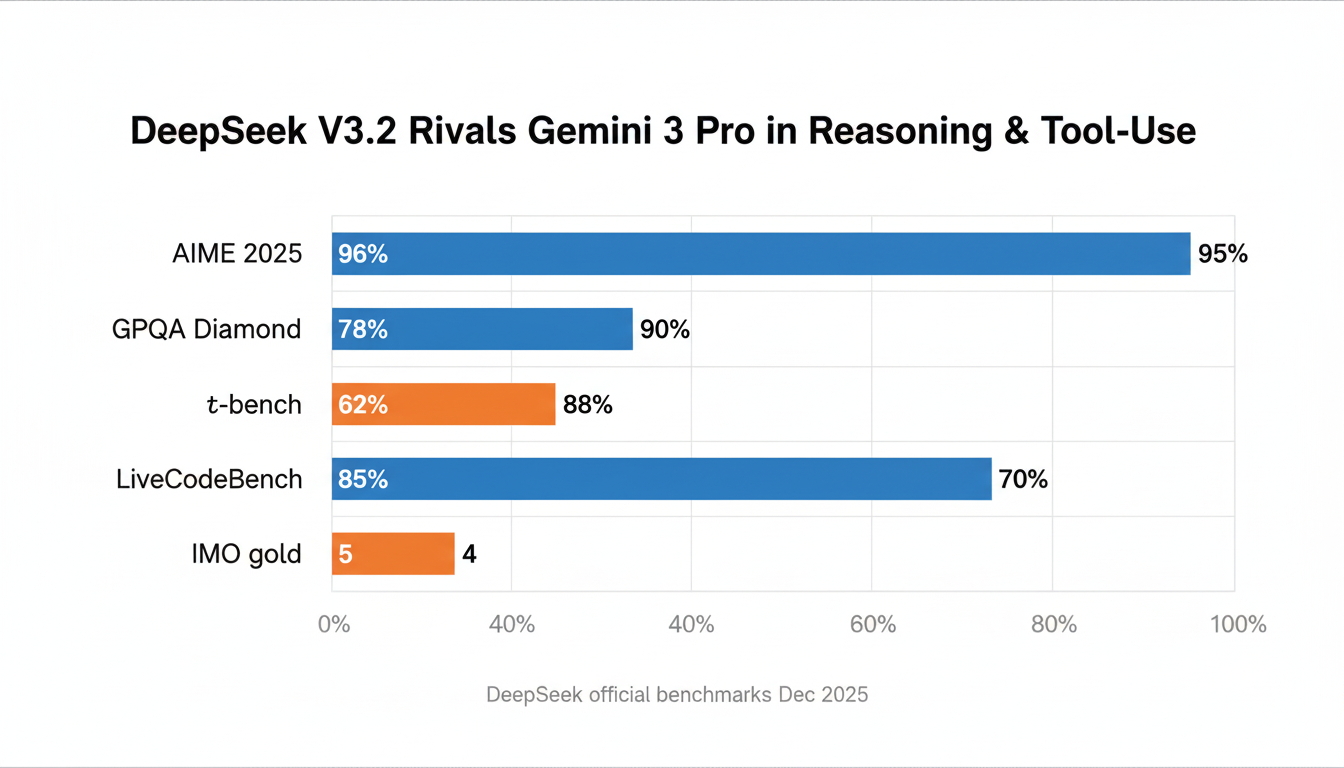

Key specs from the official release (Dec 1, 2025): 671B total parameters, an MIT license that permits commercial use, and weights on Hugging Face. The V3.2-Speciale variant pushes further: it achieved gold-medal performance at IMO 2025 and IOI 2025 and scores 96% on AIME 2025, versus 95% for Gemini 3 Pro.

For agentic workflows, DeepSeek trained the model on a large synthesized dataset of 85K+ complex instructions, aimed at seamless tool integration.

Getting started with DeepSeek V3.2

Via DeepSeek API

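Sign up at platform.deepseek.com to get a key for the OpenAI-compatible API. Pricing is per input/output token and, as with V3, low; check the pricing page for current rates. The standard endpoint serves V3.2, while V3.2-Speciale is reachable through a temporary URL until Dec 15, 2025.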

```python
from openai import OpenAI

client = OpenAI(api_key="your-api-key", base_url="https://api.deepseek.com")

response = client.chat.completions.create(
    model="deepseek-chat",  # or deepseek-reasoner for thinking mode
    messages=[{"role": "user", "content": "Solve 2x + 3 = 7"}],
)

print(response.choices[0].message.content)
```

Local deployment

Download the weights from Hugging Face (deepseek-ai/DeepSeek-V3.2) and serve them with SGLang, vLLM, or LMDeploy for FP8/BF16 inference on NVIDIA or AMD GPUs. These engines support tensor and pipeline parallelism for splitting the model across GPUs.

- git clone https://github.com/deepseek-ai/DeepSeek-V3.2-Exp (for base setup)

- pip install sglang

- python -m sglang.launch_server --model-path deepseek-ai/DeepSeek-V3.2 --host 0.0.0.0

Check the SGLang docs for a release that supports V3.2's DSA; the v0.4.1+ requirement cited for MLA optimizations dates from V3 and may not be sufficient.
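Because a 671B-parameter model does not fit on a single GPU, the launch command usually also needs a tensor-parallel degree. The helper below is a hypothetical convenience (the names `sglang_launch_argv` and `local_base_url` are ours, not SGLang's) that assembles the argv for `python -m sglang.launch_server`; the default of eight GPUs is an assumption to adjust for your hardware, and port 30000 is SGLang's default.

```python
import shlex
from typing import List


def sglang_launch_argv(
    model_path: str = "deepseek-ai/DeepSeek-V3.2",
    tp_size: int = 8,  # assumption: one node with eight GPUs
    host: str = "0.0.0.0",
    port: int = 30000,
) -> List[str]:
    """Build the argv for SGLang's server entry point (hypothetical helper)."""
    if tp_size < 1:
        raise ValueError("tp_size must be at least 1")
    return [
        "python", "-m", "sglang.launch_server",
        "--model-path", model_path,
        "--tp", str(tp_size),  # tensor-parallel degree: GPUs to shard across
        "--host", host,
        "--port", str(port),
    ]


def local_base_url(host: str = "localhost", port: int = 30000) -> str:
    """SGLang serves an OpenAI-compatible API under /v1, so an OpenAI client
    only needs this base_url instead of https://api.deepseek.com."""
    return f"http://{host}:{port}/v1"


if __name__ == "__main__":
    print(shlex.join(sglang_launch_argv()))
    print(local_base_url())
```

Keeping the command as a list avoids shell-quoting mistakes if you later pass it to `subprocess.run`.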

Mastering tool-use and reasoning

V3.2’s headline feature is “Thinking in Tool-Use”: it is DeepSeek’s first model to interleave chain-of-thought reasoning with tool calls, and tool use works in both thinking and non-thinking modes. Trained on synthetic agentic data from 1,800+ environments, it performs well on agentic tasks such as multi-step planning.

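Enable it through the API by passing a tools parameter. Strict JSON-schema validation of tool arguments is available in beta; DeepSeek's docs enable beta features through the https://api.deepseek.com/beta base URL.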

```python
tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get weather for location",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}]

# `client` is the OpenAI client created in the API example above.
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=[{"role": "user", "content": "Weather in Hangzhou?"}],
    tools=tools,
)
```

The model responds with a tool call instead of text. Execute the call yourself and send the result back as a message with the tool role. For thinking mode, use model="deepseek-reasoner" with the same tools parameter.
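The execute-and-feed-back step can be sketched as follows. This is a minimal illustration, not DeepSeek's reference code: `dispatch_tool_calls`, `check_arguments`, and the local `get_weather` stub are hypothetical, and the fake message built with `SimpleNamespace` only mimics the shape of the OpenAI SDK's `message.tool_calls` entries (`id`, `function.name`, and `function.arguments` as a JSON string). The argument check mirrors the schema's `required` and `additionalProperties: False` rules on the client side; it is not a substitute for the API's strict mode.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List

# Same parameter schema as the get_weather tool definition above.
WEATHER_SCHEMA = {
    "type": "object",
    "properties": {"location": {"type": "string"}},
    "required": ["location"],
    "additionalProperties": False,
}


def get_weather(location: str) -> str:
    """Hypothetical local implementation; a real agent would call a weather API."""
    return json.dumps({"location": location, "forecast": "sunny", "temp_c": 22})


def check_arguments(args: Dict[str, Any], schema: Dict[str, Any]) -> None:
    """Client-side check for required keys and extra keys (not full JSON Schema)."""
    missing = [key for key in schema.get("required", []) if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    if schema.get("additionalProperties") is False:
        extra = set(args) - set(schema.get("properties", {}))
        if extra:
            raise ValueError(f"unexpected arguments: {sorted(extra)}")


def dispatch_tool_calls(
    message: Any,
    registry: Dict[str, Callable[..., str]],
    schemas: Dict[str, Dict[str, Any]],
) -> List[Dict[str, str]]:
    """Run each requested tool locally and return one tool-role message per call."""
    results = []
    for call in message.tool_calls or []:  # tool_calls is None when there are none
        name = call.function.name
        args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
        check_arguments(args, schemas[name])
        output = registry[name](**args)
        results.append({"role": "tool", "tool_call_id": call.id, "content": output})
    return results


# Fake assistant message shaped like response.choices[0].message.
fake_message = SimpleNamespace(
    tool_calls=[
        SimpleNamespace(
            id="call_0",
            function=SimpleNamespace(name="get_weather", arguments='{"location": "Hangzhou"}'),
        )
    ]
)

tool_messages = dispatch_tool_calls(
    fake_message, {"get_weather": get_weather}, {"get_weather": WEATHER_SCHEMA}
)
print(tool_messages[0]["content"])
```

In a real exchange, append the assistant message itself to `messages`, extend the list with `tool_messages`, and call `client.chat.completions.create` again with the same `tools` so the model can write its final answer.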

DeepSeek V3.2 vs Gemini 3 Pro: Benchmarks

As of Dec 2025, V3.2 closes the gap to Gemini 3 Pro, Google’s latest model (released Nov 2025). DeepSeek’s official benchmarks show parity or leads on agentic and reasoning tasks.

| Benchmark | DeepSeek V3.2 / Speciale | Gemini 3 Pro |
|---|---|---|
| AIME 2025 (Pass@1) | 96.0% | 95.0% |
| τ-Bench (Agentic Tool-Use) | 85.4% | ~84% |
| LiveCodeBench (Coding) | Top open-source | Competitive |
| IMO 2025 | Gold medal | High performer |
| Context Length | 128K | 1M (preview) |

V3.2’s advantages are cost (an estimated 10-25x cheaper inference) and open-source flexibility for building custom agents.
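A cost multiple depends heavily on a workload's mix of input and output tokens, so it is worth recomputing for your own traffic. Here is a small calculator as a sketch; the per-million-token prices are hypothetical placeholders, not either provider's published rates, so substitute current numbers from each pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens."""
    input_per_m: float
    output_per_m: float


def workload_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Total USD cost of a workload with the given token counts."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


# HYPOTHETICAL placeholder prices, not published rates for either model.
open_model = Pricing(input_per_m=0.25, output_per_m=0.50)
proprietary = Pricing(input_per_m=2.00, output_per_m=12.00)

# Two illustrative workloads: (input tokens, output tokens).
workloads = {
    "input-heavy agent (long tool transcripts)": (50_000_000, 5_000_000),
    "output-heavy agent (long generated reports)": (5_000_000, 50_000_000),
}

for name, (tokens_in, tokens_out) in workloads.items():
    cheap = workload_cost(open_model, tokens_in, tokens_out)
    pricey = workload_cost(proprietary, tokens_in, tokens_out)
    print(f"{name}: ${cheap:.2f} vs ${pricey:.2f} ({pricey / cheap:.1f}x)")
```

Even with fixed prices, the ratio moves substantially between the two workloads, so check any headline multiple against your own usage.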

Building agentic workflows

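For production agents, chain tools (e.g., weather + calendar) and rely on the model’s RL-trained reasoning, which is distilled from DeepSeek-R1. Test against complex multi-step instructions similar to the 85K+ synthetic ones used in training. Limitations: V3.2-Speciale is API-only and does not support tool calls yet; watch for updates.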
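Chaining tools comes down to a loop: call the model, run whatever tools it requests, append the results, and repeat until it answers in plain text. Below is a minimal sketch under stated assumptions: `run_agent`, the `get_weather` and `add_calendar_event` tools, and the scripted `fake_model` are all hypothetical, and messages are plain dicts in the OpenAI-compatible chat format. In a real agent, `call_model` would wrap `client.chat.completions.create(model=..., messages=..., tools=...)` and convert `response.choices[0].message` to a dict (for example with `model_dump(exclude_none=True)`).

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def get_weather(location: str) -> str:
    """Hypothetical tool stub."""
    return json.dumps({"location": location, "forecast": "rain"})


def add_calendar_event(title: str, date: str) -> str:
    """Hypothetical tool stub."""
    return json.dumps({"status": "created", "title": title, "date": date})


TOOLS: Dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "add_calendar_event": add_calendar_event,
}


def run_agent(
    call_model: Callable[[List[Message]], Message],
    messages: List[Message],
    max_steps: int = 5,
) -> str:
    """Alternate between the model and local tools until it returns plain text."""
    for _ in range(max_steps):
        reply = call_model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]
        for call in tool_calls:
            fn = call["function"]
            output = TOOLS[fn["name"]](**json.loads(fn["arguments"]))
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": output})
    raise RuntimeError("agent did not finish within max_steps")


def _tool_call(call_id: str, name: str, args: Dict[str, Any]) -> Dict[str, Any]:
    """Build a tool call in the OpenAI-compatible dict shape."""
    return {"id": call_id, "type": "function",
            "function": {"name": name, "arguments": json.dumps(args)}}


def fake_model(messages: List[Message]) -> Message:
    """Scripted stand-in for the API: weather first, then calendar, then answer."""
    n_tool_results = sum(1 for m in messages if m["role"] == "tool")
    if n_tool_results == 0:
        return {"role": "assistant", "content": None,
                "tool_calls": [_tool_call("c1", "get_weather", {"location": "Hangzhou"})]}
    if n_tool_results == 1:
        return {"role": "assistant", "content": None,
                "tool_calls": [_tool_call("c2", "add_calendar_event",
                                          {"title": "Bring umbrella", "date": "2025-12-02"})]}
    return {"role": "assistant", "content": "Rain expected; reminder added."}


history: List[Message] = [{"role": "user", "content": "Check Hangzhou weather and plan tomorrow."}]
print(run_agent(fake_model, history))
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools indefinitely.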

“DeepSeek-V3.2 is our first model to integrate thinking directly into tool-use, covering 1,800+ environments.”

DeepSeek API Docs, Dec 1, 2025

Real-world uses include automated research (a search tool plus reasoning) and coding agents (40%+ on LiveCodeBench).

Conclusion

DeepSeek V3.2 democratizes frontier AI: the weights are on Hugging Face, the API is live, and its thinking modes bring reasoning into tool use. Key takeaways: 1) DSA for efficiency, 2) agent training across 1,800+ environments, 3) performance rivaling Gemini 3 Pro at lower cost, 4) open-source flexibility for custom agents. Next steps: experiment with the API, fine-tune locally, and build multi-tool chains. Track updates at deepseek.com; V3.2 positions open models to lead agentic workflows in 2026.