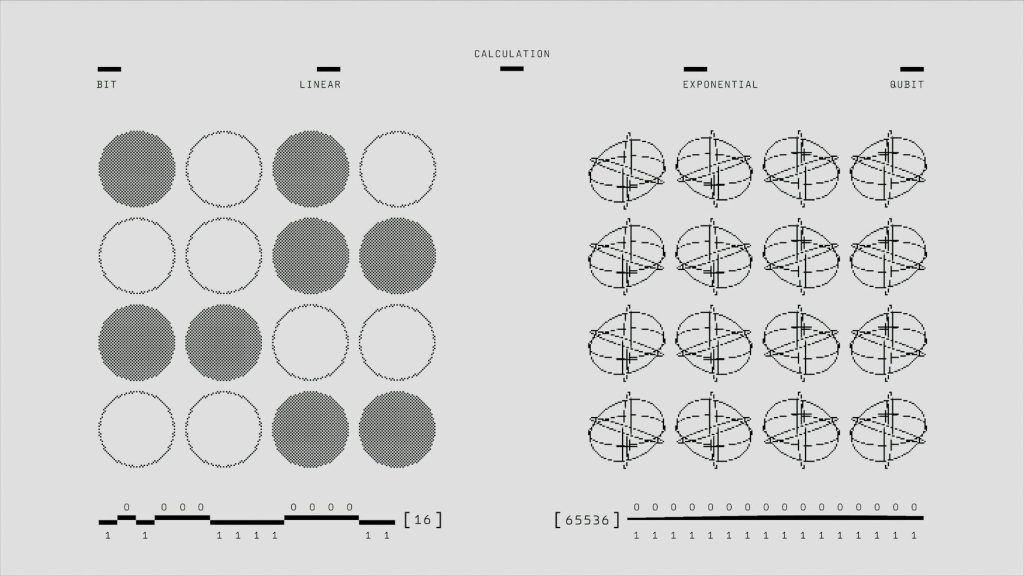

Large language models (LLMs) are changing quickly, and each major vendor is pushing in a different direction. For developers, researchers, and businesses, understanding how these models differ, particularly in how they respond to prompts, is essential to getting good results from them. As of November 2025, the leading models from OpenAI, Google, Meta, and Anthropic each have distinct capabilities that shape their best use cases. This article compares prompting strategies and core characteristics across GPT-5.1, Gemini 2.5 Pro, Llama 4 (Scout and Maverick), and Claude Sonnet 4.5, with a focus on how to use each model’s design to get better output.

OpenAI’s GPT: advanced reasoning and personalization

OpenAI continues to innovate with its Generative Pre-trained Transformer (GPT) series, most recently with the release of GPT-5 (August 7, 2025) and its iterative update, GPT-5.1 (November 12, 2025). GPT-5.1 introduces two key variants: GPT-5.1 Instant and GPT-5.1 Thinking. The “Instant” model is designed for conversational fluency, exhibiting a warmer default tone and improved instruction following. It’s built to surprise users with playfulness while remaining highly useful.

GPT-5.1 Thinking, conversely, is OpenAI’s advanced reasoning model. It dynamically adjusts its “thinking time” based on prompt complexity, providing more thorough answers for difficult requests and quicker responses for simpler ones. This adaptive reasoning, a notable advancement, allows the model to “think before responding,” leading to more accurate results in complex tasks like math and coding, as evidenced by significant improvements on benchmarks like AIME 2025 and Codeforces.

    # Example of a complex prompt for GPT-5.1 Thinking
    # Requesting multi-step problem solving
    prompt = """
    Analyze the following Python codebase, identify potential security vulnerabilities related to SQL injection and cross-site scripting (XSS) in user input handling, and propose refactored code snippets to mitigate these risks.
    Focus on the `user_auth.py` and `data_processing.py` files.

    # File: user_auth.py
    import sqlite3
    from flask import Flask, request, jsonify

    app = Flask(__name__)
    DATABASE = 'users.db'

    def get_db():
        conn = sqlite3.connect(DATABASE)
        conn.row_factory = sqlite3.Row
        return conn

    @app.route('/login', methods=['POST'])
    def login():
        username = request.json['username']
        password = request.json['password']
        conn = get_db()
        cursor = conn.cursor()
        # Vulnerable to SQL injection
        cursor.execute(f"SELECT * FROM users WHERE username = '{username}' AND password = '{password}'")
        user = cursor.fetchone()
        conn.close()
        if user:
            return jsonify({"message": "Login successful", "user": dict(user)}), 200
        return jsonify({"message": "Invalid credentials"}), 401

    # File: data_processing.py
    from flask import Flask, request, render_template_string

    app = Flask(__name__)

    @app.route('/display_comment', methods=['GET'])
    def display_comment():
        comment = request.args.get('comment', '')
        # Vulnerable to XSS
        html_template = f"<h1>Your Comment</h1><p>{comment}</p>"
        return render_template_string(html_template)

    Provide:
    1. A detailed explanation of each vulnerability found.
    2. The specific lines of code where the vulnerabilities exist.
    3. Refactored code snippets using parameterized queries for SQL injection and proper HTML escaping for XSS.
    4. A brief explanation of why the refactored code mitigates the risks.
    """
    # In a real scenario, this prompt would be sent to the GPT-5.1 Thinking API.
    # The model would analyze the code and return the detailed, structured response.
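
The two mitigations that the prompt asks for can be sketched briefly. This is a minimal illustration under stated assumptions, not the output of any model: `find_user` and `render_comment` are hypothetical helper names, the in-memory table holds made-up demo data, and the plaintext `password` column is kept only to mirror the vulnerable example (a real system would store password hashes).

```python
import html
import sqlite3


def find_user(conn: sqlite3.Connection, username: str, password: str):
    """Look up a user with a parameterized query instead of string formatting."""
    # The "?" placeholders make sqlite3 treat the values strictly as data,
    # so quotes inside the input cannot change the SQL statement.
    cursor = conn.execute(
        "SELECT * FROM users WHERE username = ? AND password = ?",
        (username, password),
    )
    return cursor.fetchone()


def render_comment(comment: str) -> str:
    """Build the comment page with the user-supplied text HTML-escaped."""
    return f"<h1>Your Comment</h1><p>{html.escape(comment)}</p>"


# Demo against an in-memory database (hypothetical schema and data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "s3cret"))

injection_attempt = find_user(conn, "alice' --", "anything")
legitimate_login = find_user(conn, "alice", "s3cret")
escaped_page = render_comment("<script>alert(1)</script>")
```

With the placeholder query, the classic `alice' --` input is compared as a literal username and matches nothing, while the escaped page contains `&lt;script&gt;` rather than an executable tag.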

GPT-5.1 also emphasizes user customization, offering refined presets for tone (e.g., Professional, Friendly, Candid, Quirky) and experimental granular controls for conciseness, warmth, and emoji usage. This level of steerability is critical for applications requiring specific communication styles or brand voices. OpenAI has also focused on safety, significantly reducing hallucinations and sycophancy, aiming for a more reliable and honest AI interaction.
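
For API-driven applications, one way to approximate this kind of steerability is to compose style instructions into a system message. The sketch below assumes nothing about OpenAI’s own implementation: `StyleSettings`, `build_style_instructions`, and the wording attached to each preset are hypothetical, and only the preset names come from the text above.

```python
from dataclasses import dataclass

# Hypothetical wording for each preset; only the preset names are from the article.
PRESETS = {
    "professional": "Use a polished, precise tone.",
    "friendly": "Use a warm, conversational tone.",
    "candid": "Be direct and do not soften critical feedback.",
    "quirky": "Use a playful, imaginative tone.",
}


@dataclass
class StyleSettings:
    preset: str = "professional"
    concise: bool = False
    allow_emoji: bool = False


def build_style_instructions(settings: StyleSettings) -> str:
    """Turn style settings into a single system-message string."""
    key = settings.preset.lower()
    if key not in PRESETS:
        raise ValueError(f"unknown preset: {settings.preset!r}")
    parts = [PRESETS[key]]
    if settings.concise:
        parts.append("Keep answers brief.")
    else:
        parts.append("Explain in as much detail as the question needs.")
    parts.append("Emoji are allowed." if settings.allow_emoji else "Do not use emoji.")
    return " ".join(parts)


system_message = build_style_instructions(
    StyleSettings(preset="Friendly", concise=True)
)
```

Keeping the style in one generated string makes it easy to hold a brand voice constant across prompts while the task-specific part of the prompt changes.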

Google’s Gemini: multimodal intelligence and scalable efficiency

Google’s Gemini family, particularly the Gemini 2.5 series, reflects Google’s push for multimodal capability and optimized performance (Gemini 2.5 Pro and Flash were updated in June 2025, and Flash-Lite in July 2025). Gemini 2.5 Pro is the most advanced “thinking model” in the series, designed for complex problems in coding, math, and STEM. It is well suited to analyzing large datasets and codebases, with an input token limit of 1,048,576 tokens (about 1 million) for `gemini-2.5-pro`.

A significant strength of Gemini 2.5 Pro is its native multimodality, enabling it to process and understand inputs across text, audio, images, and video. This makes it highly versatile for applications requiring rich contextual understanding from diverse data sources. For instance, it can generate code from image descriptions or interpret video content to answer questions. Its “thinking” capability allows it to reason through problems before responding, enhancing the quality of its output for intricate tasks.

    # Example prompt for Gemini 2.5 Pro for multimodal analysis
    # Assuming an image of a complex circuit diagram is provided
    # and a text description of a fault symptom
    prompt_text = """
    Analyze the provided circuit diagram (image input). The text input describes a symptom: "The voltage across R2 is unexpectedly low."
    Based on the diagram and symptom, identify the most probable component failure or wiring issue that would cause this symptom.
    Explain your reasoning step-by-step, referencing specific components in the diagram.
    """
    # In an actual API call, the image would be passed as part of the multimodal input.
    # Gemini 2.5 Pro would then combine visual and textual understanding to diagnose the issue.
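
To make “passed as part of the multimodal input” more concrete, the sketch below pairs the text prompt with base64-encoded image bytes in a list of parts. The part layout (`type`, `mime_type`, `data`) and the helper name `build_multimodal_parts` are assumptions for illustration only; they are not the Gemini API schema, so the vendor SDK documentation remains the reference for the real request format.

```python
import base64


def build_multimodal_parts(prompt_text: str, image_bytes: bytes,
                           mime_type: str = "image/png") -> list:
    """Pair a text instruction with an image in one ordered list of parts."""
    return [
        # Text first, so the instruction frames how the image should be read.
        {"type": "text", "text": prompt_text.strip()},
        {
            "type": "image",
            "mime_type": mime_type,
            # Binary data is base64-encoded so it can travel inside JSON.
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
    ]


prompt_text = """
Analyze the provided circuit diagram (image input).
The symptom is: "The voltage across R2 is unexpectedly low."
"""

# Placeholder bytes standing in for a real PNG file read from disk.
fake_image = b"\x89PNG\r\n\x1a\n placeholder bytes, not a real image"
parts = build_multimodal_parts(prompt_text, fake_image)
```

Stating in the text part what the image contains and how it relates to the symptom is what the prompt above does; the structure simply keeps the two inputs together in a defined order.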

Gemini 2.5 Flash and Flash-Lite models are optimized for speed and cost-efficiency, suitable for high-volume, low-latency tasks. Flash is well-rounded for agentic use cases and large-scale processing, while Flash-Lite focuses on ultra-fast responses and throughput. These models, with a knowledge cutoff of January 2025, offer robust function calling, structured outputs, and the ability to be grounded with tools like Google Maps and Google Search, making them powerful for building interactive and data-aware AI applications.
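
Function calling and structured outputs generally follow the same pattern regardless of vendor: the application declares a tool with a schema, the model returns a structured call, and the application validates and executes it. The sketch below illustrates that pattern with plain Python. The tool `find_nearby_places`, its schema, and the JSON shape of the model output are hypothetical stand-ins, not the Gemini API or a Google Maps integration.

```python
import json

# Hypothetical tool declaration in a JSON-Schema-like shape.
TOOL_SPEC = {
    "name": "find_nearby_places",
    "description": "Return places matching a query within a radius.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "radius_km": {"type": "number"},
        },
        "required": ["query"],
    },
}


def find_nearby_places(query: str, radius_km: float = 1.0) -> dict:
    """Stub implementation; a real tool would call a places service."""
    return {"query": query, "radius_km": radius_km, "results": []}


REGISTRY = {"find_nearby_places": find_nearby_places}


def dispatch(model_output: str) -> dict:
    """Parse a structured tool call, validate it, and run the tool."""
    call = json.loads(model_output)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    missing = [k for k in TOOL_SPEC["parameters"]["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return REGISTRY[name](**args)


# A structured call as a model might emit it (written by hand here).
result = dispatch('{"name": "find_nearby_places", "arguments": {"query": "coffee"}}')
```

Validating the call before executing it matters because the model output is untrusted input from the point of view of the application.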

Meta’s Llama: open-weight multimodality and context mastery

Meta’s Llama series, particularly Llama 4 Scout and Llama 4 Maverick (released April 5, 2025), represents Meta’s commitment to open-weight multimodal AI. These models are the first in the Llama family to natively support multimodality and utilize a Mixture-of-Experts (MoE) architecture, providing a balance of performance and efficiency.

Llama 4 Scout, a 17 billion active parameter model, boasts an industry-leading context window of 10 million tokens. This massive context length is a game-changer for tasks involving extensive documentation, multi-document summarization, or reasoning over vast codebases. It enables the model to maintain coherence and draw insights from incredibly long inputs, a crucial feature for enterprise-level applications and complex research.

    # Example prompt for Llama 4 Scout for long-context summarization
    prompt = """
    Summarize the key findings, methodologies, and conclusions from the following five research papers on climate change impacts on biodiversity.
    Ensure your summary synthesizes information across all papers, highlighting any conflicting or reinforcing data points.
    Provide specific examples of species and regions mentioned in each paper.

    [Paper 1 Content - ~2 million tokens]
    [Paper 2 Content - ~1.8 million tokens]
    [Paper 3 Content - ~2.5 million tokens]
    [Paper 4 Content - ~1.5 million tokens]
    [Paper 5 Content - ~2.2 million tokens]
    """
    # The five inputs total about 10M tokens, which sits right at the edge of
    # Llama 4 Scout's 10M-token context window. In practice, leave headroom
    # for the instructions and the response.
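
Before sending a prompt of this size, it helps to add up the token budget. The sketch below uses the approximate per-paper sizes from the example and the 10M-token window quoted for Scout; the amounts reserved for instructions and output are assumed values chosen for illustration, and `context_headroom` is a hypothetical helper name.

```python
CONTEXT_WINDOW = 10_000_000  # Scout's context window as quoted in the article

# Approximate sizes taken from the example prompt above.
paper_tokens = {
    "Paper 1": 2_000_000,
    "Paper 2": 1_800_000,
    "Paper 3": 2_500_000,
    "Paper 4": 1_500_000,
    "Paper 5": 2_200_000,
}


def context_headroom(doc_tokens: dict, window: int,
                     instructions: int = 2_000, output: int = 8_000) -> int:
    """Return tokens left after documents, instructions, and reserved output.

    A negative result means the request does not fit in the window.
    The default reservations are illustrative assumptions.
    """
    return window - (sum(doc_tokens.values()) + instructions + output)


total_document_tokens = sum(paper_tokens.values())
headroom = context_headroom(paper_tokens, CONTEXT_WINDOW)
fits = headroom >= 0
```

With these numbers the documents alone add up to the full window, so the request only fits if one input is trimmed or summarized first.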

Llama 4 Maverick also has 17 billion active parameters but uses 128 experts, and it targets strong image and text understanding at a competitive price point. It is designed as a product workhorse for general assistant and chat use cases, with particular strengths in precise image understanding and creative writing. Both models were distilled from Llama 4 Behemoth, a teacher model with 288 billion active parameters that Meta described as still in training when Scout and Maverick were released.
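
The distinction between active parameters and the number of experts comes from how a Mixture-of-Experts layer routes each token to a small subset of experts. The toy sketch below shows generic top-k gating in plain Python. It is not Meta’s implementation, the experts are trivial scaling functions, and the gate scores are random placeholders; only the figure of 128 experts comes from the text.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=1):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, gate_scores, k=1):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


NUM_EXPERTS = 128
# Toy experts: expert i multiplies its input by i.
experts = [lambda x, scale=i: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
gate_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

weights = route_top_k(gate_scores, k=1)
output = moe_forward(2.0, experts, gate_scores, k=1)
active_fraction = len(weights) / NUM_EXPERTS
```

Because only the selected experts run for a given token, compute per token tracks the active parameter count rather than the total size of the model.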

Meta has also made significant strides in addressing model bias, with Llama 4 showing reduced refusal rates on debated political and social topics and a more balanced perspective compared to previous versions. This commitment to fairness and neutrality is vital for broad application and public trust.

Anthropic’s Claude Sonnet: agentic capabilities and precision

Anthropic’s Claude Sonnet 4.5, released on September 29, 2025, has positioned itself as a top-tier model for agentic workloads, coding, and computer use. It boasts superior instruction following, tool selection, error correction, and advanced reasoning, making it ideal for building complex AI agents and automating intricate workflows.

Sonnet 4.5’s strengths lie in its ability to produce accurate and detailed responses for long-running tasks, with enhanced domain knowledge in areas like coding, finance, and cybersecurity. It excels at the entire software development lifecycle, from planning to debugging and refactoring, and can generate up to 64,000 output tokens, which is particularly useful for rich code generation.

    # Example prompt for Claude Sonnet 4.5 for agentic coding
    prompt = """
    As an AI agent, your goal is to refactor the provided JavaScript frontend application to improve its performance and maintainability.
    Specifically:
    1. Identify opportunities to optimize DOM manipulation.
    2. Suggest using a modern framework (e.g., React or Vue.js) for component-based architecture, and provide a small example of a refactored component.
    3. Ensure all user input fields are properly sanitized to prevent XSS.

    HTML page:
    <!DOCTYPE html>
    <html>
    <head>
      <title>Legacy App</title>
    </head>
    <body>
      <div id="app"></div>
      <script src="app.js"></script>
    </body>
    </html>

    app.js:
    document.addEventListener('DOMContentLoaded', () => {
      const appDiv = document.getElementById('app');
      let items = ['Item 1', 'Item 2'];

      function renderList() {
        appDiv.innerHTML = ''; // Heavy DOM manipulation
        const ul = document.createElement('ul');
        items.forEach(itemText => {
          const li = document.createElement('li');
          li.textContent = itemText;
          ul.appendChild(li);
        });
        appDiv.appendChild(ul);

        const input = document.createElement('input');
        input.type = 'text';
        input.id = 'newItem';
        appDiv.appendChild(input);

        const addButton = document.createElement('button');
        addButton.textContent = 'Add Item';
        addButton.onclick = () => {
          const newItemText = document.getElementById('newItem').value;
          items.push(newItemText);
          renderList(); // Re-renders entire list
        };
        appDiv.appendChild(addButton);
      }

      renderList();
    });

    Provide the refactored `app.js` using React, and explain the optimizations.
    """
    # Claude Sonnet 4.5, with its agentic capabilities, would be expected to plan and execute this task step by step.
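
Tool selection and error correction are easier to reason about with the surrounding loop in view. The sketch below is a minimal agent loop in which a scripted function stands in for the model: it first requests a wrong file path, sees the error in the transcript, and retries with the right one. Everything here (`run_agent`, `scripted_model`, `read_file`, the in-memory file table) is hypothetical scaffolding, not Anthropic’s API or agent framework.

```python
APP_JS = "document.addEventListener('DOMContentLoaded', () => { /* ... */ });"
FILES = {"app.js": APP_JS}  # in-memory stand-in for a project directory


def read_file(path: str) -> str:
    """Tool: return file contents or raise if the path does not exist."""
    if path not in FILES:
        raise FileNotFoundError(f"no such file: {path}")
    return FILES[path]


TOOLS = {"read_file": read_file}


def scripted_model(history: list) -> dict:
    """Stand-in for a model call: choose the next action from the transcript."""
    if not history:
        return {"tool": "read_file", "input": "src/app.js"}  # wrong path
    last = history[-1]
    if last["status"] == "error":
        return {"tool": "read_file", "input": "app.js"}  # corrected retry
    return {"final": f"Read {len(last['output'])} characters; ready to refactor."}


def run_agent(model, tools: dict, max_steps: int = 5):
    """Alternate between model decisions and tool calls until a final answer."""
    history = []
    for _ in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"], history
        try:
            tool = tools[action["tool"]]
            output = tool(action["input"])
            history.append({"action": action, "status": "ok", "output": output})
        except Exception as exc:
            # Errors go back into the transcript so the next step can react.
            history.append({"action": action, "status": "error", "output": str(exc)})
    return "Stopped: step limit reached.", history


answer, history = run_agent(scripted_model, TOOLS)
```

Feeding tool errors back into the transcript, along with a step limit, is the part of the loop that a detailed agentic prompt should describe explicitly.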

For computer use, Sonnet 4.5 demonstrates leadership on benchmarks like OSWorld, indicating its prowess in handling browser-based tasks from competitive analysis to procurement workflows. Its ability to offer near-instant responses or engage in “extended thinking” provides flexibility, allowing users to prioritize speed or depth based on the task at hand. Anthropic also emphasizes safety, with Sonnet 4.5 being their most aligned frontier model, demonstrating significant improvements in reducing sycophancy, deception, and power-seeking behaviors, alongside strong defenses against prompt injection attacks.

Comparative analysis: prompting across the frontier

Although all of these models aim for broad general capability, their specific strengths and ideal prompting strategies diverge. The choice of model usually depends on the task’s primary requirement: deep reasoning for complex problem-solving, multimodal understanding, very long context processing, or reliable agentic execution.

| Feature/Model | OpenAI GPT-5.1 | Google Gemini 2.5 Pro | Meta Llama 4 (Scout/Maverick) | Anthropic Claude Sonnet 4.5 |
|---|---|---|---|---|
| Latest Version (Date) | GPT-5.1 (Nov 2025) | Gemini 2.5 Pro (June 2025) | Llama 4 (Apr 2025) | Sonnet 4.5 (Sep 2025) |
| Primary Strength | Adaptive reasoning, personalized conversational style, coding, health | Multimodal understanding, complex problem solving, coding, large datasets | Open-weight, long-context (Scout), efficient multimodal (Maverick), bias reduction | Agentic capabilities, coding, computer use, financial analysis, cybersecurity, precision |
| Key Feature/Capability | GPT-5.1 Instant (conversational), GPT-5.1 Thinking (adaptive reasoning), reduced hallucinations/sycophancy | Native multimodality (text, audio, image, video), 1M token input, thinking model, function calling | 10M token context (Scout), MoE architecture, improved visual encoder, reduced bias | Superior instruction following, tool selection, error correction, 64K output tokens, extended thinking |
| Context Window (Input) | Varies by variant (Instant vs. Thinking) and product surface | 1,048,576 tokens (Pro) | 10,000,000 tokens (Scout) | 200,000 tokens (standard) |
| Multimodality | GPT-5 offers strong visual perception | Natively multimodal (text, image, video, audio) | Natively multimodal (text, image, video) | Text and image input; vision also underpins computer use tasks |
| Coding Performance | Strong (SWE-bench Verified, Aider Polyglot) | Excellent (LiveCodeBench, Aider Polyglot, complex web apps) | Competitive (Maverick comparable to DeepSeek v3) | Leading (SWE-bench Verified, 77.2%) |
| Prompting Strategy Focus | Clear instructions, leverage “thinking” for complex tasks, customize tone | Structured input for multimodal context, explicit task decomposition for agents | Leverage long context for summarization/analysis, precise multimodal queries | Detailed multi-step instructions for agents, tool use, error handling in prompts |
| Pricing Model (Approx.) | GPT-5.1 API: $1.25/1M input, $10/1M output | Gemini 2.5 Pro: $1.25/1M input, $10/1M output (non-caching, prompts up to 200K tokens) | Open-weight (free to use, deployment costs apply) | Sonnet 4.5: $3/1M input, $15/1M output |
| Knowledge Cutoff | Not stated here for GPT-5.1 (Aug 2025 is GPT-5’s release date, not its cutoff) | Jan 2025 (Pro, Flash, Flash-Lite) | Aug 2024 (per Meta’s Llama 4 model card) | Fixed training cutoff, not continuously updated; see Anthropic’s model documentation |
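
Per-token prices are easier to compare once they are applied to a concrete request. The sketch below uses the approximate Gemini 2.5 Pro and Claude Sonnet 4.5 rates from the pricing row; the dictionary keys are labels chosen for this example rather than official model identifiers, and actual bills depend on caching, prompt-length tiers, and current vendor pricing.

```python
# (input, output) price in USD per 1M tokens, taken from the table above.
PRICES_PER_MILLION = {
    "gemini-2.5-pro": (1.25, 10.00),
    "claude-sonnet-4.5": (3.00, 15.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of one request at list prices."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens / 1_000_000) * input_price \
        + (output_tokens / 1_000_000) * output_price


# Example request: 100K tokens in, 10K tokens out.
costs = {
    model: estimate_cost(model, input_tokens=100_000, output_tokens=10_000)
    for model in PRICES_PER_MILLION
}
```

For this request shape the estimate comes to about $0.23 for Gemini 2.5 Pro and $0.45 for Sonnet 4.5, which shows how quickly output-heavy workloads shift the comparison toward the output price.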

Prompting these models effectively often involves more than just asking a question. For GPT-5.1, explicitly signaling the need for “thinking” or leveraging its customizable conversational styles can significantly improve outcomes. Gemini 2.5 Pro benefits from well-structured multimodal inputs that clearly define the relationships between different data types. Llama 4 Scout, with its vast context window, thrives on comprehensive, detailed inputs that allow it to synthesize information from massive documents. Claude Sonnet 4.5, being highly agentic, responds best to detailed, step-by-step instructions that outline tasks, sub-tasks, and desired tool interactions, especially in coding and computer use scenarios.

Conclusion

The AI landscape of late 2025 is characterized by increasingly sophisticated LLMs, each bringing unique strengths to the table. OpenAI’s GPT-5.1 excels in adaptive reasoning and user personalization, Google’s Gemini 2.5 Pro leads in multimodal understanding and scalable efficiency, Meta’s Llama 4 offers open-weight multimodality with unparalleled context lengths, and Anthropic’s Claude Sonnet 4.5 stands out for its agentic capabilities and precision in complex tasks like coding and computer control.

For practitioners, the key takeaway is that no single model is universally “best.” The optimal choice depends on the specific application, balancing factors like task complexity, required context window, multimodality needs, and cost efficiency. By understanding the distinct architectures and strengths of GPT-5.1, Gemini 2.5 Pro, Llama 4, and Claude Sonnet 4.5, developers and businesses can craft more effective prompts and unlock the full potential of these frontier AI models to drive innovation and solve real-world problems.

- **Explore Model Documentation:** Always refer to the latest official documentation for each model to understand specific API parameters, context limits, and pricing.

- **Experiment with Prompting Techniques:** Practice different prompting strategies, including few-shot prompting, chain-of-thought, and role-playing, to discover what works best for your specific use cases.

- **Leverage Multimodal Inputs:** For models like Gemini and Llama, integrate image, audio, or video inputs where relevant to enrich the context and improve response quality.

- **Consider Agentic Workflows:** For complex, multi-step tasks, investigate the agentic capabilities of models like Claude Sonnet 4.5 and GPT-5.1 Thinking, which are designed for robust execution.

- **Stay Updated:** The AI field is dynamic. Regularly check for new model releases, updates, and research findings to keep your applications at the cutting edge.
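
As a starting point for experimenting with the techniques listed above, few-shot prompting can be reduced to a small template function. The sketch below is model-agnostic; `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sentiment examples are illustrative assumptions rather than a format required by any of the models discussed.

```python
def build_few_shot_prompt(task: str, examples: list, query: str) -> str:
    """Assemble a task description, worked examples, and the new input."""
    lines = [task.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End on an open "Output:" so the model completes the pattern.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


few_shot_prompt = build_few_shot_prompt(
    task="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("Setup took two minutes and it just works.", "positive"),
        ("The screen cracked on the first day.", "negative"),
    ],
    query="The battery died after a week.",
)
```

The same function can be reused across models, which makes it straightforward to compare how each one responds to an identical set of examples.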

The future of AI will undoubtedly see continued convergence and specialization. As these models evolve, the ability to discern their unique strengths and apply targeted prompting strategies will be paramount for maximizing their transformative impact.

Image by: Google DeepMind https://www.pexels.com/@googledeepmind