A 2025 guide comparing OCR and document AI stacks. As of November 2025, DeepSeek-OCR and Cohere’s latest multimodal models (Command A Vision, Aya Vision, Embed v4) redefine how we extract, compress, and reason over documents. This guide compares DeepSeek-OCR vs Cohere for document AI, focusing on architecture, accuracy, efficiency, and deployment, so you can decide which is best for your workflow.

DeepSeek-OCR vs Cohere: what are we really comparing?

Strictly speaking, DeepSeek-OCR is a specialized open-source OCR + visual compression model, while Cohere provides a commercial document AI stack built from multiple models:

- DeepSeek-OCR (released Oct 20, 2025; arXiv:2510.18234) is a vision-language model built for what its paper calls “contexts optical compression”: it renders long text as images, encodes them into a small number of vision tokens, and decodes them back into text.

- Cohere does not ship a single “Cohere OCR” engine. Instead, document AI is built from:

  - Command A Vision (`command-a-vision-07-2025`) for OCR, charts, tables, and diagrams.

  - Embed v4.0 (`embed-v4.0`) for multimodal embeddings, including PDFs and images.

  - Rerank v3.5 (`rerank-v3.5`) for reordering retrieved chunks.

  - Command A (`command-a-03-2025`) and Command A Reasoning for long-context RAG and agentic workflows.

So when we say “DeepSeek-OCR vs Cohere” in 2025, the practical comparison is:

- DeepSeek-OCR as a high-compression OCR + layout + structured parsing engine you run yourself, often feeding another LLM.

- Cohere’s document AI stack as a managed, end-to-end solution for parsing, searching, and answering questions over PDFs and other documents.

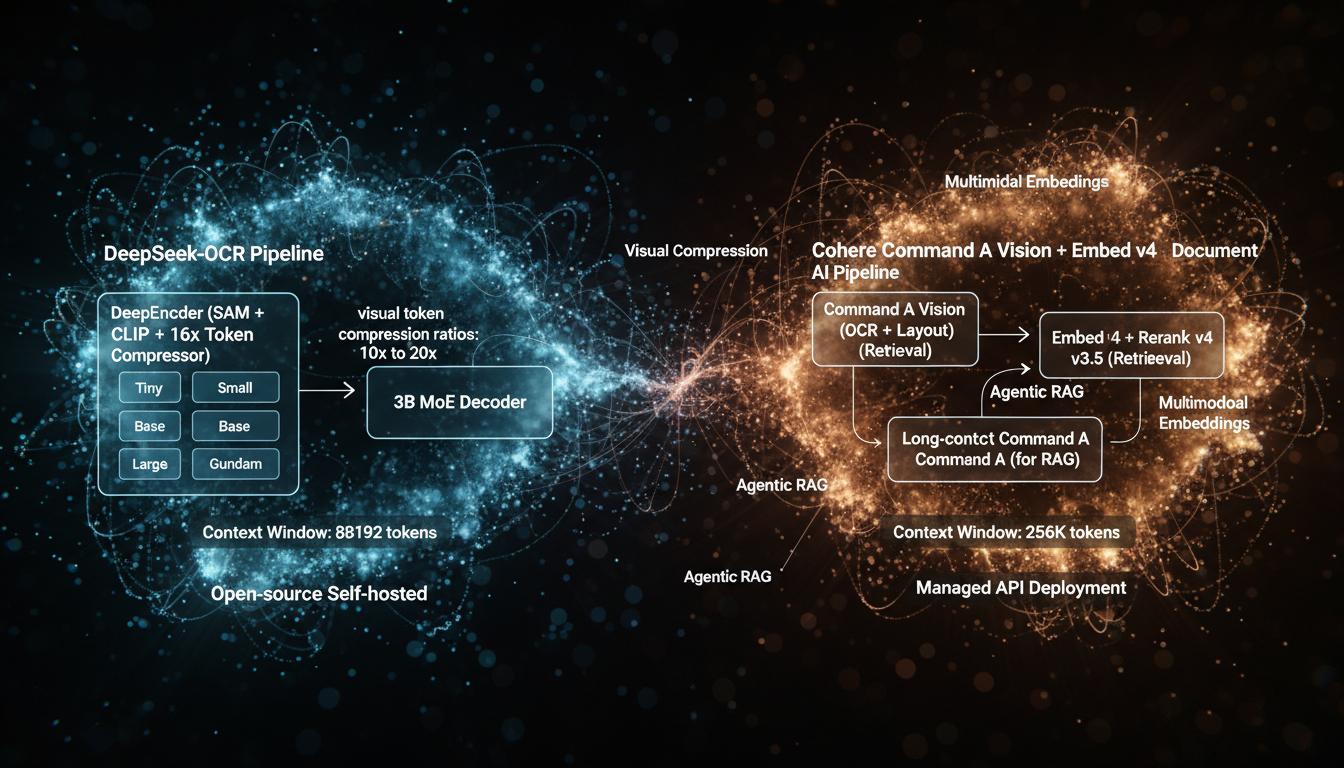

Architectures: visual compression vs multimodal enterprise stack

DeepSeek-OCR architecture (contexts optical compression)

DeepSeek-OCR is built specifically to answer the question: “How many vision tokens do we really need to represent long text?”

- Encoder: DeepEncoder (~380M params)

  - Serially connects a SAM-base vision backbone (window attention) and a CLIP-large encoder (global attention).

  - Uses a 16× convolutional token compressor between them to shrink patch tokens drastically.

  - Supports multiple “native” resolutions:

    - Tiny: 512×512 → 64 vision tokens

    - Small: 640×640 → 100 vision tokens

    - Base: 1024×1024 → 256 vision tokens

    - Large: 1280×1280 → 400 vision tokens

  - Dynamic “Gundam” modes that tile high-res pages while keeping the total tokens under ~800.

- Decoder: DeepSeek-3B-MoE (~570M activated params)

  - Mixture-of-experts language decoder that reconstructs text from compressed vision tokens.

  - Sequence length: 8192 text tokens for outputs and training.

- Training:

  - 70% OCR (30M+ PDF pages, 20M+ scene text images), 20% general vision, 10% text-only.

  - Nearly 100 languages for document OCR.

The result: DeepSeek-OCR can decode 600–1300-token English pages with:

- 96–98% OCR precision at ~7–10× compression (e.g. 600–1000 text tokens → 64–100 vision tokens).

- ~60% accuracy at ~20× compression (e.g. 1000+ text tokens rendered into 64 tokens).
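The compression figures above are plain ratios of text tokens to vision tokens. As a minimal sketch, assuming the per-mode token counts listed earlier in this guide, the arithmetic can be written out as follows. The helper names `compression_ratio` and `smallest_mode_within` are hypothetical and not part of DeepSeek-OCR.

```python
from typing import Optional

# Vision-token budgets per native resolution mode, as listed in this guide.
MODE_VISION_TOKENS = {
    "tiny": 64,    # 512x512
    "small": 100,  # 640x640
    "base": 256,   # 1024x1024
    "large": 400,  # 1280x1280
}


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Number of text tokens represented by each vision token."""
    if text_tokens <= 0 or vision_tokens <= 0:
        raise ValueError("token counts must be positive")
    return text_tokens / vision_tokens


def smallest_mode_within(text_tokens: int, max_ratio: float = 10.0) -> Optional[str]:
    """Pick the cheapest mode whose compression ratio is at most max_ratio.

    Returns None when even the largest mode would exceed max_ratio.
    """
    # Walk the modes from fewest to most vision tokens.
    for name, tokens in sorted(MODE_VISION_TOKENS.items(), key=lambda kv: kv[1]):
        if compression_ratio(text_tokens, tokens) <= max_ratio:
            return name
    return None


if __name__ == "__main__":
    for page_tokens in (600, 1000, 1300):
        print(page_tokens, smallest_mode_within(page_tokens))
```

The default `max_ratio` of 10 mirrors the region this guide describes as near-lossless; a workload that tolerates more decoding errors could raise it.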

Cohere architecture for document AI (Command A Vision + Embed v4)

Cohere’s stack is not an OCR engine alone; it’s a full document AI pipeline built around long-context LLMs and multimodal embeddings, accessed via API:

- Command A Vision (`command-a-vision-07-2025`)

  - Multimodal Command-family model (text + images).

  - Context length: 128K tokens, max output 8K.

  - Designed for:

    - OCR over images/PDF pages.

    - Charts, graphs, table understanding.

    - Diagram and scene analysis, multilingual image text (EN, PT, IT, FR, DE, ES).

- Embed v4.0 (`embed-v4.0`, Apr 2025)

  - Multimodal embeddings for text, images, and mixed text+image payloads (e.g. PDFs).

  - Vector sizes: 256, 512, 1024, 1536 (default) with 128K context.

  - State-of-the-art on text–text, text–image, and text–mixed retrieval benchmarks.

- Rerank v3.5 (`rerank-v3.5`)

  - Reorders candidate chunks (up to ~4K tokens each) for better RAG relevance.

- Command A / Command A Reasoning

  - `command-a-03-2025`: 111B parameters, 256K context, 8K output.

  - `command-a-reasoning-08-2025`: reasoning-focused, 256K context, 32K output.

  - Used as the brain for RAG, agents, and tools, consuming OCR’d text and embeddings.

The Cohere docs and cookbooks show typical document AI flows using:

PDF → parse to text (Google Doc AI / Textract / Unstructured / LlamaParse / Tesseract) → Embed v4 → Rerank → Command A for Q&A. With Command A Vision you can increasingly skip external OCR in favor of direct image/PDF understanding.
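The flow above is a two-stage retrieve-then-rerank pipeline: a cheap similarity search narrows the corpus, then a more careful scorer reorders the survivors before the LLM reads them. The sketch below illustrates only that control flow. Its scorers are toy stand-ins (bag-of-words cosine and term overlap), not Embed v4 or Rerank v3.5, and every function name is hypothetical.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector (NOT Embed v4)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int) -> List[str]:
    """Stage 1: score every chunk by vector similarity and keep the top k."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def rerank_score(query: str, chunk: str) -> float:
    """Stand-in reranker: share of query terms found in the chunk (NOT Rerank v3.5)."""
    terms = set(query.lower().split())
    if not terms:
        return 0.0
    return len(terms & set(chunk.lower().split())) / len(terms)


def retrieve_then_rerank(
    query: str, chunks: List[str], k: int = 3, top_n: int = 1
) -> List[str]:
    """Stage 2: reorder the k retrieved candidates and keep the best top_n."""
    candidates = retrieve(query, chunks, k)
    return sorted(candidates, key=lambda c: rerank_score(query, c), reverse=True)[:top_n]


if __name__ == "__main__":
    parsed_chunks = [
        "invoice total due 420 usd",
        "quarterly revenue grew in europe",
        "shipping address for the invoice",
    ]
    # The selected chunks would then be placed in the prompt of the answering model.
    print(retrieve_then_rerank("invoice total", parsed_chunks, k=2, top_n=1))
```

Keeping the two stages as separate functions makes it straightforward to swap each stand-in for a hosted model call without touching the orchestration.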

Accuracy & capabilities: DeepSeek-OCR vs Cohere

OCR quality and layout understanding

DeepSeek-OCR is benchmarked directly against leading OCR systems on OmniDocBench (CVPR 2025) and Fox:

- On OmniDocBench, DeepSeek-OCR:

  - At 100 tokens/page (Small mode), beats GOT-OCR2.0 (256 tokens) and approaches top-tier systems.

  - In Gundam mode (~795 tokens), outperforms MinerU 2.0, which uses ~6790 vision tokens/page.

- Handles:

  - Rich layouts (books, slides, financial reports, exam papers, magazines, newspapers).

  - Tables, formulas, charts (via OCR 2.0 data & deep parsing capabilities).

  - ~100 languages, including low-resource scripts, in PDF form.

In short: as of late 2025, DeepSeek-OCR is among the strongest open-source end-to-end document OCR parsers, especially when you factor in its token efficiency.

For Cohere, there is no single public benchmark labeled “Cohere OCR vs DeepSeek-OCR.” Instead:

- Command A Vision is marketed for:

  - OCR over scans and PDFs (with chart and table understanding).

  - Enterprise workflows like financial reports, invoices, and forms.

- Cohere’s document parsing cookbooks (2024–2025) show:

  - Using external OCR/parsers (Google Document AI, AWS Textract, Unstructured, LlamaParse, pytesseract) to get text.

  - Then using Embed v4 + Rerank + Command A for search and question answering.

This suggests that, in practice, Cohere focuses on downstream reasoning quality over parsed text rather than squeezing every last percentage point out of the OCR step itself.

Visual compression vs long-context reasoning

- DeepSeek-OCR strengths:

  - 10× visual compression at ~97% decoding precision on Fox (600–1000-token documents).

  - Works well with small decoders (~570M active params) for cheap inference.

  - Can be integrated into ultra-long-context RAG systems by:

    - Rendering conversation or corpus into pages.

    - Encoding via DeepSeek-OCR.

    - Feeding compressed tokens to a downstream LLM.

- Cohere strengths:

  - Native 128K–256K context in Command A and Command A Reasoning.

  - You can often skip aggressive compression by simply:

    - Chunking documents intelligently.

    - Using Embed v4 + Rerank for retrieval.

    - Letting Command A read large numbers of tokens directly.

  - For multi-document Q&A, Command A’s context is usually enough without exotic compression.

So if your bottleneck is GPU memory and context window, DeepSeek-OCR offers a research-grade way to compress. If your bottleneck is developer time and system complexity, Cohere’s long-context models plus embeddings may be simpler and still performant.

Beyond plain OCR: charts, tables, chemistry, and geometry

- DeepSeek-OCR:

  - Explicitly trained on:

    - Charts → HTML tables (OneChart-style).

    - Chemical formulas → SMILES strings.

    - Plane geometry → structured dictionary formats.

  - Supports “deep parsing”: recursively calling itself to parse embedded figures inside documents (charts, formulas, geometry, images).

  - Retains general visual understanding (captions, detection, grounding) thanks to CLIP-based encoder and general vision data.

- Cohere:

  - Command A Vision is advertised for:

    - Charts & graphs analysis.

    - Table understanding.

    - Document OCR + diagram reasoning.

  - Aya Vision (Mar 2025) adds:

    - Open-weight multimodal models (8B/32B) for multilingual image+text tasks in 23 languages.

  - However, those are general multimodal models, not specialized OCR 2.0 chart/chem/geometry engines with bespoke labels like DeepSeek-OCR.

If your use case is STEM-heavy document analysis (equations, chemical structures, complex charts), DeepSeek-OCR’s tailored OCR 2.0 data and deep parsing are a significant advantage. If you primarily need high-level insights and Q&A over documents (even with images), Cohere’s Command A Vision plus Command A Reasoning are strong candidates.

Performance, efficiency, and cost

Token efficiency and throughput

- DeepSeek-OCR:

  - On Fox (English docs, 600–1300 tokens), DeepSeek-OCR’s Small mode (100 tokens) yields:

    - ~98.5% precision at 600–700 tokens (~6.7× compression).

    - ~96.8% precision at 800–1000 tokens (~8.5–9.7× compression).

    - ~87–91.5% precision at 1000–1300 tokens (~10–12.6× compression).

  - On OmniDocBench, Gundam mode (~795 tokens) beats MinerU 2.0 using ~6790 tokens/page.

  - Throughput (from the DeepSeek blog):

    - Up to 200K pages/day on a single A100-40G.

    - ~33M pages/day on 20 nodes (160× A100-40G) in production.

- Cohere:

  - Command A is optimized for high throughput on 2× A100/H100 with 256K context.

  - Embed v4 + Rerank v3.5 are tuned for fast retrieval and re-ranking at scale (tens or hundreds of millions of vectors).

  - Token usage is higher than DeepSeek-OCR’s visually compressed representation, but:

    - You avoid a separate encode/decode step.

    - You gain simpler pipelines and fewer moving parts.

If GPU time is your dominant cost and you process billions of pages, DeepSeek-OCR’s 7–20× token reduction can deliver real savings. For moderate-scale enterprise workloads where engineering cost, SLAs, and managed hosting dominate, Cohere’s simpler long-context approach often wins overall.
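To make that trade-off concrete, here is a back-of-the-envelope capacity sketch. It assumes throughput scales linearly with GPU count from the single-A100 figure quoted above, which ignores batching, I/O, and scheduling overhead. The function names are hypothetical.

```python
# Single-GPU throughput quoted in this guide for one A100-40G.
PAGES_PER_GPU_PER_DAY = 200_000


def gpus_needed(pages_per_day: int, per_gpu: int = PAGES_PER_GPU_PER_DAY) -> int:
    """GPUs required for a daily page volume, ASSUMING linear scaling."""
    if pages_per_day < 0 or per_gpu <= 0:
        raise ValueError("pages_per_day must be >= 0 and per_gpu must be > 0")
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-pages_per_day // per_gpu)


def tokens_saved(pages: int, text_tokens_per_page: int, vision_tokens_per_page: int) -> int:
    """Downstream tokens avoided when pages are passed as vision tokens instead of text."""
    return pages * (text_tokens_per_page - vision_tokens_per_page)


if __name__ == "__main__":
    # 33M pages/day at 200K pages per GPU per day.
    print(gpus_needed(33_000_000))
    # One million ~1000-token pages encoded in Small mode (100 vision tokens each).
    print(tokens_saved(1_000_000, 1000, 100))
```

Under the linear assumption, 33M pages/day works out to 165 GPUs, which is in line with the 160-GPU cluster cited above.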

Deployment model and operational complexity

- DeepSeek-OCR:

  - Open-source (MIT license) on GitHub; weights on Hugging Face.

  - You run it yourself via:

    - vLLM (`deepseek-ai/DeepSeek-OCR` integration as of Oct 23, 2025).

    - Transformers (`AutoModel.from_pretrained("deepseek-ai/DeepSeek-OCR")` with a custom `infer` method).

  - Reference environment is CUDA 11.8 + PyTorch 2.6.0; benefits from GPUs (A100, 4090) but can run on CPU (slow).

  - You must build:

    - PDF rendering.

    - Job orchestration & scaling.

    - Downstream LLM integration (for search, QA, agents).

- Cohere:

  - Managed API with SLAs, SDKs, and enterprise support.

  - Models accessible via:

    - Cohere’s own API (v2 chat, embed, rerank).

    - Cloud marketplaces (Oracle OCI Generative AI, Azure AI Foundry, AWS SageMaker/Bedrock for Embed/Rerank).

    - Compatibility API for OpenAI SDKs.

  - Cookbooks for end-to-end:

    - PDF parsing + RAG.

    - Enterprise document parsing flows.

    - Agentic workflows (multi-step tools, CSV/invoice extraction).

DeepSeek-OCR is ideal if you want full control, on-prem deployment, and zero per-token license fees, and you’re comfortable operating GPU infrastructure. Cohere is ideal if you want managed document AI with enterprise contracts, support, and multi-cloud integration.
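When self-hosting, one small piece of glue you will likely write is a mapping from the resolution modes described earlier to inference settings. The sketch below is a plain lookup table. The keyword names `base_size`, `image_size`, and `crop_mode` are an assumption based on the model card's example call to the custom `infer` method, and the Gundam values (640×640 tiles plus a 1024×1024 global view) are likewise assumed, so check both against the current model card before relying on them. `infer_kwargs` is a hypothetical helper.

```python
from typing import Dict, Union

Preset = Dict[str, Union[int, bool]]

# Resolution presets mirroring the modes listed in this guide.
# ASSUMPTION: keyword names follow the model card's example `infer` call.
MODE_PRESETS: Dict[str, Preset] = {
    "tiny": {"base_size": 512, "image_size": 512, "crop_mode": False},
    "small": {"base_size": 640, "image_size": 640, "crop_mode": False},
    "base": {"base_size": 1024, "image_size": 1024, "crop_mode": False},
    "large": {"base_size": 1280, "image_size": 1280, "crop_mode": False},
    # ASSUMPTION: Gundam tiles the page into 640x640 crops plus one 1024x1024 view.
    "gundam": {"base_size": 1024, "image_size": 640, "crop_mode": True},
}


def infer_kwargs(mode: str) -> Preset:
    """Return a copy of the preset for `mode` (case-insensitive)."""
    try:
        return dict(MODE_PRESETS[mode.lower()])
    except KeyError:
        raise ValueError(
            f"unknown mode {mode!r}; expected one of {sorted(MODE_PRESETS)}"
        ) from None


if __name__ == "__main__":
    print(infer_kwargs("gundam"))
```

The returned dictionary is meant to be unpacked into the model's `infer` call alongside whatever prompt and file arguments that method expects. Returning a copy keeps callers from mutating the shared presets.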

Feature comparison: DeepSeek-OCR vs Cohere document AI stack

| Aspect | DeepSeek-OCR (Oct 2025) | Cohere (Command A Vision + stack, 2025) |
|---|---|---|
| Core role | Open-source OCR + visual compression VLM | Commercial multimodal + RAG + agent platform |
| Release highlights | Model & paper released 2025‑10‑20; vLLM support 2025‑10‑23 | Command A 03‑2025; Aya Vision 03‑2025; Command A Vision 07‑2025; Embed v4 04‑2025 |
| Context / token limits | Decoder context length 8,192 tokens; vision tokens 64–800+ per page | Command A: 256K; Command A Vision: 128K; Embed v4: 128K; Rerank: 4K per doc |
| Compression | 7–10× near-lossless; ~20× still ~60% accurate on Fox benchmark | No explicit compression; relies on large context + embeddings |
| OCR quality | SOTA-level on OmniDocBench among end‑to‑end models with very few tokens | Strong but not benchmarked as a standalone OCR; focus is full-stack QA |
| Layout & structure | Explicit document layout, tables, formulas, charts, geometry | Tables, charts, diagrams supported via Vision models and prompt design |
| Multilingual documents | ~100 languages in PDFs; strong Chinese/English, support for minority languages | Command A Vision + Aya Vision cover 20+ languages for multimodal tasks |
| Deployment | Self-hosted via vLLM / HF; full infra burden on you | Managed SaaS API, plus cloud integrations (OCI, Azure, SageMaker) |
| Licensing & cost | MIT-licensed model; infra cost only, no per-token license | Usage-based API pricing; enterprise contracts; no infra management |
| Best-for use cases | High-volume OCR, training data generation, research on context compression, STEM document parsing | Enterprise RAG over PDFs, agentic workflows, long-context document QA with minimal ops overhead |

Which is best for your document AI in 2025?

Choose DeepSeek-OCR if you:

- Need maximum token efficiency because you:

  - Train or serve your own long-context LLMs.

  - Operate at massive scale (tens of millions of pages/day).

- Care about open-source, on-prem, or air-gapped deployments with full control.

- Work with complex technical documents (STEM, chemistry, math-heavy PDFs) where:

  - Charts → tables, geometry → structured descriptions, and formulas → SMILES or LaTeX really matter.

- Are building a custom pipeline where DeepSeek-OCR is the front-end document encoder feeding your own retrieval and reasoning stack (e.g., DeepSeek-VL2, Qwen2.5-VL, or other LLMs).

Choose Cohere (Command A Vision + stack) if you:

- Want fast time-to-production with:

  - Managed APIs.

  - Cookbooks for PDF parsing, RAG, and agents.

  - Enterprise support and SLAs.

- Are primarily doing document Q&A, summarization, search, and analytics, not building OCR engines.

- Need very long context windows (up to 256K) for multi-document reasoning without integrating custom compression.

- Operate in regulated industries and value:

  - Private deployment options (OCI, VPC).

  - Compliance and contract-based guarantees.

- Are standardizing on a single vendor for LLMs, embeddings, rerankers, and agents.

Hybrid strategy: best of both worlds

For advanced teams, the most powerful option in 2025 is often a hybrid:

- Use DeepSeek-OCR to compress and parse massive document corpora into structured Markdown/HTML (with tables, formulas, chart tables, etc.).

- Index that text via Cohere Embed v4 and serve RAG with Command A or Command A Reasoning, plus Rerank v3.5.

- For ad-hoc visual questions (e.g., “Explain this chart on page 37”), call Command A Vision directly on the PDF or screenshot.

This way you get DeepSeek-OCR’s token savings and rich parsing plus Cohere’s long-context reasoning and mature enterprise tooling.
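For the ad-hoc visual question step, the request typically carries the page image inline. The sketch below only builds the user message and sends nothing. The content-part shape (`text` plus `image_url` with a base64 data URL) is an assumption about how Cohere's v2 chat endpoint accepts images, so confirm it against the current API reference. `image_data_url` and `build_vision_message` are hypothetical helpers.

```python
import base64
from typing import Any, Dict


def image_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_vision_message(question: str, image_bytes: bytes, mime: str = "image/png") -> Dict[str, Any]:
    """Build one user message pairing a question with a page image.

    ASSUMPTION: the content-part layout below matches what the chat API expects.
    """
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": image_data_url(image_bytes, mime)}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice this would be a rendered PDF page or screenshot.
    message = build_vision_message("Explain this chart on page 37", b"\x89PNG")
    print(message["content"][0]["text"])
    print(message["content"][1]["image_url"]["url"][:30])
```

The resulting dictionary would be passed in the `messages` list of a chat request that names `command-a-vision-07-2025` as the model.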

Conclusion: DeepSeek-OCR vs Cohere for “best OCR 2025”

As of November 2025, “best OCR 2025” has two distinct winners, depending on what you mean by “best”:

- DeepSeek-OCR is the best choice if your priority is raw OCR accuracy per token, open-source control, and advanced document parsing (charts, formulas, geometry) at extreme scale.

- Cohere’s Command A Vision + Embed v4 stack is the best choice if your priority is end-to-end document AI – parsing, search, RAG, and agents – with minimal infrastructure and strong enterprise support.

For most enterprises starting a document AI initiative in 2025, Cohere will get you from PDFs to production Q&A faster. For research labs, infrastructure providers, and AI-native companies chasing 10–20× context compression or building their own models, DeepSeek-OCR is a breakthrough foundation. The optimal path for many teams is to adopt Cohere for reasoning and experiment with DeepSeek-OCR at the ingestion layer to reduce long-term compute costs and unlock new multimodal capabilities.